Don't fear the unlabelled: safe deep semi-supervised learning via simple debiasing

Paper and Code

Mar 16, 2022

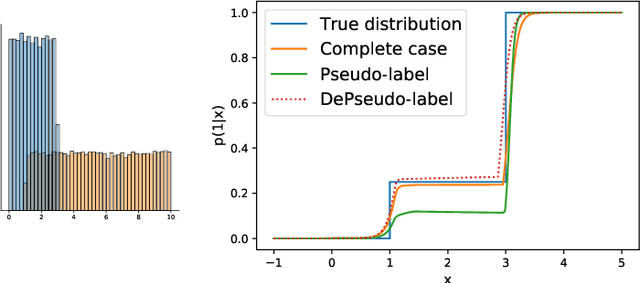

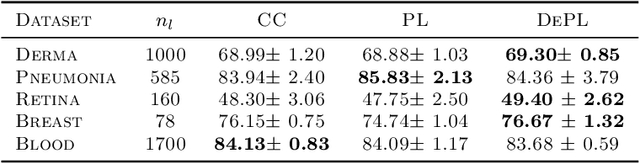

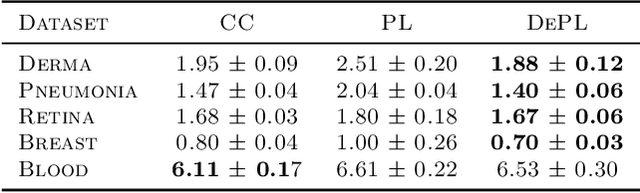

Semi supervised learning (SSL) provides an effective means of leveraging unlabelled data to improve a model's performance. Even though the domain has received a considerable amount of attention in the past years, most methods present the common drawback of being unsafe. By safeness we mean the quality of not degrading a fully supervised model when including unlabelled data. Our starting point is to notice that the estimate of the risk that most discriminative SSL methods minimise is biased, even asymptotically. This bias makes these techniques untrustable without a proper validation set, but we propose a simple way of removing the bias. Our debiasing approach is straightforward to implement, and applicable to most deep SSL methods. We provide simple theoretical guarantees on the safeness of these modified methods, without having to rely on the strong assumptions on the data distribution that SSL theory usually requires. We evaluate debiased versions of different existing SSL methods and show that debiasing can compete with classic deep SSL techniques in various classic settings and even performs well when traditional SSL fails.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge