Domain Generalization via Contrastive Causal Learning

Paper and Code

Oct 06, 2022

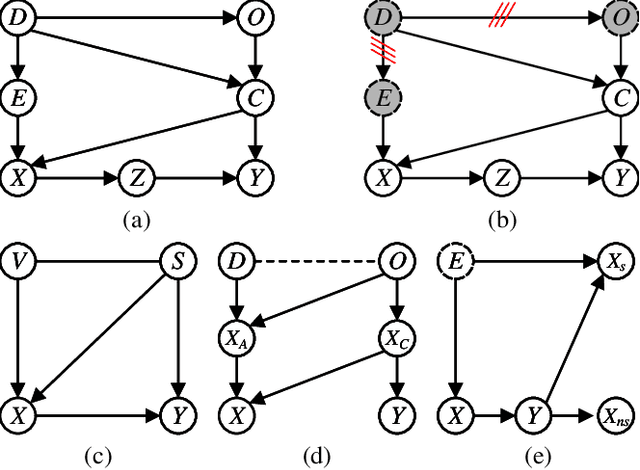

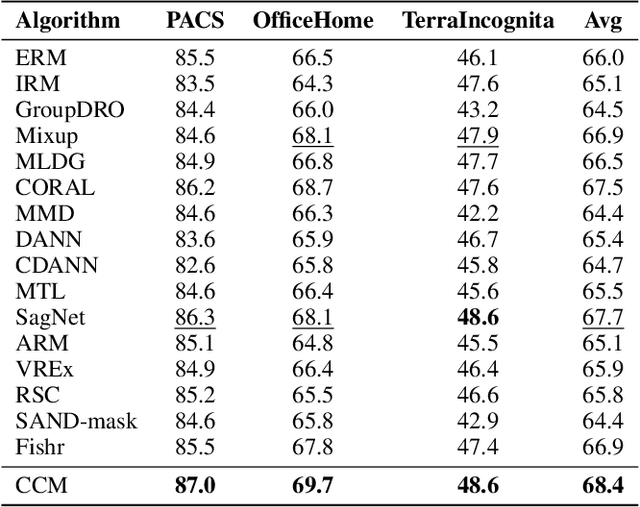

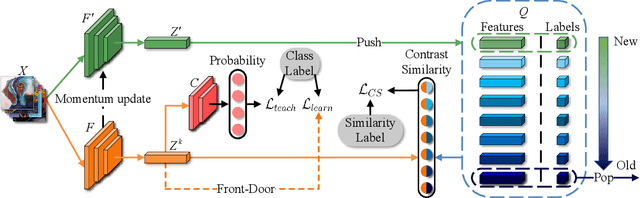

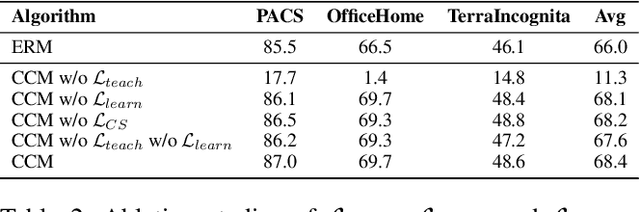

Domain Generalization (DG) aims to learn a model from a set of source domains that generalizes well to unseen target domains. Building on the idea of invariant causal mechanisms, much effort has gone into learning robust causal effects that are determined by the object yet insensitive to domain changes. Although causal effects are invariant, they are difficult to quantify and optimize. Inspired by the way humans adapt to new environments using prior knowledge, we develop a novel Contrastive Causal Model (CCM) that transfers unseen images to taught knowledge, namely the features of seen images, and quantifies the causal effects based on that taught knowledge. Because this transfer is affected by domain shifts in DG, we propose a more inclusive causal graph to describe the DG task. Based on this causal graph, CCM controls the domain factor to cut off excess causal paths and uses the remaining part to calculate the causal effects of images on labels via the front-door criterion. Specifically, CCM is composed of three components: (i) domain-conditioned supervised learning, which teaches CCM the correlation between images and labels; (ii) causal effect learning, which helps CCM measure the true causal effects of images on labels; and (iii) contrastive similarity learning, which clusters the features of images that belong to the same class and provides a quantification of similarity. Finally, we test the performance of CCM on multiple datasets, including PACS, OfficeHome, and TerraIncognita. Extensive experiments demonstrate that CCM surpasses previous DG methods by clear margins.
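For readers unfamiliar with the front-door criterion mentioned in the abstract, the following is a minimal sketch of the textbook front-door adjustment, P(y | do(x)) = Σ_z P(z | x) Σ_x' P(y | x', z) P(x'), on a small discrete toy model. It is not CCM's estimator, which the abstract does not specify. The variable roles (X as input, Z as mediator, Y as label, U as a hidden confounder), all probability values, and all function names are assumptions chosen purely for illustration.

```python
from itertools import product

# Hypothetical toy structural model (all numbers are made up for illustration):
# U -> X, X -> Z, Z -> Y, U -> Y, where U is an unobserved confounder.
P_U = {0: 0.5, 1: 0.5}                   # P(U=u)
P_X1_GIVEN_U = {0: 0.2, 1: 0.8}          # P(X=1 | U=u)
P_Z1_GIVEN_X = {0: 0.1, 1: 0.9}          # P(Z=1 | X=x)
P_Y1_GIVEN_ZU = {(0, 0): 0.1, (0, 1): 0.5,
                 (1, 0): 0.4, (1, 1): 0.9}  # P(Y=1 | Z=z, U=u)


def bern(p1, value):
    """Probability of `value` for a binary variable with P(1) = p1."""
    return p1 if value == 1 else 1.0 - p1


def observational_joint():
    """P(x, z, y) with the hidden confounder U marginalized out."""
    joint = {}
    for x, z, y in product((0, 1), repeat=3):
        joint[(x, z, y)] = sum(
            P_U[u]
            * bern(P_X1_GIVEN_U[u], x)
            * bern(P_Z1_GIVEN_X[x], z)
            * bern(P_Y1_GIVEN_ZU[(z, u)], y)
            for u in (0, 1)
        )
    return joint


def front_door(joint, x, y=1):
    """Front-door estimate of P(Y=y | do(X=x)) from observational data only."""
    p_x = {a: sum(joint[(a, z, b)] for z in (0, 1) for b in (0, 1))
           for a in (0, 1)}
    total = 0.0
    for z in (0, 1):
        p_z_given_x = sum(joint[(x, z, b)] for b in (0, 1)) / p_x[x]
        inner = 0.0
        for x2 in (0, 1):
            p_x2_z = sum(joint[(x2, z, b)] for b in (0, 1))
            inner += joint[(x2, z, y)] / p_x2_z * p_x[x2]  # P(y | x', z) P(x')
        total += p_z_given_x * inner
    return total


def naive_conditional(joint, x, y=1):
    """Plain P(Y=y | X=x), which is biased by the hidden confounder."""
    p_x = sum(joint[(x, z, b)] for z in (0, 1) for b in (0, 1))
    return sum(joint[(x, z, y)] for z in (0, 1)) / p_x


def true_effect(x, y=1):
    """Ground-truth P(Y=y | do(X=x)), computed with access to U."""
    return sum(
        P_U[u] * bern(P_Z1_GIVEN_X[x], z) * bern(P_Y1_GIVEN_ZU[(z, u)], y)
        for u in (0, 1) for z in (0, 1)
    )


if __name__ == "__main__":
    joint = observational_joint()
    print("front-door:", front_door(joint, 1))
    print("true do():  ", true_effect(1))
    print("naive cond.:", naive_conditional(joint, 1))
```

In this toy model the front-door estimate matches the ground-truth interventional probability even though U is never observed, while the plain conditional P(Y | X) is inflated by the confounder. This is the kind of gap between correlation and causal effect that the abstract's components (i) and (ii) distinguish.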
