Domain Adapting Ability of Self-Supervised Learning for Face Recognition

Paper and Code

Feb 26, 2021

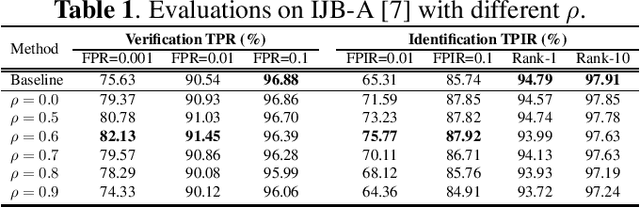

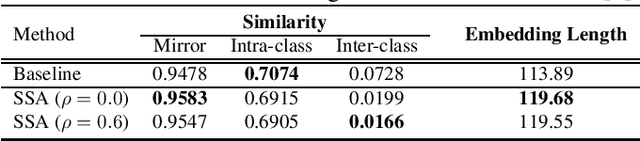

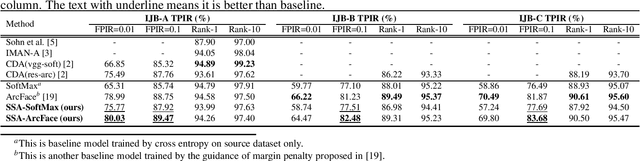

Although deep convolutional networks achieve strong performance on face recognition tasks, domain discrepancy remains a challenge in real-world applications. When the training data (source domain) does not cover the conditions seen at test time (target domain), the learned models degrade. In face recognition, the classes in the two domains usually differ, so classical domain adaptation approaches, which assume that the domains share classes, are not well suited to this problem. In this paper, self-supervised learning is adopted to learn an embedding space in which the subjects in the target domain are more distinguishable. The learning objective is to maximize the similarity between the embedding of each image and the embedding of its mirror image, in both domains. Experiments show competitive results compared with prior work. To explain why the approach performs this well, we further discuss how it affects the learning of embeddings.
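The mirror-similarity objective described in the abstract can be illustrated with a small sketch. The abstract does not give the exact loss, the similarity measure, or the network, so everything concrete here is an assumption: the mirror is taken to be a horizontal flip, similarity is taken to be cosine similarity, the loss is written as one minus the mean similarity, and `toy_embed` is a hypothetical linear stand-in for the face network.

```python
import math
import random


def mirror(image):
    """Horizontally flip an image given as a list of pixel rows (assumed mirror)."""
    return [row[::-1] for row in image]


def toy_embed(image, weights):
    """Hypothetical stand-in for the face network: linear map of flattened pixels."""
    pixels = [p for row in image for p in row]
    return [sum(w * p for w, p in zip(w_row, pixels)) for w_row in weights]


def cosine_similarity(u, v):
    """Cosine similarity between two vectors, with a small epsilon for stability."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def mirror_similarity_loss(images, embed):
    """Assumed loss: 1 - mean cosine similarity between each image and its mirror."""
    sims = [cosine_similarity(embed(img), embed(mirror(img))) for img in images]
    return 1.0 - sum(sims) / len(sims)


rng = random.Random(0)
height, width, dim = 4, 4, 3
weights = [[rng.uniform(-1, 1) for _ in range(height * width)] for _ in range(dim)]


def embed(image):
    return toy_embed(image, weights)


def random_image():
    return [[rng.random() for _ in range(width)] for _ in range(height)]


# No identity labels are needed, so images from both domains can be used.
source_batch = [random_image() for _ in range(5)]
target_batch = [random_image() for _ in range(5)]
loss = mirror_similarity_loss(source_batch + target_batch, embed)
print(f"mirror-similarity loss over both domains: {loss:.4f}")
```

Because the objective uses only an image and its flipped copy, it needs no class labels, which is what makes it usable on a target domain whose identities do not appear in the source domain. In practice it would be minimized by gradient descent on the network parameters, and it may be combined with a supervised loss on the source domain; neither detail is stated in the abstract.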
