Domain Adaptation without Model Transferring


Aug 03, 2021

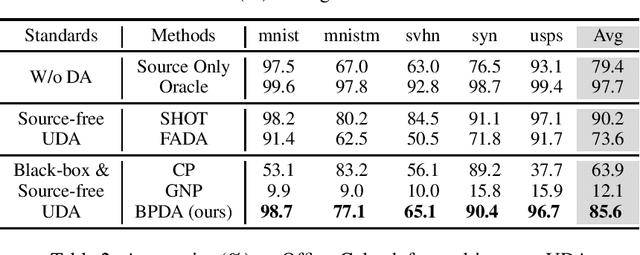

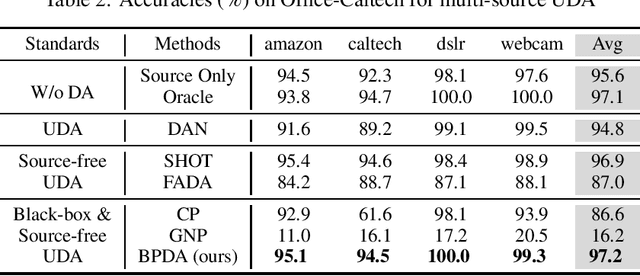

In recent years, researchers have paid increasing attention to the threats that deep learning models pose to data security and privacy, especially in the field of domain adaptation. Existing unsupervised domain adaptation (UDA) methods can achieve promising performance without transferring data from the source domain to the target domain. However, UDA based on representation alignment or self-supervised pseudo-labeling still relies on transferred source models. In many data-critical scenarios, methods based on model transferring may be vulnerable to membership inference attacks and expose private data. In this paper, we address a challenging new setting in which the source models cannot be transferred to the target domain. We propose Domain Adaptation without Source Model, which refines information from the source model. To obtain more informative results, we further propose Distributionally Adversarial Training (DAT) to align the distribution of the source data with that of the target data. Experimental results on the Digit-Five, Office-Caltech, Office-31, Office-Home, and DomainNet benchmarks demonstrate the feasibility of our method without model transferring.
