Does Thermal data make the detection systems more reliable?


Nov 09, 2021

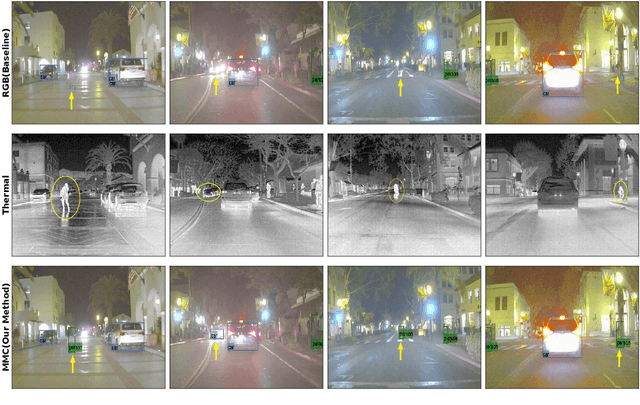

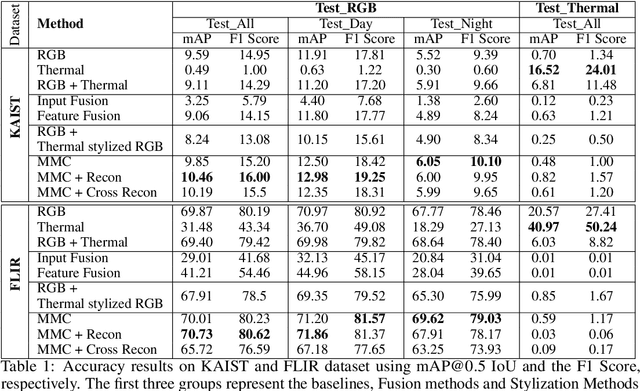

Deep learning-based detection networks have made remarkable progress in autonomous driving systems (ADS). An ADS should perform reliably across a variety of ambient lighting and adverse weather conditions. However, luminance degradation and visual obstructions (such as glare and fog) cause the visual camera to produce poor-quality images, which degrades detection performance. To overcome these challenges, we explore leveraging a different data modality that is disparate from, yet complementary to, the visual data. We propose a comprehensive detection system based on a multimodal-collaborative framework that learns from both RGB (from visual cameras) and thermal (from infrared cameras) data. This framework trains two networks collaboratively, giving each the flexibility to learn the optimal features of its own modality while also incorporating the complementary knowledge of the other. Our extensive empirical results show that while the improvement in accuracy is nominal, the value lies in challenging and extremely difficult edge cases, which are crucial in safety-critical applications such as autonomous driving. We provide a holistic view of both the merits and limitations of using a thermal imaging system in detection.
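The abstract does not specify how the two networks exchange knowledge, so the following is only an illustrative sketch of one common way to train two networks collaboratively: each network minimizes its own supervised loss plus a mimicry term that pulls its predictions toward those of the other network. The function names, the `mimic_weight` parameter, and the reduction of detection to a single classification output are all assumptions made for illustration, not the authors' method.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Supervised loss against the ground-truth class index."""
    return -math.log(probs[label])

def kl_divergence(p, q):
    """KL(p || q): how far q is from the reference distribution p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def collaborative_losses(rgb_logits, thermal_logits, label, mimic_weight=1.0):
    """Hypothetical per-sample losses for two collaboratively trained networks.

    Each network keeps its own supervised term (so it can learn features
    suited to its modality) and adds a mimicry term toward the other
    network's predictions (so it absorbs complementary knowledge).
    """
    p_rgb = softmax(rgb_logits)
    p_thermal = softmax(thermal_logits)
    loss_rgb = cross_entropy(p_rgb, label) + mimic_weight * kl_divergence(p_thermal, p_rgb)
    loss_thermal = cross_entropy(p_thermal, label) + mimic_weight * kl_divergence(p_rgb, p_thermal)
    return loss_rgb, loss_thermal

# Example: the RGB network is confident in class 0, the thermal network is not.
loss_rgb, loss_thermal = collaborative_losses([2.0, 0.5, -1.0], [-1.0, 0.5, 2.0], label=0)
```

When the two networks agree, the mimicry term vanishes and each loss reduces to plain cross-entropy; when they disagree, both are penalized, with the larger total loss falling on the network that is further from the label.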
