Does the brain represent words? An evaluation of brain decoding studies of language understanding


Jun 02, 2018

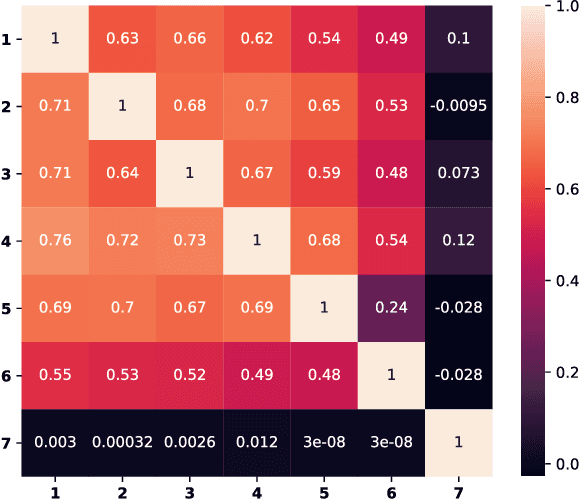

Language decoding studies have identified word representations which can be used to predict brain activity in response to novel words and sentences (Anderson et al., 2016; Pereira et al., 2018). The unspoken assumption of these studies is that, during processing, linguistic information is transformed into some shared semantic space, and those semantic representations are then used for a variety of linguistic and non-linguistic tasks. We claim that current studies vastly underdetermine the content of these representations, the algorithms which the brain deploys to produce and consume them, and the computational tasks which they are designed to solve. We illustrate this indeterminacy with an extension of the sentence-decoding experiment of Pereira et al. (2018), showing how standard evaluations fail to distinguish between language processing models which deploy different mechanisms and which are optimized to solve very different tasks. We conclude by suggesting changes to the brain decoding paradigm which can support stronger claims of neural representation.
