Do language models make human-like predictions about the coreferents of Italian anaphoric zero pronouns?

Paper and Code

Aug 30, 2022

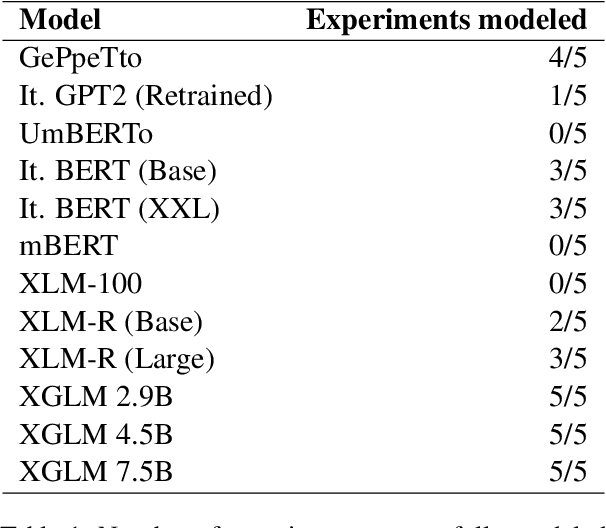

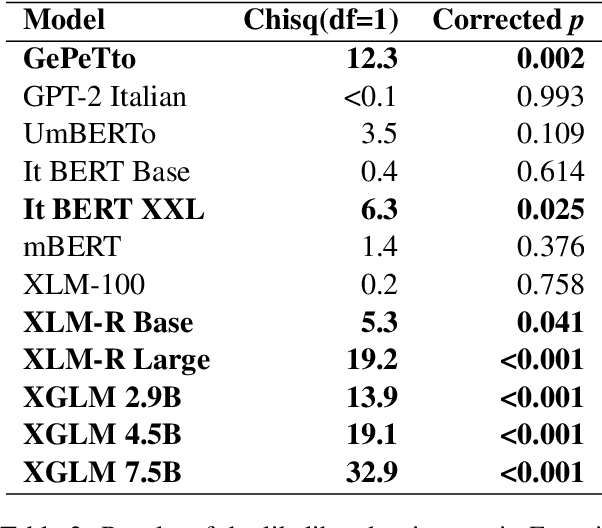

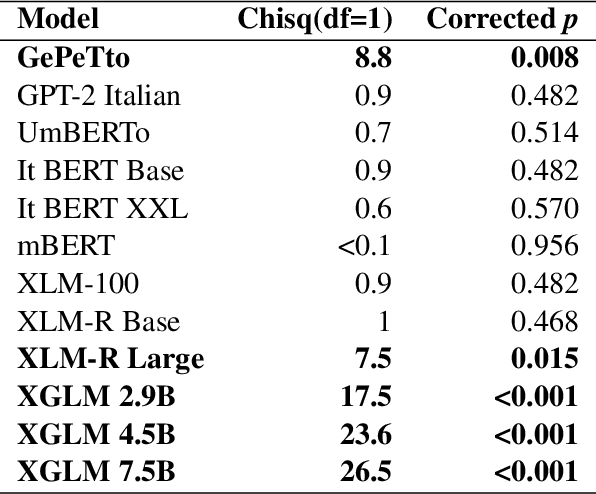

Some languages allow arguments to be omitted in certain contexts. Yet human language comprehenders reliably infer the intended referents of these zero pronouns, in part because they construct expectations about which referents are more likely. We ask whether Neural Language Models also extract the same expectations. We test whether 12 contemporary language models display expectations that reflect human behavior when exposed to sentences with zero pronouns from five behavioral experiments conducted in Italian by Carminati (2005). We find that three models - XGLM 2.9B, 4.5B, and 7.5B - capture the human behavior from all the experiments, with others successfully modeling some of the results. This result suggests that human expectations about coreference can be derived from exposure to language, and also indicates features of language models that allow them to better reflect human behavior.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge