Divide-and-Conquer Dynamics in AI-Driven Disempowerment

Oct 09, 2023

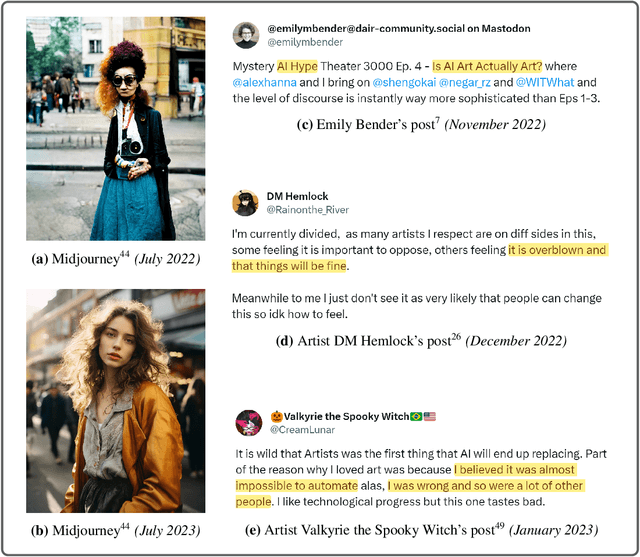

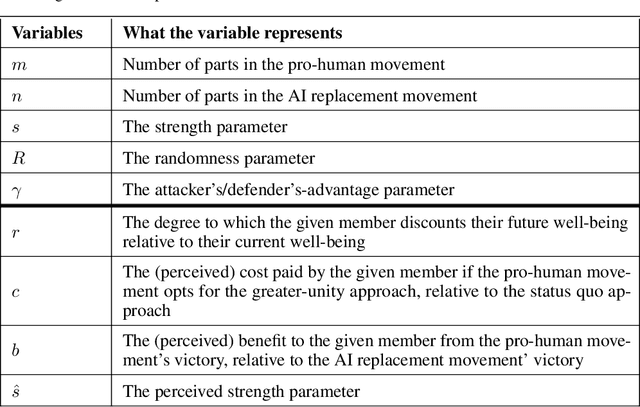

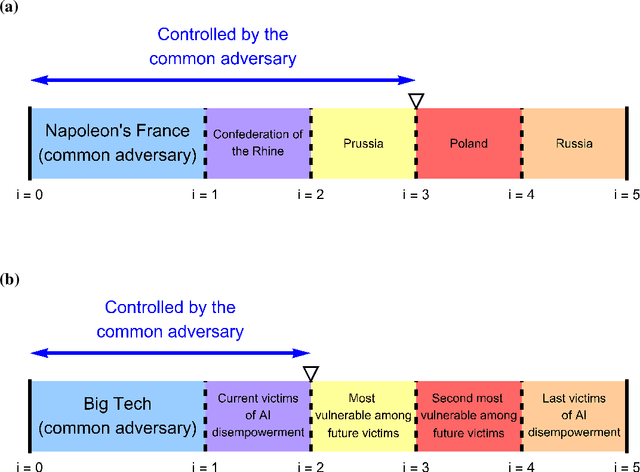

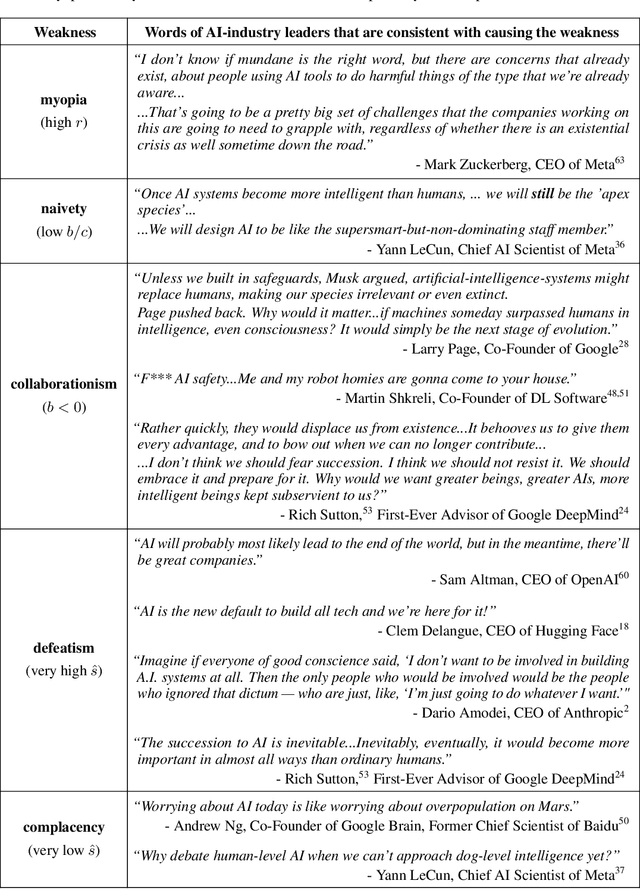

AI companies are attempting to create AI systems that outperform humans at most economically valuable work. Current AI models are already automating away the livelihoods of some artists, actors, and writers. Yet there is infighting between those who prioritize current harms and those who prioritize future harms. We construct a game-theoretic model of conflict to study the causes and consequences of this disunity. Our model also helps explain why, throughout history, stakeholders sharing a common threat have found it advantageous to unite against it, and why the common threat has in turn found it advantageous to divide and conquer. Under realistic parameter assumptions, our model makes several predictions that find preliminary corroboration in the historical-empirical record. First, current victims of AI-driven disempowerment need future victims to realize that their interests are also under serious and imminent threat, so that future victims are incentivized to support current victims in solidarity. Second, the movement against AI-driven disempowerment can become more united, and thereby more likely to prevail, if its members believe that their efforts will be successful rather than futile. Finally, the movement can better unite and prevail if its members are less myopic. Myopic members prioritize their future well-being less than their present well-being, and are thus disinclined to support current victims in solidarity today at personal cost, even if this is necessary to counter the shared threat of AI-driven disempowerment.
