Divide-and-Conquer based Ensemble to Spot Emotions in Speech using MFCC and Random Forest


Oct 05, 2016

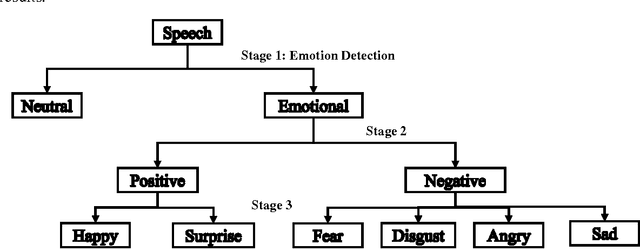

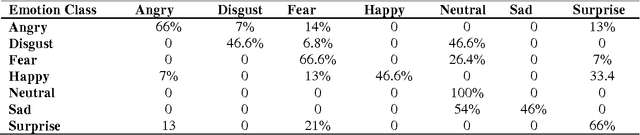

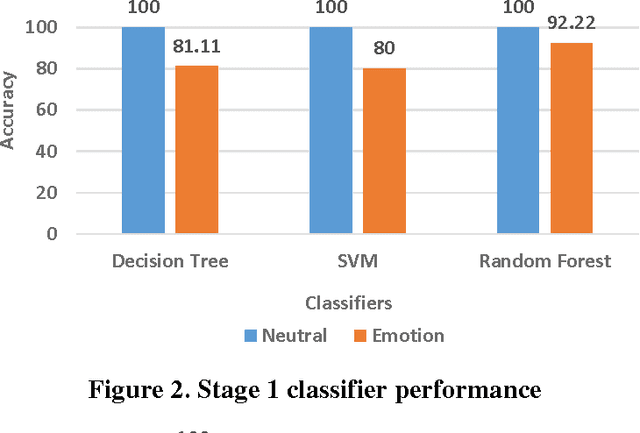

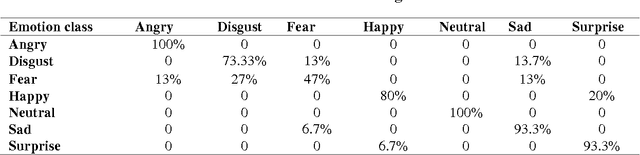

Besides spoken words, speech signals also carry information about the speaker's gender, age, and emotional state, which can be used in a variety of speech analysis applications. This paper proposes a divide-and-conquer strategy for ensemble classification to recognize emotions in speech. The intrinsic hierarchy of emotions is used to construct an emotion tree, which breaks the recognition task into smaller subtasks. The proposed framework generates predictions in three phases. First, the input speech signal is classified as neutral or emotional. If it is classified as emotional, the second phase further classifies it as positive or negative. Finally, the individual positive or negative emotion is identified based on the outcomes of the previous stages. Several experiments were performed on a widely used benchmark dataset. The proposed method achieved improved recognition rates compared with several other approaches.
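The three-phase routing described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `predict_emotion`, the toy threshold classifiers, and the emotion labels (`happy`, `surprise`, `angry`, `sad`) are all assumptions chosen for illustration. In practice each stage would presumably be a Random Forest trained on MFCC features, as the title suggests.

```python
from typing import Callable, Sequence

Features = Sequence[float]
Classifier = Callable[[Features], str]


def predict_emotion(
    features: Features,
    detect: Classifier,
    polarity: Classifier,
    positive: Classifier,
    negative: Classifier,
) -> str:
    """Route one feature vector through a three-phase emotion tree."""
    # Phase 1: neutral vs. emotional.
    if detect(features) == "neutral":
        return "neutral"
    # Phase 2: positive vs. negative, only reached for emotional speech.
    branch = polarity(features)
    # Phase 3: pick the individual emotion within the chosen branch.
    if branch == "positive":
        return positive(features)
    return negative(features)


# Hypothetical stand-ins for trained stage classifiers (assumptions,
# not models from the paper). Each maps a feature vector to a label.
def toy_detect(f: Features) -> str:
    return "emotional" if abs(f[0]) > 0.5 else "neutral"


def toy_polarity(f: Features) -> str:
    return "positive" if f[0] > 0 else "negative"


def toy_positive(f: Features) -> str:
    return "happy" if f[1] > 0 else "surprise"


def toy_negative(f: Features) -> str:
    return "angry" if f[1] > 0 else "sad"


def classify(f: Features) -> str:
    """Convenience wrapper that wires the toy stages together."""
    return predict_emotion(f, toy_detect, toy_polarity, toy_positive, toy_negative)
```

Each stage only has to solve a smaller problem than a flat multi-class classifier would, which is the point of the divide-and-conquer design. The cost is that a phase 1 or phase 2 error cannot be corrected by later stages.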
