Distributed Voltage Regulation of Active Distribution System Based on Enhanced Multi-agent Deep Reinforcement Learning


May 31, 2020

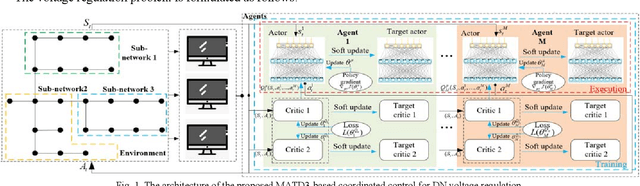

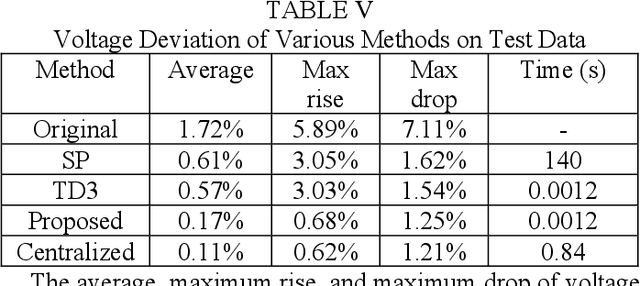

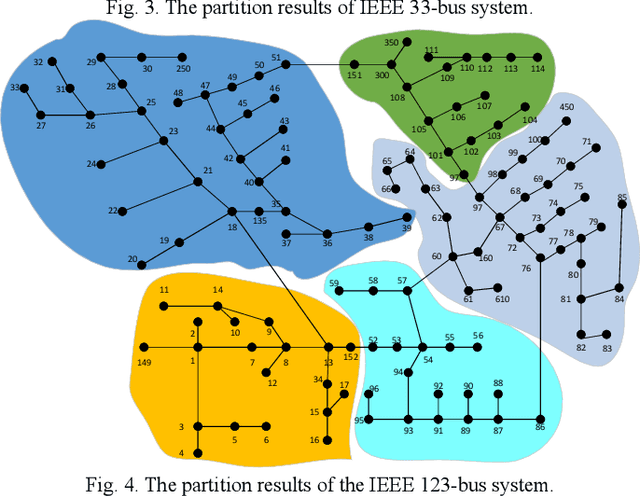

This paper proposes a data-driven distributed voltage control approach based on spectral clustering and an enhanced multi-agent deep reinforcement learning (MADRL) algorithm. Unsupervised clustering first decomposes the distribution system into several sub-networks according to the sensitivity of voltage to reactive power. The distributed control problem over these sub-networks is then modeled as a Markov game and solved by the enhanced MADRL algorithm, with each sub-network treated as an adaptive agent. Each agent uses deep neural networks to approximate its policy function and action-value function. All agents are trained centrally to learn the optimal coordinated voltage regulation strategy, but they execute in a distributed manner, making decisions from local information only. The proposed method significantly reduces the requirements for communication and for knowledge of system parameters. It also deals effectively with uncertainties and provides online coordinated control based on the latest local information. Comparisons with existing model-based and data-driven methods on the IEEE 33-bus and 123-bus systems demonstrate the effectiveness and benefits of the proposed approach.
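The partitioning step can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `spectral_bipartition`, the 6-bus sensitivity matrix, and its values are hypothetical, and the split into exactly two groups by the sign of the Fiedler vector is a simplification of general spectral clustering, which would typically run k-means on the first k eigenvectors to obtain several sub-networks.

```python
import numpy as np


def spectral_bipartition(sensitivity):
    """Split buses into two groups from a voltage/reactive-power sensitivity matrix.

    Hypothetical helper: uses the sign of the Fiedler vector of the
    normalized graph Laplacian (a two-cluster special case).
    """
    # Symmetrize the magnitudes so they form an undirected affinity matrix.
    magnitude = np.abs(np.asarray(sensitivity, dtype=float))
    affinity = 0.5 * (magnitude + magnitude.T)
    np.fill_diagonal(affinity, 0.0)  # ignore self-sensitivity

    # Normalized Laplacian: L = I - D^{-1/2} A D^{-1/2}.
    degree = affinity.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    laplacian = np.eye(len(degree)) - d_inv_sqrt[:, None] * affinity * d_inv_sqrt[None, :]

    # eigh returns eigenvalues in ascending order; column 1 is the Fiedler vector.
    _, eigvecs = np.linalg.eigh(laplacian)
    fiedler = eigvecs[:, 1]

    labels = (fiedler > 0).astype(int)
    # Eigenvector sign is arbitrary, so fix the convention that bus 0 gets label 0.
    if labels[0] == 1:
        labels = 1 - labels
    return labels


# Toy 6-bus example (made-up values): buses 0-2 and 3-5 are strongly coupled
# within each group and weakly coupled across groups.
strong, weak = 0.9, 0.05
toy_sensitivity = np.full((6, 6), weak)
toy_sensitivity[:3, :3] = strong
toy_sensitivity[3:, 3:] = strong
np.fill_diagonal(toy_sensitivity, 1.0)

labels = spectral_bipartition(toy_sensitivity)
print(labels.tolist())  # [0, 0, 0, 1, 1, 1]
```

In this toy case the two strongly coupled groups of buses end up in separate sub-networks, each of which would then be assigned to one agent.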
