Distributed Memory based Self-Supervised Differentiable Neural Computer

Jul 21, 2020

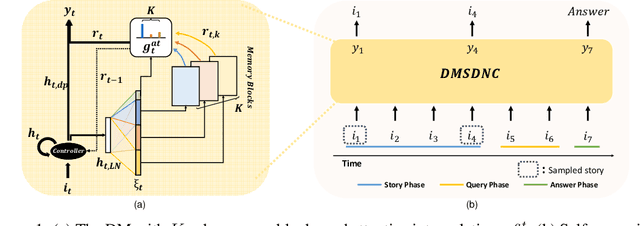

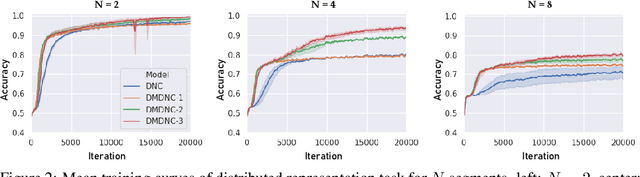

A differentiable neural computer (DNC) is a memory-augmented neural network devised to solve a wide range of algorithmic and question answering tasks, and it has shown promising performance in a variety of domains. However, its operations rely on a single memory, which is not enough to store and retrieve the diverse informative representations present in many tasks. Furthermore, DNC does not explicitly treat memorization itself as a target objective, which inevitably makes the model learn very slowly. To address these issues, we propose a novel distributed memory-based self-supervised DNC architecture for enhanced memory-augmented neural network performance. We introduce (i) a multiple distributed memory block mechanism that stores information independently in each memory block and uses the stored information cooperatively to obtain diverse representations, and (ii) a self-supervised memory loss term that measures how well a given input is written to the memory. Our experiments on algorithmic and question answering tasks show that the proposed model outperforms all other variations of DNC by a large margin, and also matches the performance of other state-of-the-art memory-based network models.
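The two ideas in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the abstract does not specify the write rule, the read rule, or the form of the loss, so the additive write, the cosine-similarity read, the softmax gate that combines blocks, and the mean-squared-error loss below are all assumptions chosen for illustration. The function names (`write_blocks`, `read_blocks`, `memory_loss`) are hypothetical as well.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def content_weights(memory, key):
    """Attention over the rows of one memory block by cosine similarity (assumed read rule)."""
    mem_norm = np.linalg.norm(memory, axis=1) + 1e-8
    key_norm = np.linalg.norm(key) + 1e-8
    similarity = memory @ key / (mem_norm * key_norm)  # shape (N,)
    return softmax(similarity)


def write_blocks(memories, write_weights, vector):
    """Write the same vector into every block independently (assumed additive write).

    memories: (K, N, W), write_weights: (K, N), vector: (W,)
    """
    return memories + write_weights[:, :, None] * vector[None, None, :]


def read_blocks(memories, key, gate_logits):
    """Read from each block, then combine the K reads with a softmax gate (assumed)."""
    reads = np.stack([content_weights(m, key) @ m for m in memories])  # (K, W)
    gate = softmax(gate_logits)  # (K,), sums to 1
    return gate @ reads  # (W,)


def memory_loss(written, read_back):
    """Illustrative self-supervised loss: how far the read-back is from what was written."""
    return float(np.mean((written - read_back) ** 2))


if __name__ == "__main__":
    K, N, W = 3, 4, 5  # blocks, slots per block, word size (arbitrary)
    rng = np.random.default_rng(0)
    x = rng.normal(size=W)
    memories = np.zeros((K, N, W))
    # Each block writes to a different slot, so the blocks are updated independently.
    write_weights = np.eye(N)[:K]
    memories = write_blocks(memories, write_weights, x)
    r = read_blocks(memories, key=x, gate_logits=np.zeros(K))
    print("memory loss:", memory_loss(x, r))
```

In a trained model the write weights, read keys, and gate logits would come from a controller network, and a loss of this kind would be added to the task loss so that memorization becomes an explicit objective; here they are fixed by hand only to keep the sketch self-contained.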
