Distilling Knowledge from Ensembles of Acoustic Models for Joint CTC-Attention End-to-End Speech Recognition


May 19, 2020

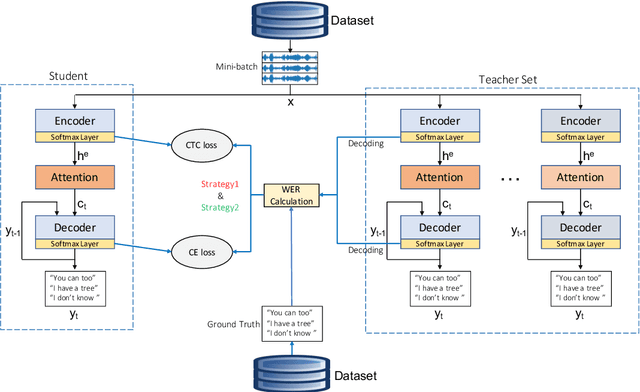

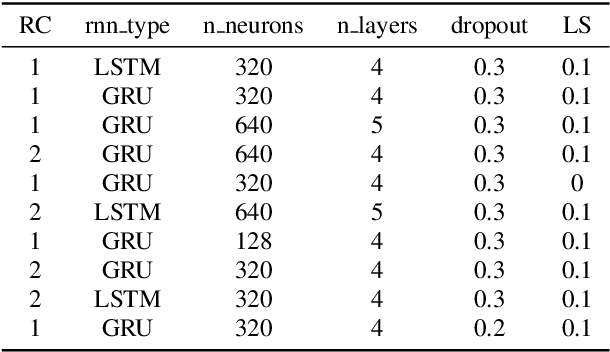

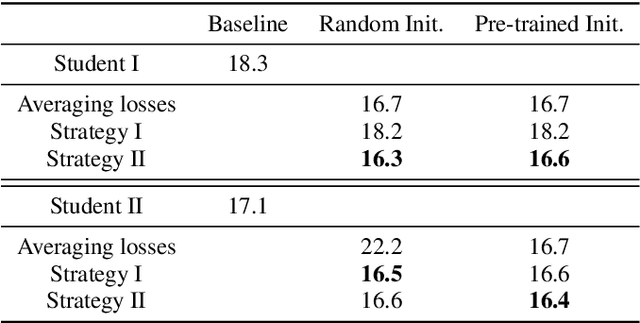

Knowledge distillation has been widely used to compress existing deep learning models while preserving performance across a wide range of applications. In the specific context of Automatic Speech Recognition (ASR), distillation from ensembles of acoustic models has recently shown promising results in improving recognition performance. In this paper, we propose an extension of multi-teacher distillation methods to joint CTC-attention end-to-end ASR systems. We also introduce two novel distillation strategies. The core intuition behind both is to integrate the error rate metric into the teacher selection rather than focusing solely on the observed losses. This way, we directly distill and optimize the student toward the metric that matters for speech recognition. We evaluated these strategies under a selection of training procedures on the TIMIT phoneme recognition task and observed promising error rates compared to a common baseline. Indeed, the best obtained phoneme error rate of 16.4% represents a state-of-the-art score for end-to-end ASR systems.
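To make the intuition of error-rate-aware teacher selection concrete, the following is a minimal sketch and not the paper's actual formulation, which the abstract does not spell out. It assumes each teacher provides a posterior distribution over output tokens for a single step, together with an error rate measured on the current utterance. The function names (`error_rate_weights`, `best_teacher_weights`, `distillation_loss`) and the `temperature` parameter are hypothetical, introduced only for illustration.

```python
import math


def error_rate_weights(error_rates, temperature=1.0):
    """Assumed weighting scheme: softmax over negated error rates,
    so that a teacher with a lower error rate gets a larger weight."""
    scores = [-e / temperature for e in error_rates]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


def best_teacher_weights(error_rates):
    """Assumed alternative: one-hot weights that keep only the teacher
    with the lowest error rate."""
    best = min(range(len(error_rates)), key=lambda i: error_rates[i])
    return [1.0 if i == best else 0.0 for i in range(len(error_rates))]


def cross_entropy(teacher_probs, student_probs, eps=1e-12):
    """Cross-entropy between a teacher and a student distribution."""
    return -sum(p * math.log(q + eps)
                for p, q in zip(teacher_probs, student_probs))


def distillation_loss(teacher_posteriors, student_probs, weights):
    """Weighted sum of per-teacher cross-entropies against the student."""
    return sum(w * cross_entropy(t, student_probs)
               for w, t in zip(weights, teacher_posteriors))


# Toy example: three teachers, a vocabulary of three tokens, one step.
error_rates = [0.20, 0.10, 0.30]  # hypothetical per-utterance error rates
teachers = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.3, 0.4, 0.3],
]
student = [0.5, 0.3, 0.2]

soft = error_rate_weights(error_rates)
hard = best_teacher_weights(error_rates)  # [0.0, 1.0, 0.0]

print("soft weights:", soft)
print("loss (soft): ", distillation_loss(teachers, student, soft))
print("loss (hard): ", distillation_loss(teachers, student, hard))
```

In a joint CTC-attention system, a term of this kind would presumably be computed for the relevant output branches and combined with the usual training loss. How the paper does this is not stated in the abstract, so that part is left out of the sketch.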
