Disentangling Autoencoders (DAE)

Feb 20, 2022

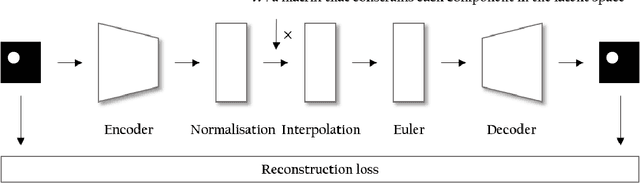

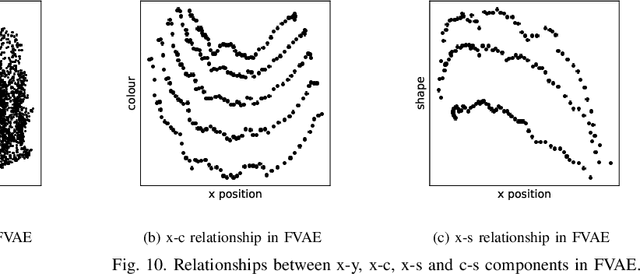

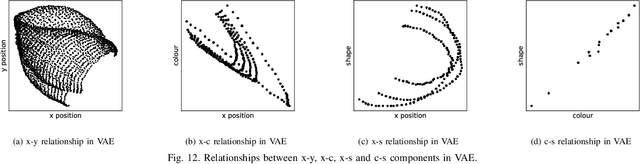

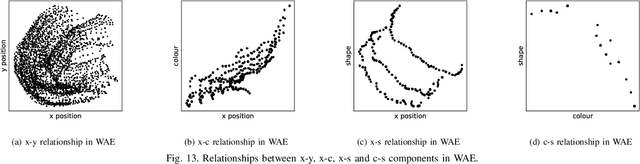

Noting the importance of factorizing, or disentangling, the latent space, we propose a novel framework for autoencoders based on the principles of symmetry transformations in group theory: a non-probabilistic disentangling autoencoder model. To the best of our knowledge, this is the first autoencoder-based model that aims to achieve disentanglement without regularizers. The proposed model is compared with seven state-of-the-art autoencoder-based generative models and evaluated on reconstruction loss and five metrics that quantify disentanglement. The experimental results show that the proposed model can achieve better disentanglement when the variances of the features differ. We believe this model opens a new direction for disentanglement learning based on autoencoders without regularizers.
