Discriminative Feature Learning through Feature Distance Loss

Paper and Code

May 25, 2022

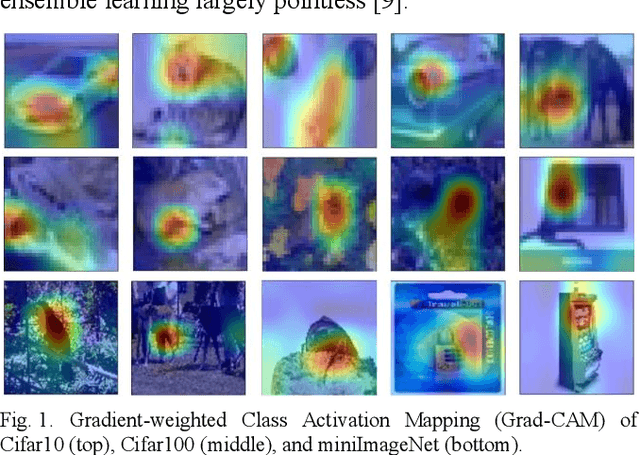

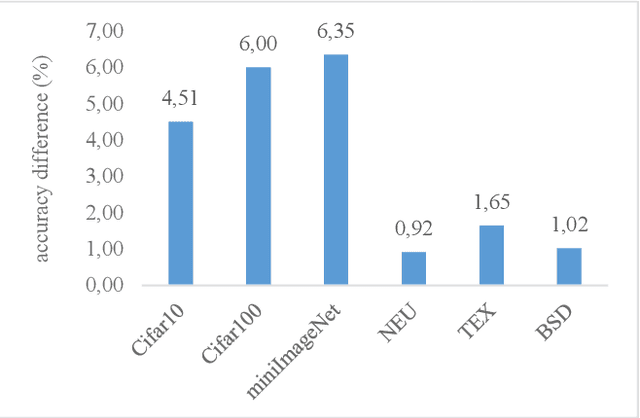

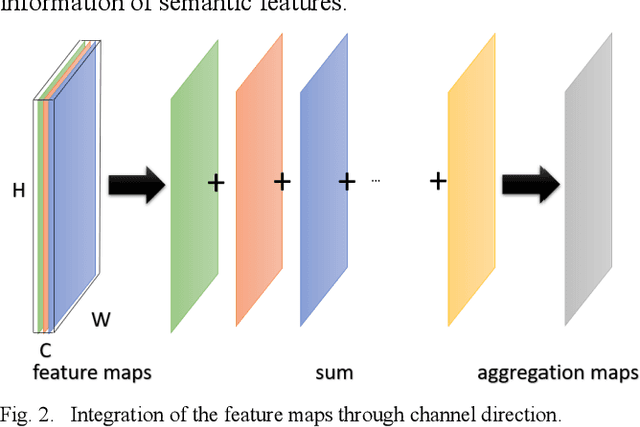

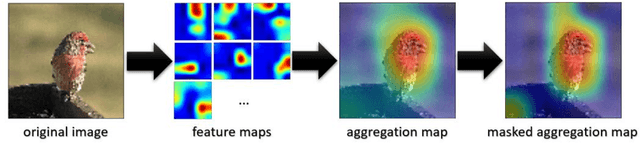

Convolutional neural networks have shown a remarkable ability to learn discriminative semantic features in image recognition tasks. For classification, however, they often concentrate on specific regions of an image. This work proposes a novel method that combines variant-rich base models so that they concentrate on different important image regions for classification. A feature distance loss is applied while training an ensemble of base models to force them to learn discriminative feature concepts. Experiments on benchmark convolutional neural networks (VGG16, ResNet, AlexNet), popular datasets (Cifar10, Cifar100, miniImageNet, NEU, BSD, TEX), and different training set sizes (3, 5, 10, 20, 50, 100 samples per class) show our method's effectiveness and generalization ability. Our method outperforms ensemble versions of the base models trained without the feature distance loss, and the Class Activation Maps explicitly demonstrate its ability to learn different discriminative feature concepts.
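The abstract does not state the exact form of the feature distance loss, so the following is only a minimal sketch of the general idea under stated assumptions: each base model yields a flattened feature vector for the same input, similarity between models is measured with cosine similarity, and the resulting penalty is added to the mean classification loss with a weighting factor. The function names, the choice of cosine similarity, and the `weight` parameter are all hypothetical and may differ from what the authors use.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero feature vector as dissimilar
    return dot / (norm_a * norm_b)


def feature_similarity_penalty(features):
    """Mean pairwise cosine similarity across the base models' features.

    Assumption: minimizing this value pushes the base models toward
    different feature directions. The paper's actual distance measure
    is not given in the abstract.
    """
    pairs = list(combinations(features, 2))
    if not pairs:
        return 0.0  # a single model has nothing to be compared against
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


def ensemble_loss(classification_losses, features, weight=0.1):
    """Mean classification loss plus the weighted similarity penalty.

    `weight` is a hypothetical trade-off hyperparameter.
    """
    mean_cls = sum(classification_losses) / len(classification_losses)
    return mean_cls + weight * feature_similarity_penalty(features)


# Two base models with identical features are penalized more than
# two models whose features point in orthogonal directions.
same = ensemble_loss([1.0, 3.0], [[1.0, 0.0], [1.0, 0.0]], weight=0.5)
different = ensemble_loss([1.0, 3.0], [[1.0, 0.0], [0.0, 1.0]], weight=0.5)
```

In this sketch, models with overlapping features incur a larger total loss than models with orthogonal features, which is the qualitative behavior the abstract attributes to the feature distance loss. In practice the same computation would be written with differentiable tensor operations so that gradients reach every base model.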
