Direct l_(2,p)-Norm Learning for Feature Selection

Apr 02, 2015

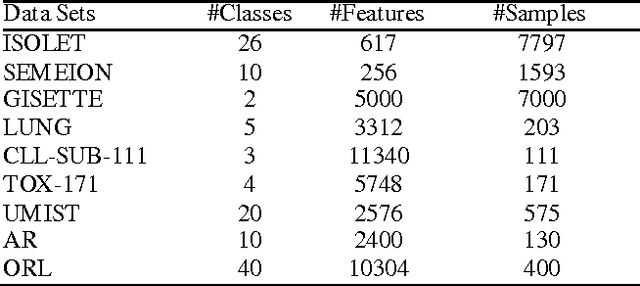

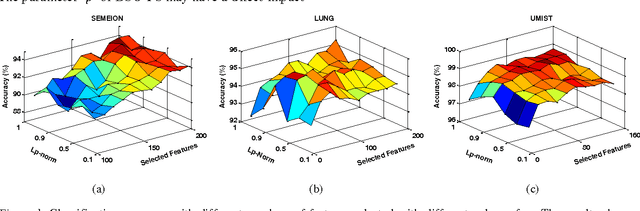

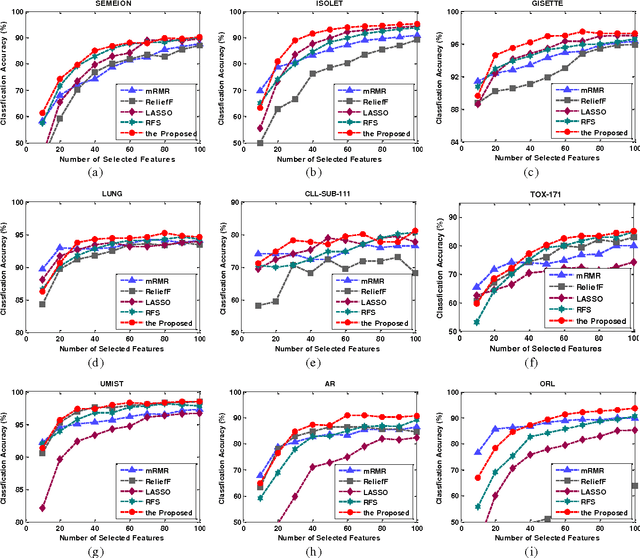

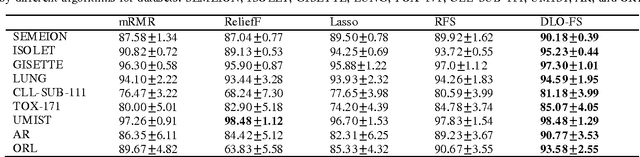

In this paper, we propose a novel sparse-learning-based feature selection method that directly optimizes the sparsity of a large-margin linear classification model, measured by the l_(2,p)-norm (0 < p < 1), subject to data-fitting constraints, rather than using the sparsity as a regularization term. The direct sparsity optimization problem is non-smooth and non-convex when 0 < p < 1; to solve it, we provide an efficient iterative algorithm with proven convergence that converts the problem into a convex and smooth optimization problem at every iteration step. The proposed algorithm has been evaluated on publicly available datasets, and extensive comparison experiments demonstrate that it achieves feature selection performance competitive with state-of-the-art algorithms.
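For readers unfamiliar with the l_(2,p)-norm, the following minimal Python sketch shows how it is commonly computed for a weight matrix and how row norms can be used to rank features. It assumes the usual definition, (sum_i ||w_i||_2^p)^(1/p) over the rows w_i of W, with one row per feature; the abstract does not spell out the definition, and the function names `l2p_norm` and `rank_features` are hypothetical. It illustrates the sparsity measure only, not the paper's iterative optimization algorithm.

```python
import numpy as np


def l2p_norm(W, p):
    """Assumed l_(2,p) (quasi-)norm: (sum_i ||w_i||_2^p)^(1/p) over rows w_i."""
    if p <= 0:
        raise ValueError("p must be positive")
    # Euclidean norm of each row (one row per feature, by assumption).
    row_norms = np.linalg.norm(W, axis=1)
    return float(np.sum(row_norms ** p) ** (1.0 / p))


def rank_features(W):
    """Return feature indices sorted by decreasing row l_2 norm."""
    row_norms = np.linalg.norm(W, axis=1)
    # Negate so that argsort yields the largest norms first.
    return np.argsort(-row_norms, kind="stable")


# Toy weight matrix: 3 features, 2 classes. The second feature has an
# all-zero row, so a row-sparse model would discard it.
W = np.array([[3.0, 4.0],
              [0.0, 0.0],
              [0.6, 0.8]])

print(l2p_norm(W, 0.5))
print(rank_features(W))
```

Rows with zero norm contribute nothing to the sum, so a small l_(2,p)-norm favors weight matrices in which whole rows, and therefore whole features, vanish.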
