Diffusion Restoration Adapter for Real-World Image Restoration

Paper and Code

Feb 28, 2025

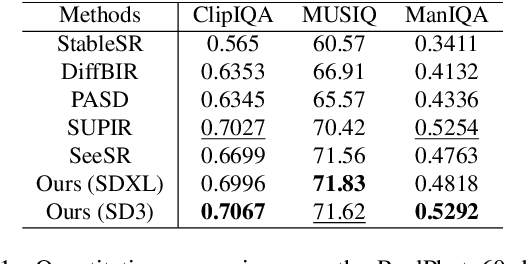

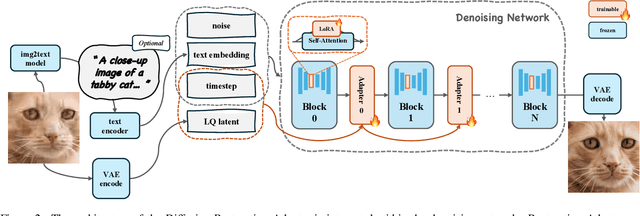

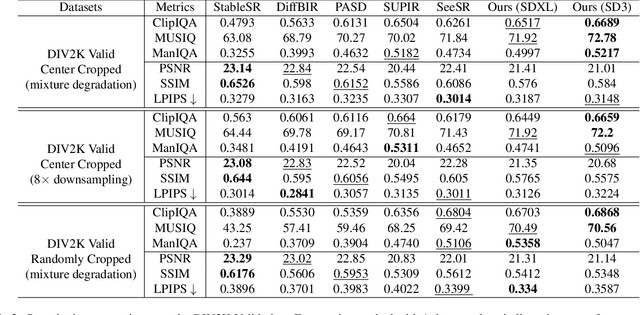

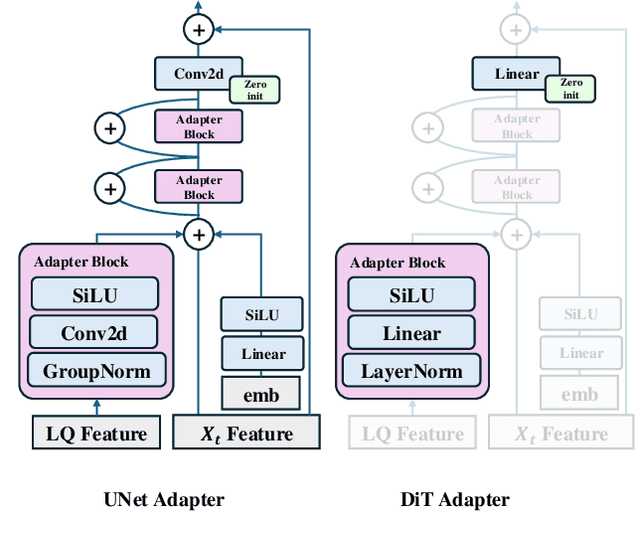

Diffusion models have demonstrated powerful image generation capabilities and can fit highly complex image distributions, which makes them strong priors for image restoration. Existing methods often use techniques such as ControlNet to sample high-quality images from these priors, conditioned on low-quality inputs. However, ControlNet typically copies a large part of the original network, so the number of added parameters grows substantially as the prior scales up. In this paper, we propose a relatively lightweight Adapter that leverages the generative capabilities of pretrained priors to achieve photo-realistic image restoration. The Adapter can be applied to both denoising UNet and DiT backbones and performs well with each.
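To make the parameter-scaling argument concrete, the following is a rough back-of-the-envelope sketch, not the paper's architecture. It compares the cost of duplicating a toy convolutional encoder (the ControlNet-style approach described above) with the cost of a small bottleneck module per stage. The channel widths, the number of convolutions per stage, the bottleneck design, and the `rank` value are all hypothetical choices made for illustration only.

```python
# Hypothetical parameter-count comparison; none of these sizes come from the paper.

def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out

def encoder_params(widths, convs_per_stage=2):
    """Parameters of a toy encoder: a few 3x3 convs per stage.

    A ControlNet-style branch that copies this encoder adds roughly
    this many trainable parameters.
    """
    total = 0
    c_in = widths[0]
    for c_out in widths:
        for _ in range(convs_per_stage):
            total += conv_params(c_in, c_out, 3)
            c_in = c_out
    return total

def bottleneck_adapter_params(widths, rank=64):
    """Parameters of an assumed adapter: per stage, a 1x1 down-projection
    to `rank` channels followed by a 1x1 up-projection back."""
    return sum(conv_params(c, rank, 1) + conv_params(rank, c, 1) for c in widths)

WIDTHS = [320, 640, 1280]  # assumed stage widths, for illustration
copied = encoder_params(WIDTHS)
adapter = bottleneck_adapter_params(WIDTHS)

print(f"copied encoder:      {copied:,} parameters")
print(f"bottleneck adapters: {adapter:,} parameters")
print(f"ratio:               {copied / adapter:.1f}x")
```

In this toy setting the copied branch grows with the square of the channel width, while the bottleneck modules grow linearly in width for a fixed `rank`, which is the general reason a copy-based branch becomes expensive as the prior scales up.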
