Differentiable Causal Computations via Delayed Trace

Paper and Code

Mar 04, 2019

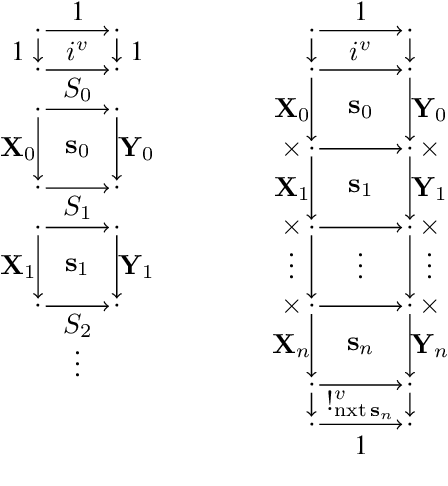

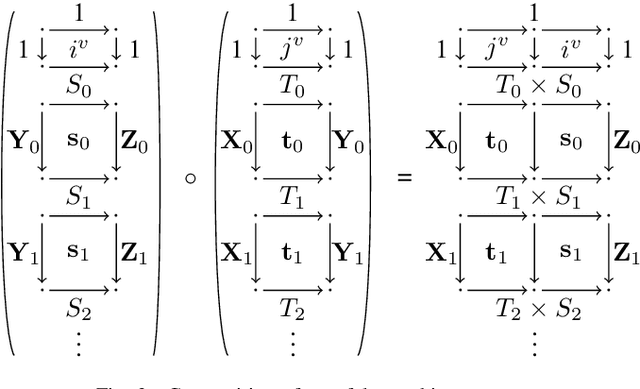

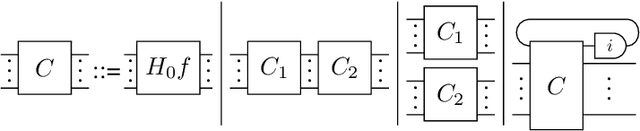

We investigate causal computations taking sequences of inputs to sequences of outputs where the $n$th output depends on the first $n$ inputs only. We model these in category theory via a construction taking a Cartesian category $C$ to another category $St(C)$ with a novel trace-like operation called "delayed trace", which misses yanking and dinaturality axioms of the usual trace. The delayed trace operation provides a feedback mechanism in $St(C)$ with an implicit guardedness guarantee. When $C$ is equipped with a Cartesian differential operator, we construct a differential operator for $St(C)$ using an abstract version of backpropagation through time, a technique from machine learning based on unrolling of functions. This obtains a swath of properties for backpropagation through time, including a chain rule and Schwartz theorem. Our differential operator is also able to compute the derivative of a stateful network without requiring the network to be unrolled.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge