Development of A Stochastic Traffic Environment with Generative Time-Series Models for Improving Generalization Capabilities of Autonomous Driving Agents

Paper and Code

Jun 10, 2020

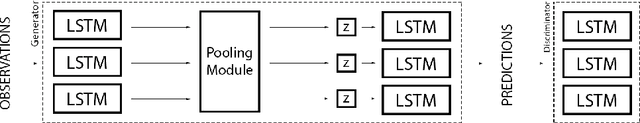

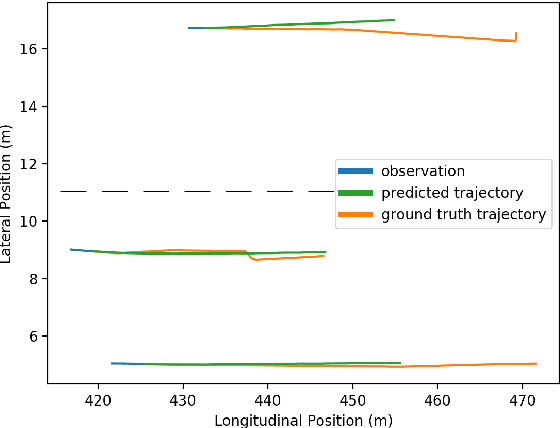

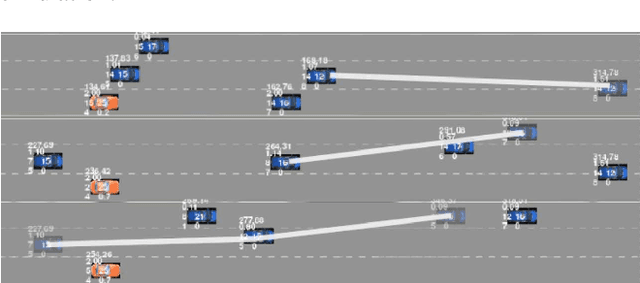

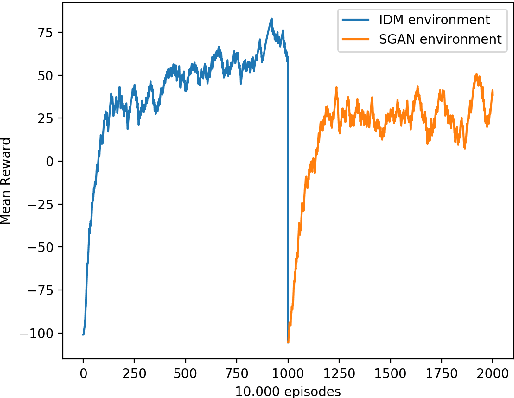

Automated lane changing is a critical feature for advanced autonomous driving systems. In recent years, reinforcement learning (RL) algorithms trained on traffic simulators yielded successful results in computing lane changing policies that strike a balance between safety, agility and compensating for traffic uncertainty. However, many RL algorithms exhibit simulator bias and policies trained on simple simulators do not generalize well to realistic traffic scenarios. In this work, we develop a data driven traffic simulator by training a generative adverserial network (GAN) on real life trajectory data. The simulator generates randomized trajectories that resembles real life traffic interactions between vehicles, which enables training the RL agent on much richer and realistic scenarios. We demonstrate through simulations that RL agents that are trained on GAN-based traffic simulator has stronger generalization capabilities compared to RL agents trained on simple rule-driven simulators.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge