Developing a Purely Visual Based Obstacle Detection using Inverse Perspective Mapping

Sep 04, 2018

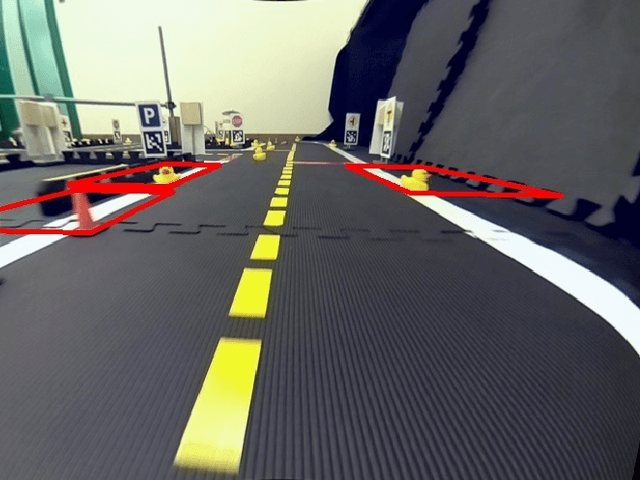

Our solution is implemented within, and for, the Duckietown framework. The goal of Duckietown is to provide a relatively simple platform to explore, tackle, and solve many problems linked to autonomous driving. Duckietown is simple at its core but infinitely expandable. From controlling a single Duckiebot to managing a complete fleet, every scenario is possible and can be put into practice. So far, none of the existing modules has been capable of reliably detecting obstacles and reacting to them in real time. We faced the general problem of detecting obstacles in images from a monocular RGB camera mounted at the front of our Duckiebot and reacting to them properly, without crashing or erroneously stopping the Duckiebot. Both the detection and the reaction have to be implemented and run on a Raspberry Pi in real time. Due to these strong hardware limitations, we decided not to use any learning algorithms for the obstacle detection part. As it later transpired, working "hard-coded" software requires thorough analysis and understanding of the given problem. In layman's terms, we simply seek to make Duckietown a safer place.
