Detection-Rate-Emphasized Multi-objective Evolutionary Feature Selection for Network Intrusion Detection

Paper and Code

Jun 13, 2024

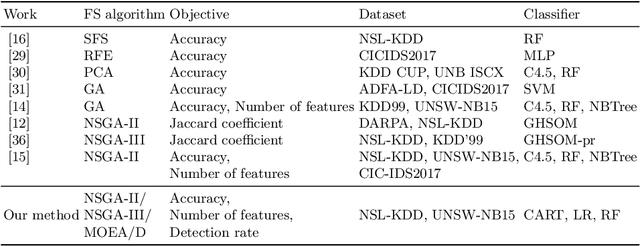

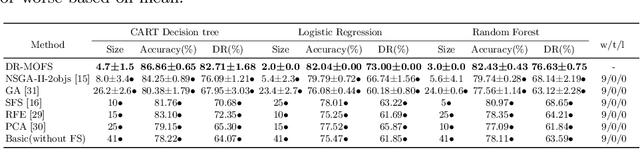

Network intrusion detection is one of the most important problems in cyber security, and various machine learning techniques have been applied to build intrusion detection systems. However, the number of features describing network connections is often large, and some of them are redundant or noisy, so feature selection is necessary to improve both efficiency and accuracy. Recently, some researchers have used multi-objective evolutionary algorithms (MOEAs) to select features. These approaches usually consider only the number of features and the classification accuracy as objectives, which results in unsatisfactory performance on a critical metric, the detection rate. A low detection rate means that many real attacks are missed, which can cause heavy losses to the network system. In this paper, we propose DR-MOFS, which models feature selection in network intrusion detection as a three-objective optimization problem in which the number of features, accuracy, and detection rate are optimized simultaneously, and uses MOEAs to solve it. Experiments on two popular network intrusion detection datasets, NSL-KDD and UNSW-NB15, show that in most cases the proposed method outperforms previous methods, i.e., it yields fewer features together with higher accuracy and detection rate.
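The three-objective formulation can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `evaluate`, `dominates`, and `pareto_front` are hypothetical, the toy labels are made up for illustration, and the detection rate is assumed to be the fraction of true attacks that are flagged (TP / (TP + FN)), which is the usual definition in intrusion detection. All three objectives are expressed as values to minimize.

```python
from typing import List, Sequence, Tuple

# (number of selected features, 1 - accuracy, 1 - detection rate), all minimized.
Objectives = Tuple[int, float, float]


def evaluate(mask: Sequence[int], y_true: Sequence[int], y_pred: Sequence[int]) -> Objectives:
    """Score one feature subset (hypothetical helper).

    mask   : 0/1 flag per feature, 1 means the feature is selected.
    y_true : ground-truth labels, 1 = attack, 0 = normal.
    y_pred : predictions of a classifier trained on the selected features.
    """
    n_selected = sum(mask)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    attacks = sum(1 for t in y_true if t == 1)
    detected = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    # Assumed definition: detection rate = TP / (TP + FN).
    detection_rate = detected / attacks if attacks else 0.0
    return (n_selected, 1.0 - accuracy, 1.0 - detection_rate)


def dominates(a: Objectives, b: Objectives) -> bool:
    """Pareto dominance: a is no worse on every objective and better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Objectives]) -> List[Objectives]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points if q != p)]


# Toy data (made up): 4 attacks, 6 normal connections.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred_a = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]  # misses two attacks
pred_b = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]  # catches all attacks, two false alarms

sol_a = evaluate([1, 1, 1, 0], y_true, pred_a)
sol_b = evaluate([1, 1, 0, 1], y_true, pred_b)
front = pareto_front([sol_a, sol_b])
```

In this toy case both subsets use three features and reach the same accuracy, so a two-objective formulation cannot tell them apart. Adding the detection rate as a third objective makes `sol_b` dominate `sol_a`, which is the kind of distinction the abstract argues for. An MOEA would apply such an evaluation to every candidate mask in its population.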
