Detecting Misclassification Errors in Neural Networks with a Gaussian Process Model


Oct 05, 2020

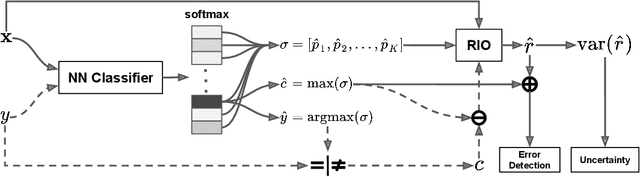

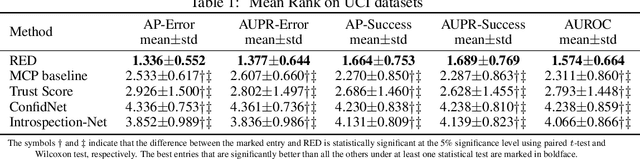

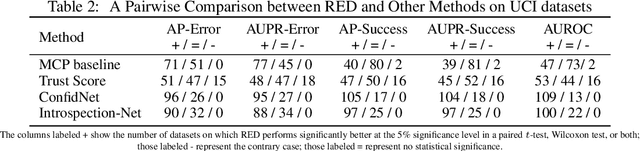

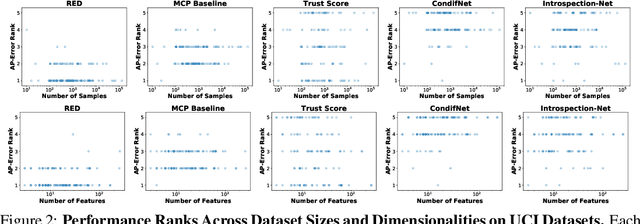

As neural network classifiers are deployed in real-world applications, it is crucial that their predictions are not just accurate, but trustworthy as well. One practical solution is to assign a confidence score to each prediction, then filter out low-confidence predictions. However, existing confidence metrics are not yet sufficiently reliable for this role. This paper presents a new framework that produces more reliable confidence scores for detecting misclassification errors. This framework, RED, calibrates the classifier's inherent confidence indicators and estimates the uncertainty of the calibrated confidence scores using Gaussian Processes. Empirical comparisons with other confidence estimation methods on 125 UCI datasets demonstrate that this approach is effective. An experiment on a vision task with a large deep learning architecture further confirms that the method can scale up, and a case study involving out-of-distribution and adversarial samples shows the potential of the proposed method to improve the robustness of neural network classifiers more broadly in the future.
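The idea of calibrating a classifier's own confidence with a Gaussian Process and then filtering low-confidence predictions can be illustrated with a small NumPy sketch. It is not the paper's implementation. The abstract does not specify the GP target, kernel, or inputs, so the following are all assumptions made for illustration: the GP regresses the residual between a 0/1 correctness indicator and the raw confidence, it uses a plain RBF kernel over the input features concatenated with the raw confidence, and the data is synthetic and deliberately overconfident. The names `rbf_kernel`, `gp_fit_predict`, and the threshold value are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0):
    """RBF kernel with unit prior variance between the rows of a and b."""
    sq = (np.sum(a**2, axis=1)[:, None]
          + np.sum(b**2, axis=1)[None, :]
          - 2.0 * a @ b.T)
    return np.exp(-0.5 * np.maximum(sq, 0.0) / length_scale**2)


def gp_fit_predict(x_train, y_train, x_test, noise=0.1, length_scale=1.0):
    """Zero-mean GP regression: posterior mean and latent variance at x_test."""
    k_tt = rbf_kernel(x_train, x_train, length_scale) + noise * np.eye(len(x_train))
    k_st = rbf_kernel(x_test, x_train, length_scale)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_st @ alpha
    v = np.linalg.solve(chol, k_st.T)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance of the RBF kernel is 1
    return mean, np.maximum(var, 0.0)


# Synthetic stand-in data (assumption): 2-D inputs and the raw confidence
# (e.g. maximum softmax probability) of some already-trained classifier.
rng = np.random.default_rng(0)
n_val, n_test = 200, 50
x_val = rng.normal(size=(n_val, 2))
x_test = rng.normal(size=(n_test, 2))
conf_val = rng.uniform(0.5, 1.0, size=n_val)
conf_test = rng.uniform(0.5, 1.0, size=n_test)

# Simulate an overconfident classifier: true accuracy is 0.2 below its confidence.
correct_val = (rng.uniform(size=n_val) < conf_val - 0.2).astype(float)

# GP inputs: features plus raw confidence. GP target: correctness residual.
gp_in_val = np.column_stack([x_val, conf_val])
gp_in_test = np.column_stack([x_test, conf_test])
residual_val = correct_val - conf_val

res_mean, res_var = gp_fit_predict(gp_in_val, residual_val, gp_in_test)

# Calibrated confidence = raw confidence + predicted residual, clipped to [0, 1].
calibrated = np.clip(conf_test + res_mean, 0.0, 1.0)

# Filter: keep only predictions whose calibrated confidence clears a threshold.
threshold = 0.6  # hypothetical value
keep = calibrated >= threshold
print(f"kept {keep.sum()} of {n_test} predictions")
```

Here `res_var` plays the role of an uncertainty estimate on the calibrated score: test points far from the validation data get a variance close to the prior value of 1, which a downstream filter could also take into account.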
