Design Technology Co-Optimization for Neuromorphic Computing

Oct 15, 2021

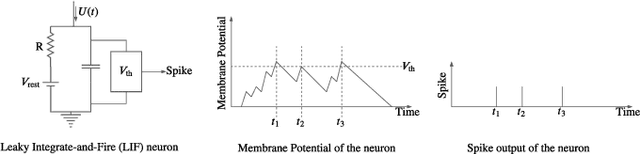

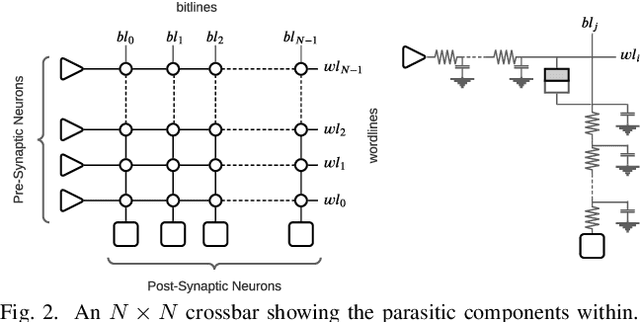

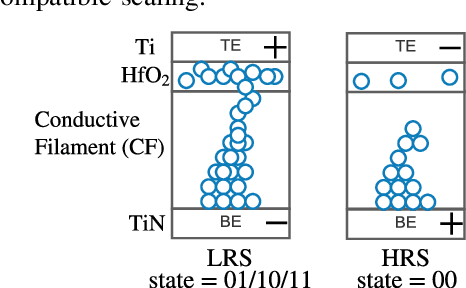

We present a design-technology tradeoff analysis for implementing machine-learning inference on the processing cores of many-core neuromorphic hardware based on Non-Volatile Memory (NVM). Through detailed circuit-level simulations at scaled process technology nodes, we show that design scaling degrades the read endurance of NVMs, which directly affects their inference lifetime. At a finer granularity, the inference lifetime of a core depends on 1) the resistance states of the synaptic weights programmed on the core (design) and 2) the voltage variation inside the core introduced by parasitic components on current paths (technology). We show that these design and technology characteristics can be incorporated into a design flow to significantly improve inference lifetime.
