Descending through a Crowded Valley - Benchmarking Deep Learning Optimizers

Paper and Code

Jul 07, 2020

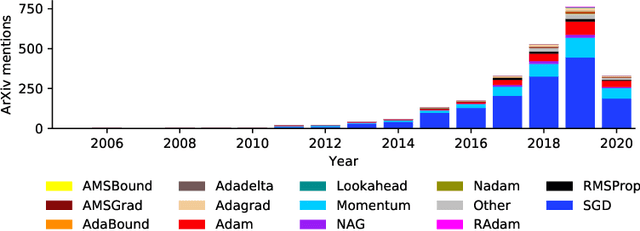

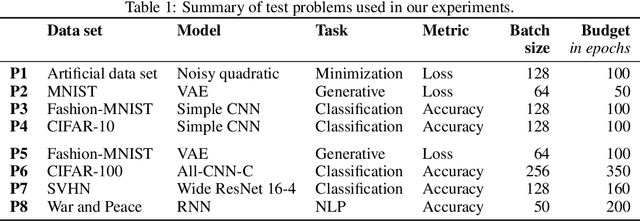

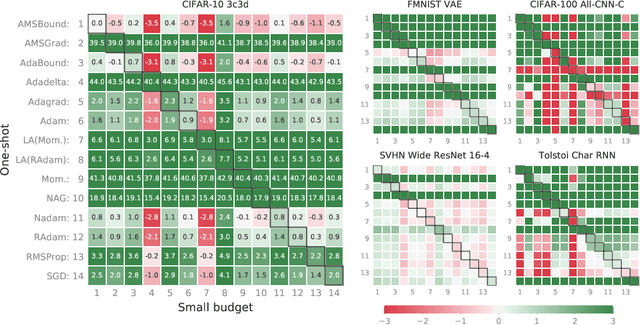

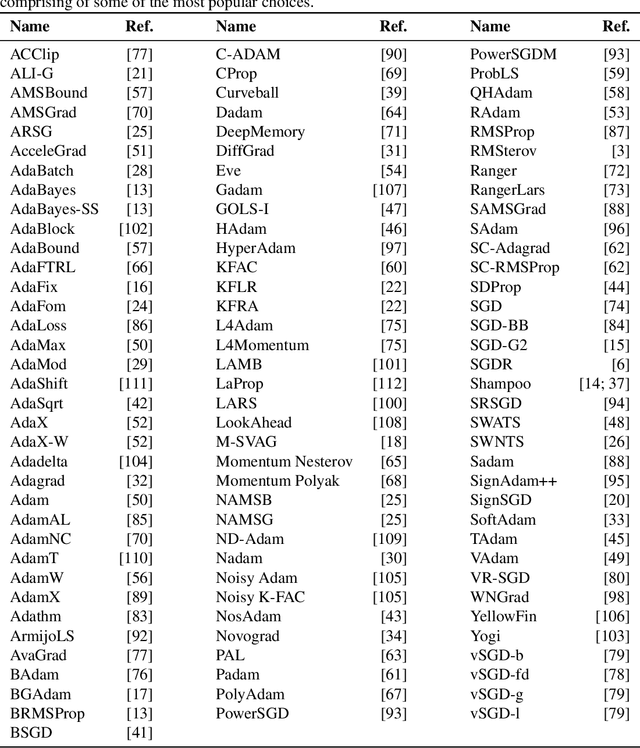

Choosing the optimizer is among the most crucial decisions of deep learning engineers, and it is not an easy one. The growing literature now lists literally hundreds of optimization methods. In the absence of clear theoretical guidance and conclusive empirical evidence, the decision is often made based on personal anecdotes. In this work, we aim to replace these anecdotes, if not with evidence, then at least with heuristics. To do so, we perform an extensive, standardized benchmark of more than a dozen particularly popular deep learning optimizers while giving a concise overview of the wide range of possible choices. Analyzing almost 35 000 individual runs, we contribute the following three points: (i) Optimizer performance varies greatly across tasks. (ii) We observe that evaluating multiple optimizers with default parameters works approximately as well as tuning the hyperparameters of a single, fixed optimizer. (iii) While we cannot identify an individual optimization method clearly dominating across all tested tasks, we identify a significantly reduced subset of specific algorithms and parameter choices that generally provided competitive results in our experiments. This subset includes popular favorites and some less well-known contenders. We have open-sourced all our experimental results, making them available as well-tuned baselines for evaluating novel optimization methods and thereby reducing the necessary computational effort.
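To make the comparison of "several optimizers at default settings" against "one tuned optimizer" concrete, here is a minimal sketch in plain Python. It is not the paper's benchmark: the toy quadratic objective, the step count, the default hyperparameter values, the random-search range, and all function names (`run_sgd`, `best_of_defaults`, `tuned_sgd`, and so on) are assumptions chosen for illustration, and the outcome on this toy problem says nothing about the paper's findings.

```python
import math
import random

# Toy setup (all values are illustrative assumptions, not from the paper).
SCALES = (1.0, 25.0)   # curvatures of an ill-conditioned quadratic
START = (1.0, 1.0)     # common starting point for every run
STEPS = 200            # fixed step budget per run


def loss(w):
    """f(w) = 0.5 * sum_i SCALES[i] * w[i]^2."""
    return 0.5 * sum(s * x * x for s, x in zip(SCALES, w))


def grad(w):
    return [s * x for s, x in zip(SCALES, w)]


def final_loss(w):
    """Report diverged runs (inf or nan) as +inf so they never win."""
    value = loss(w)
    return value if math.isfinite(value) else math.inf


def run_sgd(lr=0.01):
    w = list(START)
    for _ in range(STEPS):
        w = [x - lr * g for x, g in zip(w, grad(w))]
    return final_loss(w)


def run_momentum(lr=0.01, beta=0.9):
    # Heavy-ball momentum: v accumulates gradients, w follows v.
    w, v = list(START), [0.0, 0.0]
    for _ in range(STEPS):
        v = [beta * vi + g for vi, g in zip(v, grad(w))]
        w = [x - lr * vi for x, vi in zip(w, v)]
    return final_loss(w)


def run_adam(lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    w, m, v = list(START), [0.0, 0.0], [0.0, 0.0]
    for t in range(1, STEPS + 1):
        g = grad(w)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]   # bias correction
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        w = [x - lr * mh / (math.sqrt(vh) + eps)
             for x, mh, vh in zip(w, m_hat, v_hat)]
    return final_loss(w)


def best_of_defaults():
    """Strategy A: run several optimizers once each, untuned."""
    results = {"sgd": run_sgd(), "momentum": run_momentum(), "adam": run_adam()}
    name = min(results, key=results.get)
    return name, results[name]


def tuned_sgd(budget=20, seed=0):
    """Strategy B: random search over the learning rate of one optimizer."""
    rng = random.Random(seed)
    candidates = [10 ** rng.uniform(-4, -1) for _ in range(budget)]
    best_lr = min(candidates, key=run_sgd)
    return best_lr, run_sgd(best_lr)


print("best default optimizer:", best_of_defaults())
print("tuned SGD (lr, loss):  ", tuned_sgd())
```

Note the difference in cost: strategy A uses three runs here, strategy B uses `budget` runs. A fair comparison in practice would also average over random seeds and report held-out performance rather than a single final training loss.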
