Deriving Neural Network Design and Learning from the Probabilistic Framework of Chain Graphs


Jun 30, 2020

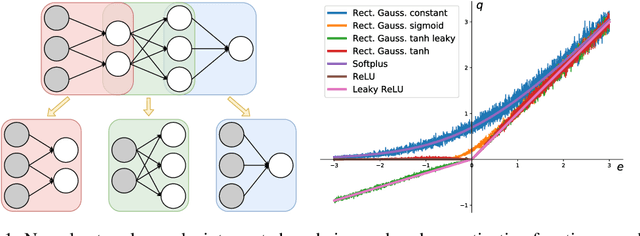

The last decade has witnessed a boom in neural network (NN) research and applications, achieving state-of-the-art results in various domains. Yet most advances in architecture and learning have been discovered empirically by trial and error, which makes a more systematic exploration difficult. Theoretical analyses of these advances are limited, and a unifying framework is absent. In this paper, we tackle this issue by identifying NNs as chain graphs (CGs), with chain components modeled as bipartite pairwise conditional random fields and feed-forward as a form of approximate probabilistic inference. We show that from this CG interpretation we can systematically formulate an extensive range of the empirically discovered results, including various network designs (e.g., CNN, RNN, ResNet), activation functions (e.g., sigmoid, tanh, softmax, (leaky) ReLU) and regularizations (e.g., weight decay, dropout, BatchNorm). Furthermore, guided by this interpretation, we are able to derive "the preferred form" of the residual block, recover the simple yet powerful IndRNN model, and discover a new stochastic inference procedure: the partially collapsed feed-forward inference. We believe that our work can provide a well-founded formulation for analyzing the nature and design of NNs, and can serve as a unifying theoretical framework for deep learning research.
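
To make the idea of feed-forward as probabilistic inference more concrete, the sketch below looks at a single binary hidden unit. It is not taken from the paper's code: the potential `exp(h * (w . x + b))`, the function names, and the numbers are assumptions chosen for illustration. Under that assumed pairwise potential, the conditional mean of the unit given its inputs equals the sigmoid of the pre-activation, so a sigmoid layer can be read as passing expected values forward.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def pre_activation(x, w, b):
    # Weighted sum of inputs plus bias, as in an ordinary dense unit.
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def conditional_mean_by_enumeration(x, w, b):
    # Assumed model (illustrative): one binary unit h in {0, 1} with
    # unnormalized potential exp(h * (w . x + b)) given the inputs x.
    # E[h | x] is computed by summing over both states of h.
    z = pre_activation(x, w, b)
    weights = [math.exp(h * z) for h in (0, 1)]
    return weights[1] / sum(weights)

def feed_forward_unit(x, w, b):
    # Standard sigmoid unit: output the sigmoid of the pre-activation.
    return sigmoid(pre_activation(x, w, b))

# Hypothetical inputs and parameters, chosen only for the demonstration.
x = [0.5, -1.0, 2.0]
w = [0.3, 0.8, -0.1]
b = 0.2

exact = conditional_mean_by_enumeration(x, w, b)
forward = feed_forward_unit(x, w, b)
print(abs(exact - forward) < 1e-12)
```

For a single layer the two quantities coincide. The approximation only enters when expected values, rather than full distributions, are propagated through several layers.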
