Dense Voxel Fusion for 3D Object Detection


Mar 02, 2022

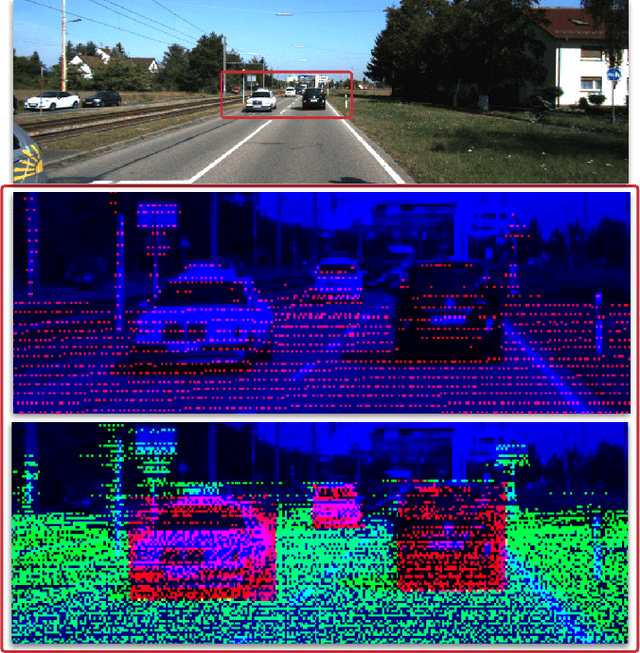

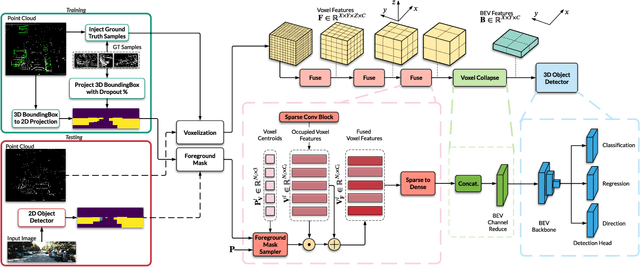

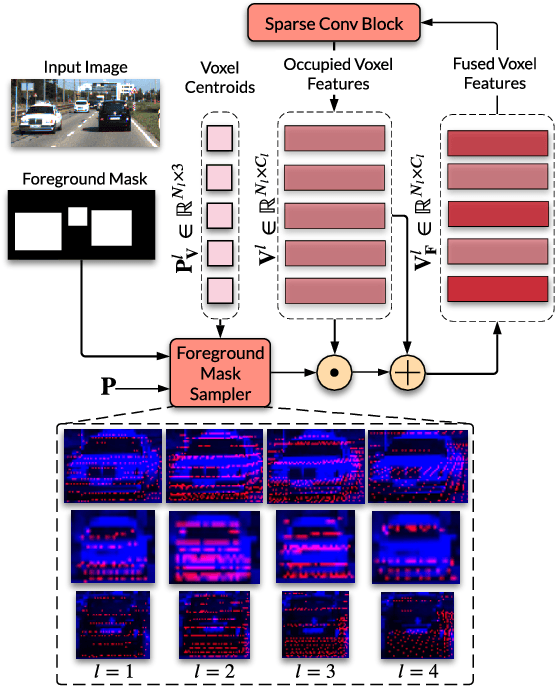

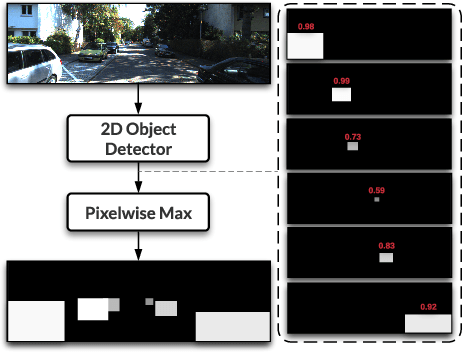

Camera and LiDAR sensor modalities provide complementary appearance and geometric information useful for detecting 3D objects in autonomous vehicle applications. However, current fusion models underperform state-of-the-art LiDAR-only methods on 3D object detection benchmarks. Our proposed solution, Dense Voxel Fusion (DVF), is a sequential fusion method that generates multi-scale, multi-modal dense voxel feature representations, improving expressiveness in regions of low point density. To enhance multi-modal learning, we train directly with ground truth 2D bounding box labels, avoiding noisy, detector-specific 2D predictions. Additionally, we use LiDAR ground truth sampling to simulate missed 2D detections and to accelerate training convergence. Both DVF and the multi-modal training approaches can be applied to any voxel-based LiDAR backbone without introducing additional learnable parameters. DVF outperforms existing sparse fusion detectors, ranking $1^{st}$ among all published fusion methods on KITTI's 3D car detection benchmark at the time of submission, and significantly improves the 3D vehicle detection performance of voxel-based methods on the Waymo Open Dataset. We also show that our proposed multi-modal training strategy generalizes better than training with erroneous 2D predictions.
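The abstract does not spell out how the ground truth 2D boxes enter the fusion step. As a minimal sketch of one plausible reading, the code below projects voxel centers into the image and marks a voxel as foreground when its projection falls inside any ground truth 2D box. The function names, the camera-frame input, and the binary weighting are all assumptions made for illustration, not the authors' implementation. Like the method described above, the sketch has no learnable parameters.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical type: (x_min, y_min, x_max, y_max) in pixels.
Box2D = Tuple[float, float, float, float]


def project_to_image(
    point: Sequence[float], proj: Sequence[Sequence[float]]
) -> Optional[Tuple[float, float]]:
    """Project a 3D point (assumed to be in the camera frame) to pixel
    coordinates using a 3x4 projection matrix. Returns None when the
    point lies behind the camera."""
    hom = (point[0], point[1], point[2], 1.0)
    u, v, w = (sum(row[i] * hom[i] for i in range(4)) for row in proj)
    if w <= 0:
        return None
    return (u / w, v / w)


def foreground_weights(
    voxel_centers: Sequence[Sequence[float]],
    boxes: Sequence[Box2D],
    proj: Sequence[Sequence[float]],
) -> List[float]:
    """Return 1.0 for each voxel center whose projection lands inside
    any 2D box, else 0.0 (assumed binary weighting)."""
    weights = []
    for center in voxel_centers:
        uv = project_to_image(center, proj)
        inside = uv is not None and any(
            x0 <= uv[0] <= x1 and y0 <= uv[1] <= y1
            for (x0, y0, x1, y1) in boxes
        )
        weights.append(1.0 if inside else 0.0)
    return weights


# Toy camera: focal length 100 px, principal point at (50, 50).
PROJ = [
    [100.0, 0.0, 50.0, 0.0],
    [0.0, 100.0, 50.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
CENTERS = [(0.0, 0.0, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -1.0)]
GT_BOXES = [(40.0, 40.0, 60.0, 60.0)]

print(foreground_weights(CENTERS, GT_BOXES, PROJ))  # [1.0, 0.0, 0.0]
```

In a fusion pipeline along these lines, such weights could gate which image features are mixed into each voxel. Using ground truth boxes at training time keeps that gating independent of any particular 2D detector.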
