Denoising Weak Lensing Mass Maps with Deep Learning


Dec 14, 2018

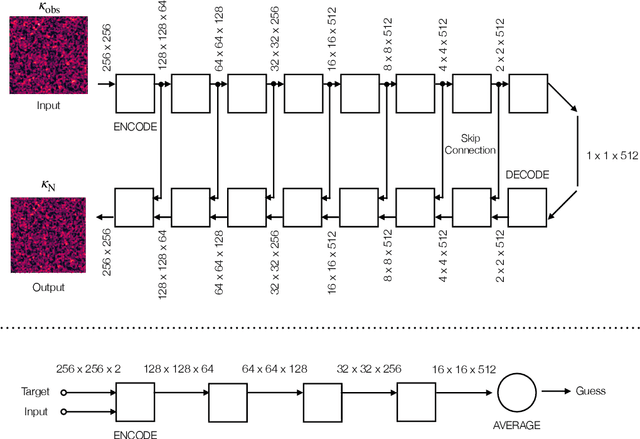

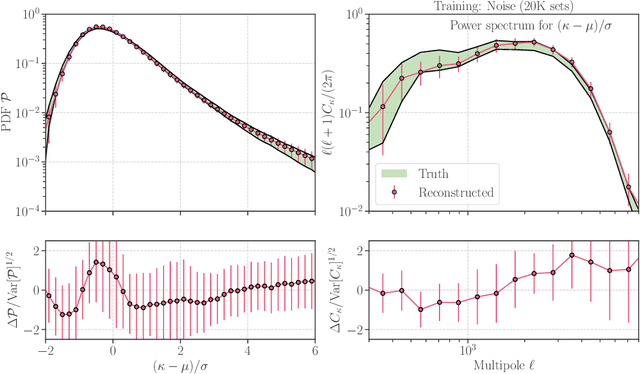

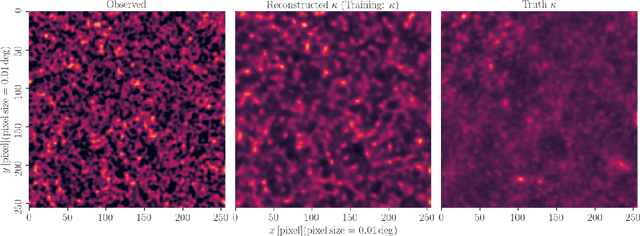

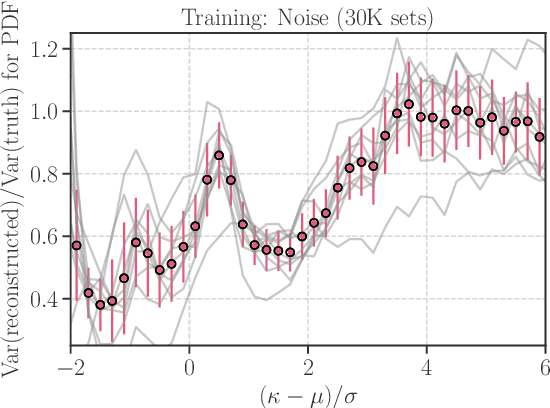

Weak gravitational lensing is a powerful probe of the large-scale cosmic matter distribution. Wide-field galaxy surveys allow us to generate so-called weak lensing maps, but actual observations suffer from noise due to imperfect measurement of galaxy shape distortions and to the limited number density of source galaxies. In this paper, we explore a deep-learning approach to reduce the noise. We develop an image-to-image translation method with conditional adversarial networks (CANs), which learn an efficient mapping from an input noisy weak lensing map to the underlying noise field. We train the CANs using 30,000 image pairs obtained from 1,000 ray-tracing simulations of weak gravitational lensing. We show that the trained CANs reproduce the true one-point probability distribution function of the noiseless lensing map with a bias of less than $1\sigma$ on average, where $\sigma$ is the statistical error. Since a number of model parameters are used in our CANs, our method carries an additional error budget when reconstructing the summary statistics of weak lensing maps. The typical amplitude of this reconstruction error is found to be at the $1$-$2\sigma$ level. Interestingly, pixel-by-pixel denoising is less biased in under-dense regions than in over-dense regions. Our deep-learning approach is complementary to existing analysis methods, which focus on clustering properties and peak statistics of weak lensing maps.
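As a rough illustration of the quantities named in the abstract, the sketch below shows how a predicted noise field could be turned into a denoised map and how the bias of the one-point PDF could be expressed in units of the statistical error $\sigma$. It is not the paper's pipeline: the Gaussian toy maps, the map size, the noise levels, the binning, and the function names `denoise`, `one_point_pdf`, and `bias_in_sigma` are all assumptions made for this example, the network output is replaced by a stand-in (the true noise plus a small random error), and obtaining the denoised map by simple subtraction is likewise an assumption.

```python
import numpy as np


def denoise(noisy_map, predicted_noise):
    """Subtract a predicted noise field from the noisy map (assumed recipe)."""
    return noisy_map - predicted_noise


def one_point_pdf(kappa_map, bin_edges):
    """Normalised histogram of pixel values, i.e. the one-point PDF."""
    pdf, _ = np.histogram(kappa_map, bins=bin_edges, density=True)
    return pdf


def bias_in_sigma(pdf_estimate, pdf_truth, sigma):
    """Difference between two PDFs in units of the statistical error."""
    return (pdf_estimate - pdf_truth) / sigma


# Toy data: Gaussian fields stand in for simulated lensing maps (assumption).
rng = np.random.default_rng(0)
n_maps, n_pix = 20, 64
true_maps = rng.normal(0.0, 0.02, size=(n_maps, n_pix, n_pix))
noise = rng.normal(0.0, 0.04, size=true_maps.shape)
noisy_maps = true_maps + noise

# Stand-in for a trained network: the true noise plus a small error.
predicted_noise = noise + rng.normal(0.0, 0.005, size=noise.shape)
denoised_maps = denoise(noisy_maps, predicted_noise)

# One-point PDF of every map, then the mean and scatter over realisations.
bin_edges = np.linspace(-0.06, 0.06, 13)
pdf_true = np.array([one_point_pdf(m, bin_edges) for m in true_maps])
pdf_denoised = np.array([one_point_pdf(m, bin_edges) for m in denoised_maps])

sigma = pdf_true.std(axis=0, ddof=1)  # map-to-map scatter per bin
bias = bias_in_sigma(pdf_denoised.mean(axis=0), pdf_true.mean(axis=0), sigma)
print(np.abs(bias).mean())
```

Here $\sigma$ is taken to be the map-to-map scatter of the true PDF in each bin, which is only one possible reading of "statistical error"; a real analysis would define it from the survey specification.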
