Demystifying Functional Random Forests: Novel Explainability Tools for Model Transparency in High-Dimensional Spaces

Paper and Code

Aug 22, 2024

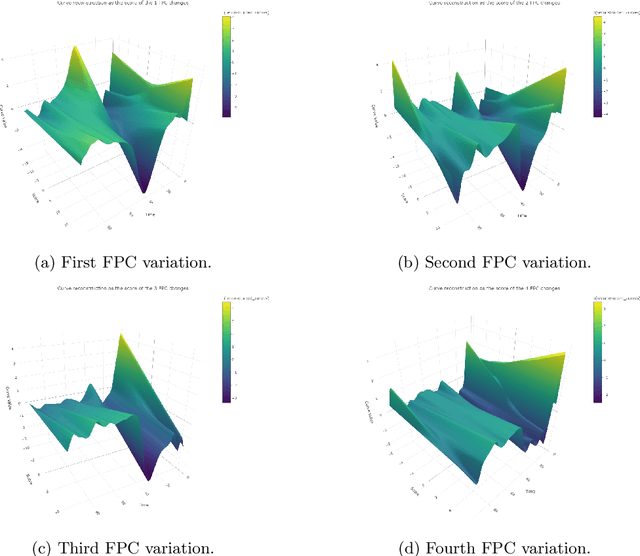

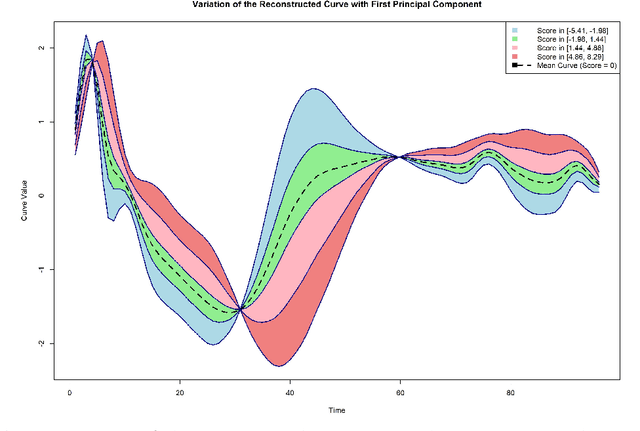

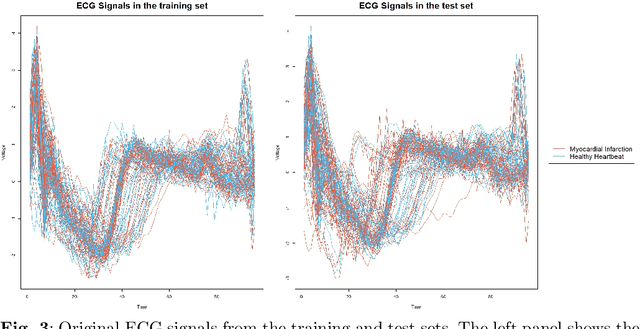

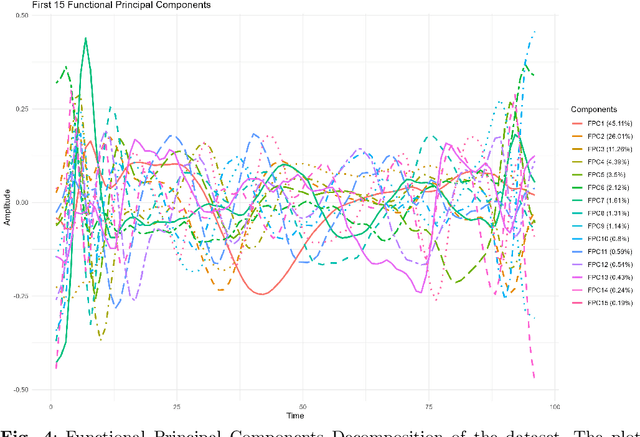

The advent of big data has raised significant challenges in analysing high-dimensional datasets across domains such as medicine, ecology, and economics. Functional Data Analysis (FDA) has proven to be a robust framework for addressing these challenges: it transforms high-dimensional data into functional forms that capture intricate temporal and spatial patterns. However, despite advances in functional classification methods and the very high performance achieved by combining FDA with ensemble methods, a critical gap persists in the literature concerning the transparency and interpretability of black-box models such as Functional Random Forests (FRF). To address this gap, this paper introduces a novel suite of explainability tools that illuminate the inner mechanisms of FRF. We propose Functional Partial Dependence Plots (FPDPs), Functional Principal Component (FPC) Probability Heatmaps, various model-specific and model-agnostic FPC importance metrics, and the FPC Internal-External Importance and Explained Variance Bubble Plot. Together, these tools enhance the transparency of FRF models by providing a detailed analysis of how individual FPCs contribute to model predictions. Applying them to an ECG dataset, we demonstrate their effectiveness in revealing critical patterns and improving the explainability of FRF.
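To make the pipeline in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It makes several assumptions that do not come from the paper: the curves are synthetic rather than ECG recordings, FPCA is approximated by ordinary PCA on curves sampled on a common grid, the random forest is scikit-learn's `RandomForestClassifier` fitted on the FPC scores, "internal" importance is taken to mean impurity-based importance, and "external" importance is taken to mean permutation importance. All variable names are illustrative.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import partial_dependence, permutation_importance

rng = np.random.default_rng(0)

# Synthetic stand-in for discretized curves (NOT the paper's ECG data):
# the two classes differ in the amplitude of a single bump.
n_per_class, n_grid = 100, 80
t = np.linspace(0.0, 1.0, n_grid)
bump = np.exp(-((t - 0.5) ** 2) / 0.005)
labels = np.repeat([0, 1], n_per_class)
amplitude = np.where(labels == 1, 2.0, 1.0)[:, None]
nuisance = 0.3 * np.sin(2 * np.pi * t) * rng.normal(size=(2 * n_per_class, 1))
noise = 0.1 * rng.normal(size=(2 * n_per_class, n_grid))
curves = amplitude * bump + nuisance + noise

# Assumption: FPCA approximated by PCA on the sampled grid.
# Each curve is reduced to a short vector of FPC scores.
n_components = 4
fpca = PCA(n_components=n_components)
scores = fpca.fit_transform(curves)
explained = fpca.explained_variance_ratio_

# Random forest fitted on the FPC scores.
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(scores, labels)

# Model-specific ("internal") importance: impurity-based.
internal = forest.feature_importances_

# Model-agnostic ("external") importance: permutation-based.
# Computed in-sample here for brevity; held-out data is preferable.
external = permutation_importance(
    forest, scores, labels, n_repeats=10, random_state=0
).importances_mean

# Partial dependence of the predicted class-1 probability on the
# FPC score with the largest internal importance.
top = int(np.argmax(internal))
pd_result = partial_dependence(
    forest, scores, features=[top], kind="average", grid_resolution=20
)
pd_curve = pd_result["average"][0]

for k in range(n_components):
    print(
        f"FPC{k + 1}: explained={explained[k]:.3f} "
        f"internal={internal[k]:.3f} external={external[k]:.3f}"
    )
```

The three per-component quantities printed at the end (explained variance, internal importance, external importance) are the kind of inputs a bubble plot like the one named in the abstract would plausibly combine, and `pd_curve` is the one-dimensional analogue of a partial dependence plot over a single FPC score. How the paper maps partial dependence back onto the functional domain is not specified in the abstract, so that step is left out of the sketch.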
