Delve into the Performance Degradation of Differentiable Architecture Search


Sep 28, 2021

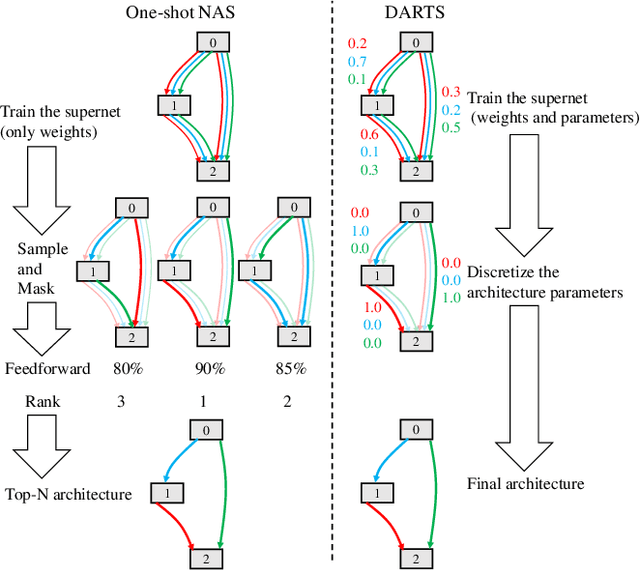

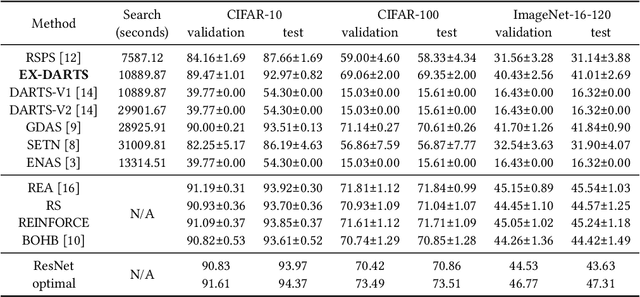

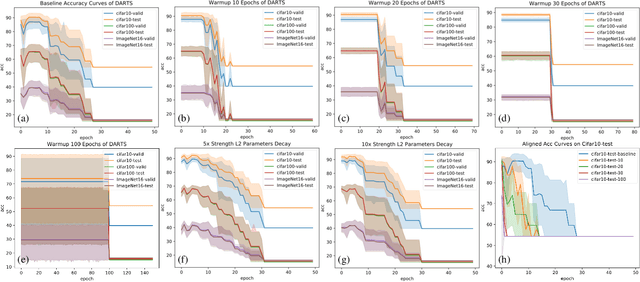

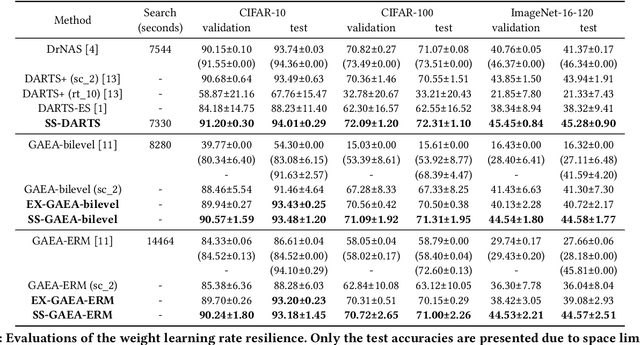

Differentiable architecture search (DARTS) is widely considered prone to overfitting the validation set, which leads to performance degradation. We first run a series of exploratory experiments to verify that neither strong regularization of the architecture parameters nor a warmup training scheme can effectively solve this problem. Based on the insights from these experiments, we conjecture that the performance of DARTS does not depend on well-trained supernet weights, and we argue that the architecture parameters should be trained with the gradients obtained in the early stage of training rather than the final stage. This argument is then verified by exchanging the learning rate schemes of the weights and the architecture parameters. Experimental results show that this simple swap of learning rates effectively resolves the degradation and achieves competitive performance. Further empirical evidence suggests that the degradation is not simply a matter of overfitting the validation set, but is linked to the operation selection bias within the bilevel optimization dynamics. We demonstrate that this bias generalizes and propose to exploit it for an operation-magnitude-based selective stop.
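To make the learning rate swap concrete, here is a minimal sketch in plain Python. It rests on assumptions not stated in the abstract: that the baseline follows the common DARTS setup (cosine-annealed learning rate for the supernet weights, constant learning rate for the architecture parameters), and that "swapping" means the weights get the constant rate while the architecture parameters get the annealed one. The function names and default values (`w_lr=0.025`, `w_lr_min=0.001`, `a_lr=3e-4`) are illustrative, not taken from the paper.

```python
import math


def cosine_lr(epoch, total_epochs, lr_max, lr_min=0.0):
    """Cosine-annealed learning rate for a 0-indexed epoch."""
    cos_term = 1.0 + math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * cos_term


def lr_schedules(total_epochs, swap=False, w_lr=0.025, w_lr_min=0.001, a_lr=3e-4):
    """Return per-epoch learning rates for (weights, architecture parameters).

    swap=False: assumed baseline (weights annealed, architecture constant).
    swap=True:  assumed swapped scheme (weights constant, architecture annealed),
                so architecture updates are largest early in training.
    All default values are illustrative assumptions.
    """
    weight_lrs, arch_lrs = [], []
    for epoch in range(total_epochs):
        if swap:
            weight_lrs.append(w_lr)
            arch_lrs.append(cosine_lr(epoch, total_epochs, a_lr))
        else:
            weight_lrs.append(cosine_lr(epoch, total_epochs, w_lr, w_lr_min))
            arch_lrs.append(a_lr)
    return weight_lrs, arch_lrs


if __name__ == "__main__":
    base_w, base_a = lr_schedules(50, swap=False)
    swap_w, swap_a = lr_schedules(50, swap=True)
    for epoch in (0, 25, 49):
        print(epoch, base_w[epoch], base_a[epoch], swap_w[epoch], swap_a[epoch])
```

Under the swapped scheme the architecture parameters take their largest steps while the supernet weights are still poorly trained, which is one way to read the claim that early-stage gradients should drive the architecture parameters.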
