DelGrad: Exact gradients in spiking networks for learning transmission delays and weights


Apr 30, 2024

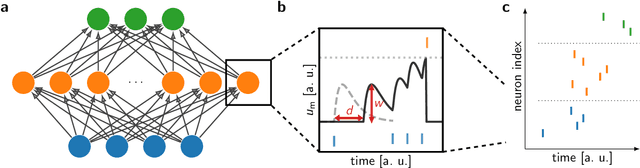

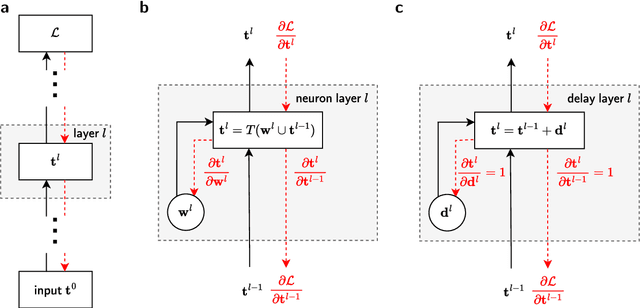

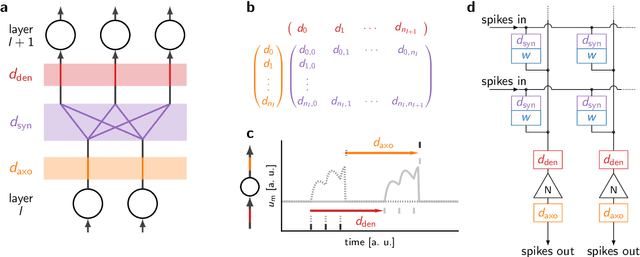

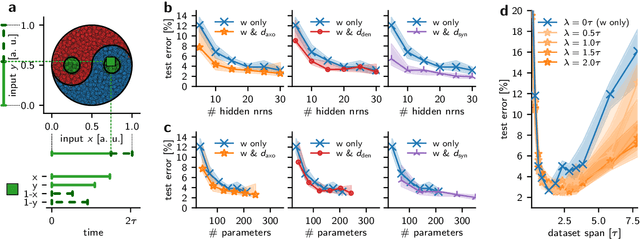

Spiking neural networks (SNNs) inherently rely on the timing of signals for representing and processing information. Transmission delays play an important role in shaping these temporal characteristics. Recent work has demonstrated the substantial advantages of learning these delays along with synaptic weights, both in accuracy and in memory efficiency. However, these approaches lose precision and efficiency because they operate in discrete time with approximate gradients, and they also require membrane potential recordings for calculating parameter updates. To alleviate these issues, we propose an analytical approach for calculating exact loss gradients with respect to both synaptic weights and delays in an event-based fashion. The inclusion of delays emerges naturally within our proposed formalism, enriching the model's search space with a temporal dimension. Our algorithm is based purely on the timing of individual spikes and does not require access to other variables such as membrane potentials. We explicitly compare the impact of different types of delays (axonal, dendritic and synaptic) on accuracy and parameter efficiency. Furthermore, while previous work on learnable delays in SNNs has been mostly confined to software simulations, we demonstrate the functionality and benefits of our approach on the BrainScaleS-2 neuromorphic platform.
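To make the idea of exact, spike-time-only gradients concrete, the following is a minimal sketch and not the paper's actual neuron model or code. It assumes a simplified non-leaky integrate-and-fire neuron with exponentially decaying synaptic currents (time constant 1), for which the output spike time has a closed form. It also assumes that every input arrives before the output spike and that each synapse has its own delay. The function names `spike_time` and `spike_time_grads` and all parameter values are hypothetical. In this toy model a delay `d_i` simply shifts the arrival time of input spike `t_i`, so the gradient with respect to the delay follows from the same expression as the gradient with respect to the input time.

```python
import math

def spike_time(t_in, delays, weights, theta=1.0):
    """Closed-form output spike time of a toy non-leaky IF neuron.

    Assumed model (not taken from the paper): each input at arrival time
    a_i = t_i + d_i injects a current w_i * exp(-(t - a_i)), and the neuron
    fires when the integrated current reaches the threshold theta.
    """
    arrivals = [t + d for t, d in zip(t_in, delays)]
    a_sum = sum(w * math.exp(a) for w, a in zip(weights, arrivals))
    w_sum = sum(weights)
    if w_sum <= theta:
        raise ValueError("summed weights too small: threshold is never reached")
    t_out = math.log(a_sum / (w_sum - theta))
    if t_out < max(arrivals):
        raise ValueError("assumption violated: not all inputs precede the output spike")
    return t_out

def spike_time_grads(t_in, delays, weights, theta=1.0):
    """Exact derivatives of the output spike time w.r.t. delays and weights.

    Only spike (arrival) times and parameters are used; no membrane
    potential trace is needed.
    """
    arrivals = [t + d for t, d in zip(t_in, delays)]
    exp_a = [math.exp(a) for a in arrivals]
    a_sum = sum(w * e for w, e in zip(weights, exp_a))
    w_sum = sum(weights)
    # dT/dd_i = w_i * exp(a_i) / sum_j w_j * exp(a_j)
    d_delay = [w * e / a_sum for w, e in zip(weights, exp_a)]
    # dT/dw_i = exp(a_i) / sum_j w_j * exp(a_j) - 1 / (sum_j w_j - theta)
    d_weight = [e / a_sum - 1.0 / (w_sum - theta) for e in exp_a]
    return d_delay, d_weight

if __name__ == "__main__":
    t_in = [0.0, 0.5, 1.0]
    delays = [0.2, 0.1, 0.0]
    weights = [0.6, 0.6, 0.6]
    print("output spike time:", spike_time(t_in, delays, weights))
    print("gradients (delays, weights):", spike_time_grads(t_in, delays, weights))
```

In this sketch the delay gradients sum to one, which reflects that shifting all inputs by the same amount shifts the output spike by that amount. Under the same assumptions, an axonal delay would be a single value shared by all outgoing synapses of a presynaptic neuron and a dendritic delay a single value shared by all incoming synapses of a postsynaptic neuron, so their gradients would be sums of the per-synapse terms above.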
