DEFT: Distilling Entangled Factors


Feb 08, 2021

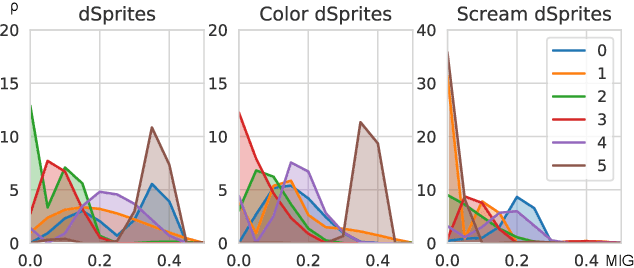

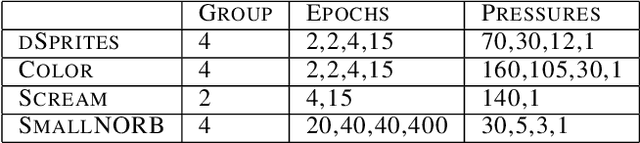

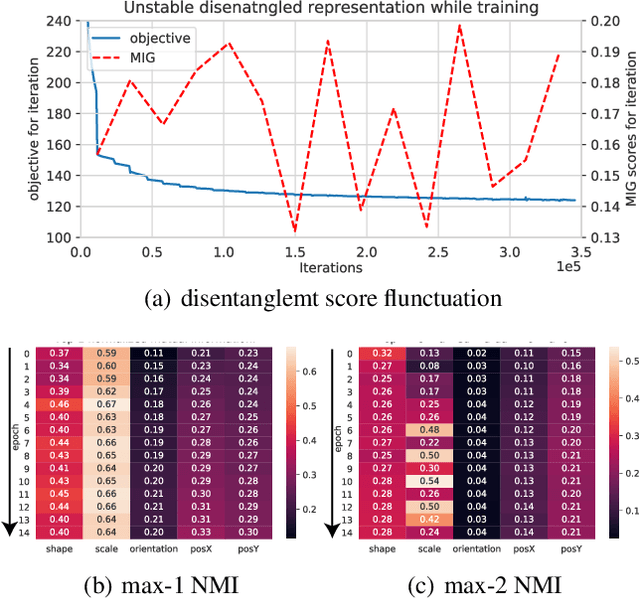

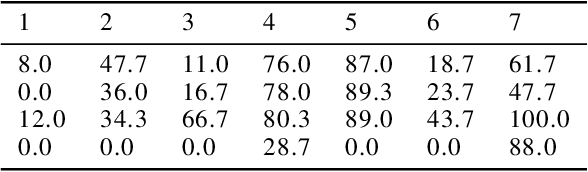

Disentanglement is a highly desirable property of representations because it resembles human understanding and reasoning. However, the performance of current disentanglement approaches remains unreliable and depends heavily on hyperparameter selection. Inspired by fractional distillation in chemistry, we propose DEFT, a disentanglement framework that raises the lower limit of performance for disentanglement approaches based on variational autoencoders. It applies a multi-stage training strategy, comprising multi-group encoders with different learning rates and piecewise disentanglement pressure, to distill entangled factors stage by stage. Furthermore, we provide insight into choosing the hyperparameters according to information thresholds. We evaluate DEFT on three variants of dSprites and on SmallNORB, where it achieves robust and high disentanglement scores.
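To make the multi-stage idea concrete, the following is a minimal sketch of how a piecewise disentanglement pressure and per-group encoder learning rates could be scheduled. It is not the authors' implementation: the abstract gives no numbers or update rules, so the `Stage` structure, the beta values, the step counts, the `damped` factor, and the rule that one encoder group is active per stage are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    steps: int    # number of training steps in this stage (assumed value)
    beta: float   # KL weight, i.e. the disentanglement pressure, held constant within the stage


def stage_at(step, stages):
    """Return the index of the stage that contains a global training step."""
    boundary = 0
    for i, stage in enumerate(stages):
        boundary += stage.steps
        if step < boundary:
            return i
    return len(stages) - 1  # clamp to the last stage once the schedule is exhausted


def beta_at(step, stages):
    """Piecewise-constant pressure: look up the beta of the current stage."""
    return stages[stage_at(step, stages)].beta


def group_learning_rates(step, stages, base_lr=1e-3, damped=0.1):
    """Learning rate per encoder group, assuming group k is paired with stage k.

    Hypothetical rule: the active group trains at base_lr, groups from earlier
    stages keep training at a damped rate, and later groups are not yet trained.
    """
    active = stage_at(step, stages)
    rates = []
    for k in range(len(stages)):
        if k == active:
            rates.append(base_lr)
        elif k < active:
            rates.append(base_lr * damped)
        else:
            rates.append(0.0)
    return rates


# Illustrative schedule only; the values are not taken from the paper.
STAGES = [Stage(steps=1000, beta=70.0), Stage(steps=1000, beta=30.0), Stage(steps=1000, beta=4.0)]

if __name__ == "__main__":
    for step in (0, 1500, 2999):
        print(step, beta_at(step, STAGES), group_learning_rates(step, STAGES))
```

In a real training loop, `beta_at` would weight the KL term of the VAE loss, and the values from `group_learning_rates` would be written into the optimizer's parameter groups at each stage boundary.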
