Defensive Collaborative Multi-task Training - Defending against Adversarial Attack towards Deep Neural Networks


Jul 03, 2018

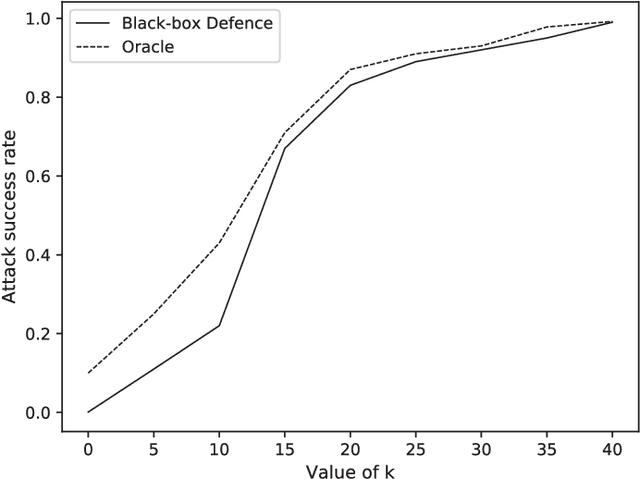

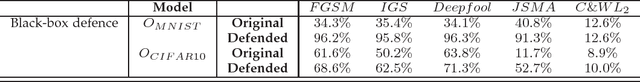

Deep neural networks (DNNs) have shown impressive performance on hard perceptual problems. However, researchers have found that DNN-based systems are vulnerable to adversarial examples that contain specially crafted, human-imperceptible perturbations. Such perturbations cause DNN-based systems to misclassify the adversarial examples, with potentially disastrous consequences in applications where safety or security is crucial. To address this problem, this paper proposes a novel defensive framework based on collaborative multi-task training. The proposed defence mechanism first incorporates specific label pairs into the adversarial training process to enhance model robustness in a black-box setting. A novel collaborative multi-task training framework is then used to construct a detector that identifies adversarial examples based on the pairwise relationship of the label pairs. The detector can identify and reject high-confidence adversarial examples that bypass the black-box defence. The model, whose robustness has been enhanced, works reciprocally with the detector on the false-negative adversarial examples. Importantly, the proposed collaborative architecture can prevent the adversary from finding valid adversarial examples in a near-white-box setting. We evaluate our defence against four state-of-the-art attacks on the MNIST and CIFAR10 datasets. Our defence method increases the classification accuracy of black-box adversarial examples up to 96.3% and detects up to 98.7% of the high-confidence adversarial examples, while decreasing the accuracy of benign example classification by only 2.1% on the CIFAR10 dataset.
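The abstract does not give the detector's exact decision rule, so the following is only a rough illustration of what a label-pair consistency check could look like. It assumes a model with two output heads. The primary head classifies the input. The auxiliary head is assumed to be trained to predict a fixed partner label for each class. An input is rejected when the auxiliary prediction breaks that pairwise relationship. The names `classify_or_reject` and `LABEL_PAIR`, the 4-class pairing, and the hand-written scores are all hypothetical and are not taken from the paper.

```python
from typing import Dict, Optional, Sequence


def argmax(scores: Sequence[float]) -> int:
    """Index of the largest score (the first index wins on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify_or_reject(
    primary_scores: Sequence[float],
    auxiliary_scores: Sequence[float],
    label_pair: Dict[int, int],
) -> Optional[int]:
    """Return the primary prediction, or None if the two heads disagree.

    Hypothetical rule (an assumption, not the paper's method): the auxiliary
    head is trained to output label_pair[y] whenever the true label is y, so
    an input whose auxiliary prediction is not the partner of its primary
    prediction is treated as adversarial and rejected.
    """
    primary = argmax(primary_scores)
    auxiliary = argmax(auxiliary_scores)
    if label_pair[primary] != auxiliary:
        return None  # pairwise relationship violated: reject the input
    return primary


# Hypothetical pairing for a 4-class problem: each class has one partner.
LABEL_PAIR = {0: 2, 1: 3, 2: 0, 3: 1}

# Heads agree: primary predicts 1, auxiliary predicts its partner 3.
benign = classify_or_reject([0.1, 0.7, 0.1, 0.1], [0.05, 0.05, 0.1, 0.8], LABEL_PAIR)

# Primary was pushed to class 0, but auxiliary still predicts 3 (partner of 1).
attacked = classify_or_reject([0.8, 0.1, 0.05, 0.05], [0.05, 0.05, 0.1, 0.8], LABEL_PAIR)

print(benign, attacked)  # prints "1 None"
```

The idea this illustrates is that an attack which flips only the primary output leaves the two heads inconsistent. An attacker would then have to move both heads to a matching pair at once. In a real system, the scores would come from a trained network rather than fixed lists.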
