Defenses Against Multi-Sticker Physical Domain Attacks on Classifiers

Jan 26, 2021

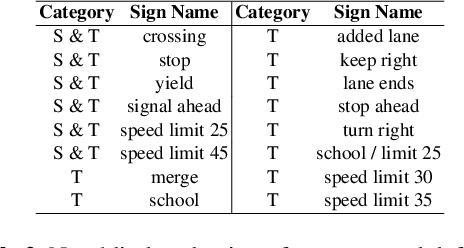

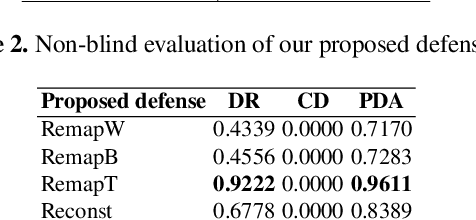

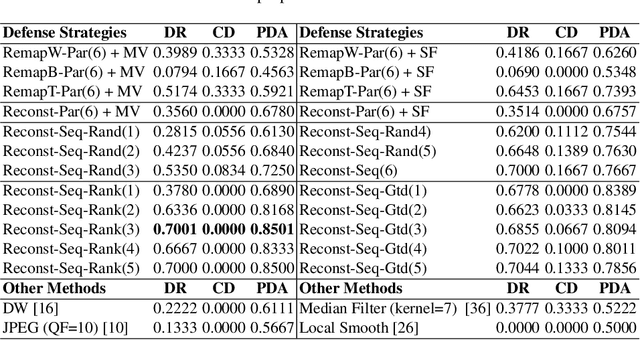

Physical domain adversarial attacks have recently drawn significant attention from the machine learning community. One important attack, proposed by Eykholt et al., can fool a classifier by placing black and white stickers on an object such as a road sign. Although this attack may pose a significant threat to visual classifiers, no existing defenses are designed specifically to protect against it. In this paper, we propose new defenses against multi-sticker attacks. We present defensive strategies that can operate when the defender has full, partial, or no prior information about the attack. Through extensive experiments, we show that our proposed defenses can outperform existing defenses against physical attacks when presented with a multi-sticker attack.
