DeepOpt: Scalable Specification-based Falsification of Neural Networks using Black-Box Optimization

Paper and Code

Jun 03, 2021

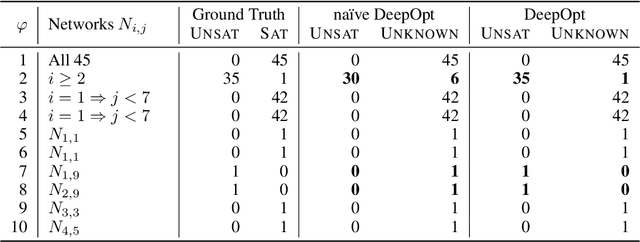

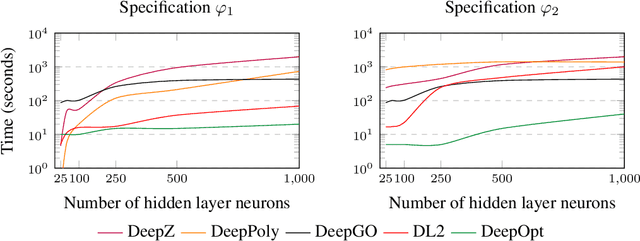

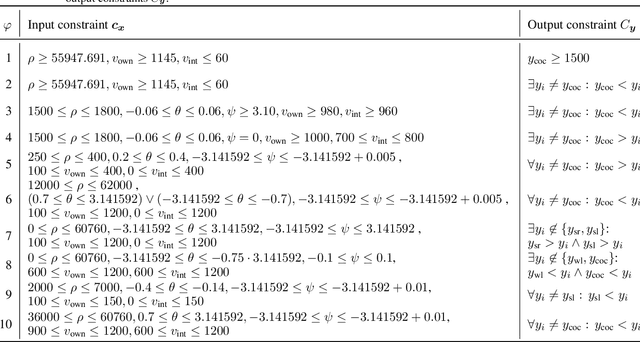

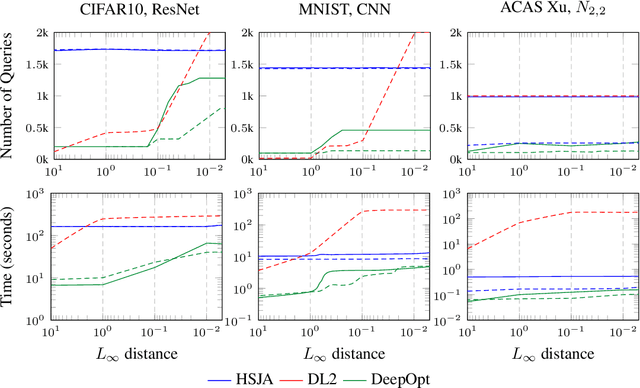

Decisions made by deep neural networks (DNNs) have a tremendous impact on the dependability of the systems that they are embedded into, which is of particular concern in the realm of safety-critical systems. In this paper we consider specification-based falsification of DNNs with the aim to support debugging and repair. We propose DeepOpt, a falsification technique based on black-box optimization, which generates counterexamples from a DNN in a refinement loop. DeepOpt can analyze input-output specifications, which makes it more general than falsification approaches that only support robustness specifications. The key idea is to algebraically combine the DNN with the input and output constraints derived from the specification. We have implemented DeepOpt and evaluated it on DNNs of varying sizes and architectures. Experimental comparisons demonstrate DeepOpt's precision and scalability; in particular, DeepOpt requires very few queries to the DNN.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge