DeepMLE: A Robust Deep Maximum Likelihood Estimator for Two-view Structure from Motion


Oct 11, 2022

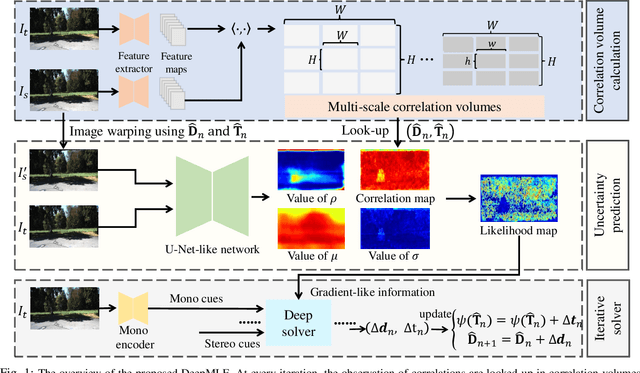

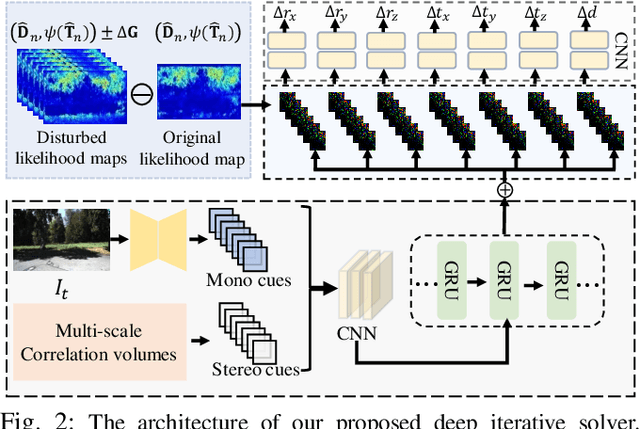

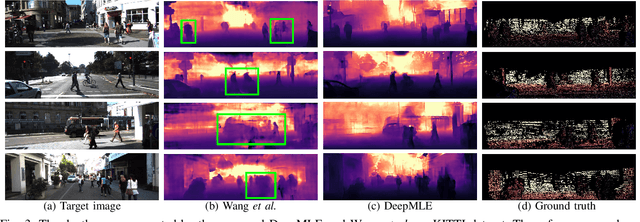

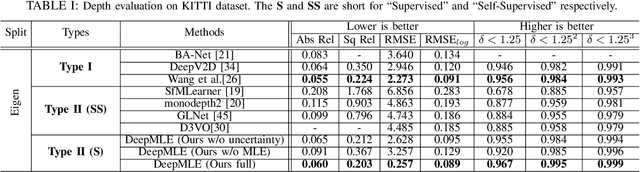

Two-view structure from motion (SfM) is the cornerstone of 3D reconstruction and visual SLAM (vSLAM). Many existing end-to-end learning-based methods formulate it as a brute-force regression problem. However, because they make little use of traditional geometric models, these methods are not robust in unseen environments. To improve the generalization capability and robustness of end-to-end two-view SfM networks, we formulate two-view SfM as a maximum likelihood estimation (MLE) problem and solve it with the proposed framework, denoted DeepMLE. First, we use deep multi-scale correlation maps to describe the visual similarities of the 2D image matches determined by ego-motion. Second, to increase the robustness of our framework, we model the likelihood function of the correlations of 2D image matches as a Gaussian-uniform mixture distribution, which accounts for the uncertainty caused by illumination changes, image noise, and moving objects. Meanwhile, an uncertainty prediction module predicts the pixel-wise distribution parameters. Finally, we iteratively refine the depth and relative camera pose using gradient-like information to maximize the likelihood function of the correlations. Extensive experiments on several datasets show that our method significantly outperforms state-of-the-art end-to-end two-view SfM approaches in accuracy and generalization capability.
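To make the idea of a Gaussian-uniform mixture likelihood concrete, the following is a minimal sketch and not the paper's implementation. It assumes a scalar per-pixel matching residual, a zero-mean Gaussian inlier component, and a uniform outlier component over an interval of width `support`. The names `mixture_likelihood`, `negative_log_likelihood`, `inlier_weight`, and `support` are hypothetical and chosen only for illustration. In the paper the per-pixel distribution parameters come from the uncertainty prediction module, whereas here they are passed in by hand.

```python
import math


def mixture_likelihood(residual, sigma, inlier_weight, support=2.0):
    """Likelihood of one residual under a Gaussian-uniform mixture.

    Assumed (illustrative) parameterization:
      - inlier component: zero-mean Gaussian with standard deviation `sigma`
      - outlier component: uniform density over an interval of width `support`
      - `inlier_weight` in [0, 1] mixes the two components
    """
    gaussian = math.exp(-0.5 * (residual / sigma) ** 2) / (
        sigma * math.sqrt(2.0 * math.pi)
    )
    uniform = 1.0 / support
    return inlier_weight * gaussian + (1.0 - inlier_weight) * uniform


def negative_log_likelihood(residuals, sigmas, inlier_weights, support=2.0):
    """Mean negative log-likelihood over a set of per-pixel residuals."""
    total = 0.0
    for r, s, w in zip(residuals, sigmas, inlier_weights):
        # Clamp to avoid log(0) when the uniform component has zero weight.
        p = max(mixture_likelihood(r, s, w, support), 1e-300)
        total -= math.log(p)
    return total / len(residuals)


if __name__ == "__main__":
    # A small residual (likely inlier) and a large one (e.g. a moving object).
    residuals = [0.05, 3.0]
    sigmas = [0.1, 0.1]
    weights = [0.9, 0.9]
    print(negative_log_likelihood(residuals, sigmas, weights))
```

The design point this illustrates is that the uniform term puts a floor under the likelihood: a very large residual contributes a bounded penalty instead of the quadratic penalty of a pure Gaussian, so outlier pixels cannot dominate the objective being maximized.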
