Deep Video Deblurring: The Devil is in the Details


Sep 26, 2019

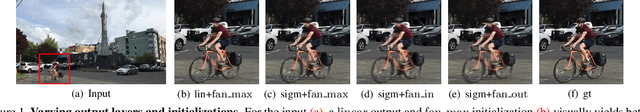

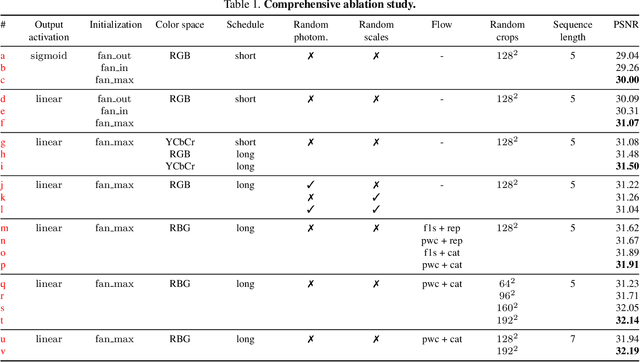

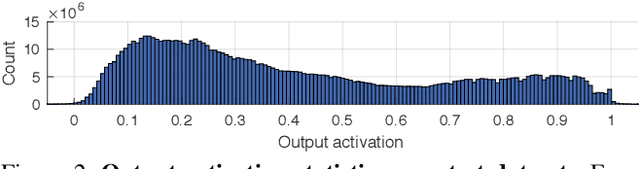

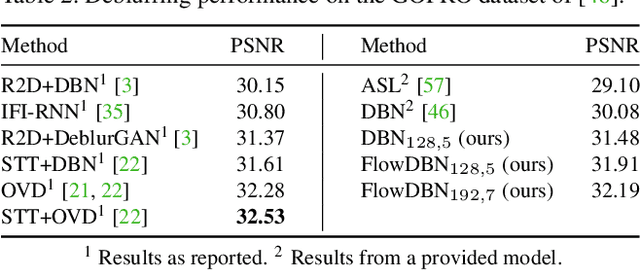

Video deblurring for hand-held cameras is a challenging task, since the underlying blur is caused by both camera shake and object motion. State-of-the-art deep networks exploit temporal information from neighboring frames, either by means of spatio-temporal transformers or by recurrent architectures. In contrast to these involved models, we found that a simple baseline CNN can perform astonishingly well when particular care is taken with respect to the details of the model and training procedure. To that end, we conduct a comprehensive study of these crucial details, uncovering extreme differences in quantitative and qualitative performance. Exploiting these details allows us to boost the architecture and training procedure of a simple baseline CNN by a staggering 3.15 dB, such that it becomes highly competitive with cutting-edge networks. This raises the question of whether the reported accuracy difference between models is always due to technical contributions or is also subject to such orthogonal but crucial details.
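To put the reported gain in perspective, the following minimal sketch converts a decibel improvement into the corresponding reduction in mean squared error. It assumes the 3.15 dB figure refers to PSNR, which is the usual metric in deblurring benchmarks but is not named in the abstract. The function names `psnr` and `mse_ratio_for_gain` are hypothetical helpers for illustration, not part of the authors' code.

```python
import math


def psnr(mse: float, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error.

    max_value is the largest possible pixel value (1.0 for images in [0, 1]).
    """
    if mse <= 0:
        raise ValueError("mse must be positive")
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db: float) -> float:
    """Factor by which the MSE shrinks when PSNR rises by gain_db."""
    return 10.0 ** (gain_db / 10.0)


# Assumption: the 3.15 dB gain from the abstract is a PSNR gain.
ratio = mse_ratio_for_gain(3.15)
print(f"MSE reduction factor: {ratio:.2f}")
```

Under that assumption, a 3.15 dB gain corresponds to roughly halving the mean squared error against the sharp ground-truth frames.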
