Deep Single Shot Musical Instrument Identification using Scalograms

Aug 08, 2021

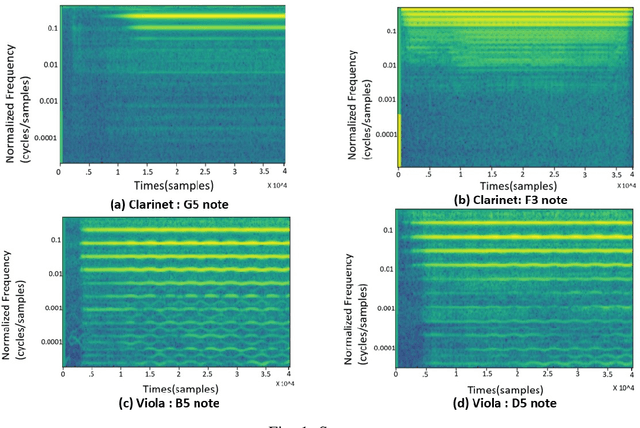

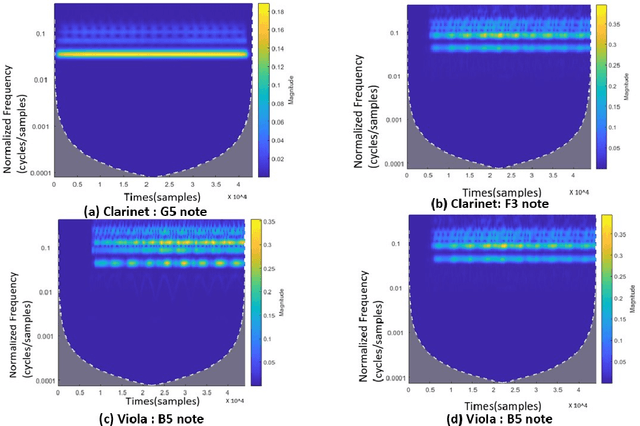

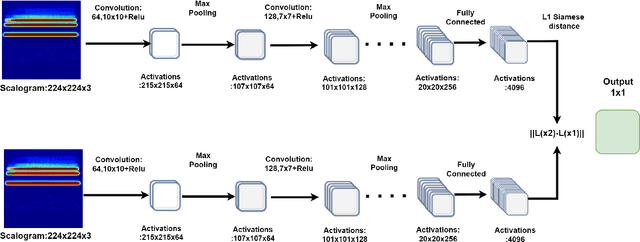

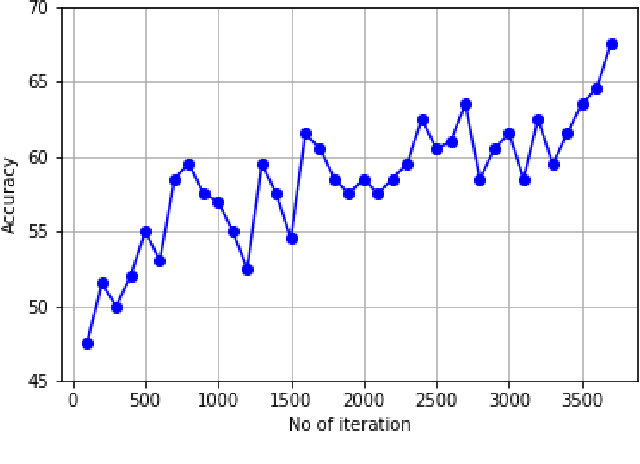

Musical instrument identification has long had a reputation as one of the most ill-posed problems in the field of Music Information Retrieval (MIR). Despite several robust attempts to solve it over the last five decades or so, the problem remains an open conundrum. In this work, we take on an even more complex version of the traditional problem statement: identifying instruments with minimal data available, namely one audio excerpt per class. We propose to use a convolutional Siamese network, and a residual variant of it, to identify musical instruments from the scalograms of their audio excerpts. Our experiments on two publicly available datasets show that our algorithm outperforms comparable existing algorithms in the present-day literature by $\approx 3\%$.
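The abstract describes the pipeline only at a high level: audio excerpt, then scalogram, then Siamese network, then a one-shot decision. A minimal pure-Python sketch of those ingredients follows, under loud assumptions. The scalogram is taken to be the squared magnitude of a Morlet continuous wavelet transform (the abstract names neither the wavelet nor the scales), and the trained convolutional branch is replaced by a hypothetical hand-crafted `scale_profile` embedding so the example runs without a model. `scalogram`, `scale_profile`, `one_shot_classify`, `tone`, `SCALES`, and `W0` are illustrative names, not the authors' code. The nearest-reference rule under Euclidean distance is one common way to turn a Siamese embedding into a one-shot classifier, not necessarily the rule used in the paper.

```python
import cmath
import math
from typing import Callable, Dict, List, Sequence

W0 = 6.0                            # Morlet centre frequency (assumed; a common default)
SCALES = [2, 3, 4, 6, 8, 12, 16]    # hypothetical scale grid, in samples


def morlet(t: float, w0: float = W0) -> complex:
    """Complex Morlet mother wavelet (admissibility correction omitted; negligible for w0 = 6)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-0.5 * t * t)


def scalogram(x: Sequence[float], scales: Sequence[float] = SCALES) -> List[List[float]]:
    """Squared magnitude of the continuous wavelet transform: one row per scale, one column per sample."""
    n = len(x)
    rows = []
    for s in scales:
        half = int(math.ceil(4 * s))  # Gaussian envelope is negligible beyond |t| = 4
        # Conjugated wavelet taps for offsets -half..half, normalised by 1/sqrt(s).
        taps = [morlet(k / s).conjugate() / math.sqrt(s) for k in range(-half, half + 1)]
        row = []
        for b in range(n):
            acc = 0j
            for k in range(-half, half + 1):
                idx = b + k
                if 0 <= idx < n:  # treat samples outside the signal as zero
                    acc += x[idx] * taps[k + half]
            row.append(abs(acc) ** 2)
        rows.append(row)
    return rows


def scale_profile(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for the trained CNN branch: mean energy per scale, normalised to sum to 1."""
    energies = [sum(row) / len(row) for row in scalogram(x)]
    total = sum(energies) or 1.0
    return [e / total for e in energies]


def one_shot_classify(query: Sequence[float],
                      references: Dict[str, Sequence[float]],
                      embed: Callable[[Sequence[float]], List[float]]) -> str:
    """Return the label whose single reference excerpt is nearest to the query in embedding space.

    The same `embed` function is applied to the query and to every reference,
    which mirrors the weight sharing that defines a Siamese network.
    """
    q = embed(query)
    return min(references, key=lambda label: math.dist(q, embed(references[label])))


def tone(freq: float, n: int = 256, amp: float = 1.0, phase: float = 0.0) -> List[float]:
    """Synthetic sinusoid; freq is in cycles per sample."""
    return [amp * math.cos(2 * math.pi * freq * i + phase) for i in range(n)]


# Usage: one reference excerpt per (hypothetical) class.
references = {"low": tone(0.10), "high": tone(0.25)}
query = tone(0.10, amp=0.3, phase=1.0)
print(one_shot_classify(query, references, scale_profile))  # prints "low"
```

Because `embed` is passed in as a parameter, a trained network (for example, one branch of a convolutional or residual Siamese model) could replace `scale_profile` without changing the decision rule, and only one labelled excerpt per class is needed at inference time.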