Deep SCNN-based Real-time Object Detection for Self-driving Vehicles Using LiDAR Temporal Data

Jan 12, 2020

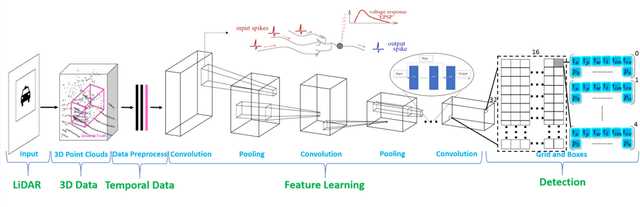

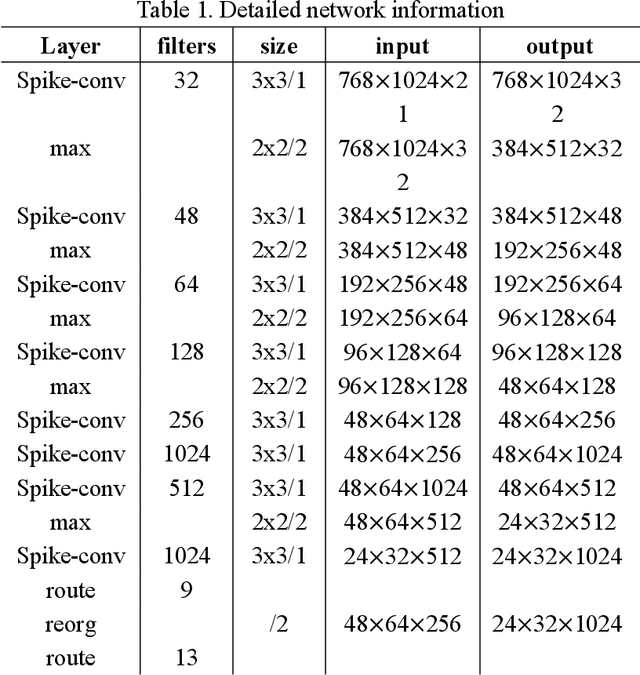

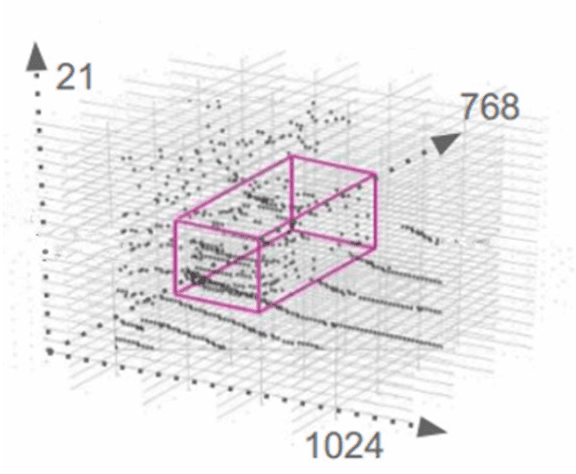

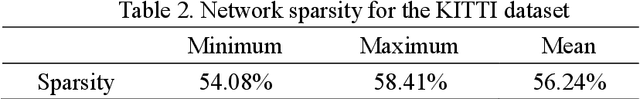

Accurate real-time detection of three-dimensional (3D) objects is a fundamental requirement for self-driving vehicles. Traditional computer-vision approaches are based on convolutional neural networks (CNNs). Although CNNs have achieved high accuracy on the KITTI vision benchmark dataset, few related studies have examined their energy consumption. Spiking neural networks (SNNs) and spiking CNNs (SCNNs) have exhibited lower energy consumption than CNNs. However, few studies have used SNNs or SCNNs to detect objects. Therefore, we developed a novel data preprocessing layer that translates 3D point-cloud spike times into network input, and we employ an SCNN on a YOLOv2 architecture to detect objects via spiking signals. Moreover, we present an estimation method for energy consumption and network sparsity. The results demonstrate that the proposed networks ran at a high frame rate of 35.7 fps on an NVIDIA GTX 1080i graphics processing unit. Additionally, the proposed networks with skip connections performed better than those without skip connections. Both reached state-of-the-art detection accuracy on the KITTI dataset, and our networks consumed a low average energy of 0.585 mJ with a mean sparsity of 56.24%.
