Deep Residual Mixture Models

Paper and Code

Jun 22, 2020

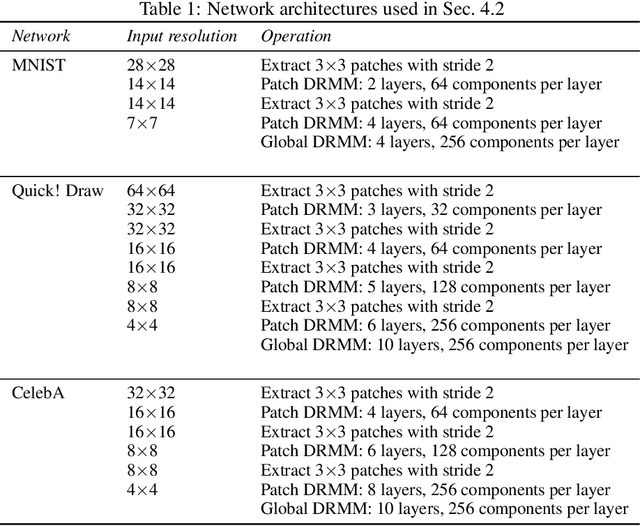

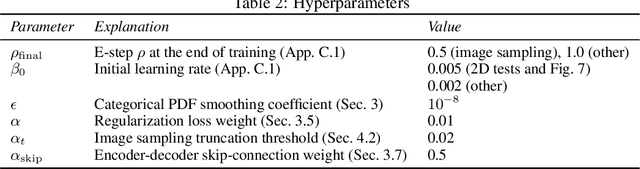

We propose Deep Residual Mixture Models (DRMMs) which share the many desirable properties of Gaussian Mixture Models (GMMs), but with a crucial benefit: The modeling capacity of a DRMM can grow exponentially with depth, while the number of model parameters only grows quadratically. DRMMs allow for extremely flexible conditional sampling, as the conditioning variables can be freely selected without re-training the model, and it is easy to combine the sampling with priors and (in)equality constraints. DRMMs should be applicable where GMMs are traditionally used, but as demonstrated in our experiments, DRMMs scale better to complex, high-dimensional data. We demonstrate the approach in constrained multi-limb inverse kinematics and image completion.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge