Deep Reinforcement Learning with Stage Incentive Mechanism for Robotic Trajectory Planning


Sep 25, 2020

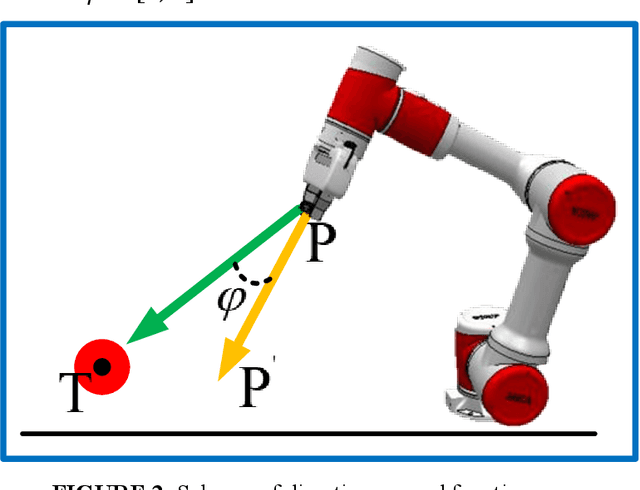

To improve the efficiency of deep reinforcement learning (DRL) based methods for robot manipulator trajectory planning in random working environments, we present three dense reward functions in this paper, in contrast to the traditional sparse reward function. First, a posture reward function is proposed that models distance and direction constraints; it accelerates learning and yields more reasonable trajectories by reducing the blindness of exploration. Second, to improve stability, a stride reward function is proposed that models the distance and the movement distance of the joints as constraints, which makes the learning process more stable. To further improve learning efficiency, we draw on the cognitive process of human behavior and propose a stage incentive mechanism, comprising a hard stage incentive reward function and a soft stage incentive reward function. Extensive experiments show that the proposed soft stage incentive reward function improves the convergence rate by up to 46.9% with state-of-the-art DRL methods. The mean reward at convergence increases by 4.4%~15.5%, and its standard deviation decreases by 21.9%~63.2%. In evaluation, the success rate of trajectory planning for the robot manipulator reaches up to 99.6%.
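The abstract does not give the formulas for these reward functions, so the following is only a minimal Python sketch of what dense rewards of this kind might look like. Every function name, weight, threshold, and functional form here (`posture_reward`, `stride_reward`, `hard_stage_bonus`, `soft_stage_bonus`, the cosine direction term, the sigmoid) is a hypothetical illustration and not the authors' definition.

```python
import math


def _norm(v):
    """Euclidean norm of a vector given as a sequence of floats."""
    return math.sqrt(sum(x * x for x in v))


def posture_reward(ee_prev, ee_now, target, w_dist=1.0, w_dir=0.5):
    """Hypothetical posture-style reward: distance term plus direction term.

    Penalizes the remaining end-effector distance to the target and rewards
    steps whose direction aligns with the direction to the target.
    """
    to_target = [t - p for t, p in zip(target, ee_prev)]
    step = [n - p for n, p in zip(ee_now, ee_prev)]
    dist = _norm([t - n for t, n in zip(target, ee_now)])
    denom = _norm(to_target) * _norm(step)
    # Cosine of the angle between the step and the direction to the target.
    cos_angle = sum(a * b for a, b in zip(to_target, step)) / denom if denom > 0 else 0.0
    return -w_dist * dist + w_dir * cos_angle


def stride_reward(joint_prev, joint_now, w_stride=0.1):
    """Hypothetical stride-style reward: penalizes large joint movements."""
    return -w_stride * _norm([n - p for n, p in zip(joint_now, joint_prev)])


def hard_stage_bonus(dist, near=0.1, bonus=1.0):
    """Hypothetical hard stage incentive: a step bonus inside a distance threshold."""
    return bonus if dist < near else 0.0


def soft_stage_bonus(dist, near=0.1, bonus=1.0, sharpness=20.0):
    """Hypothetical soft stage incentive: a sigmoid that rises smoothly near the target."""
    # Clamp the exponent so math.exp cannot overflow for large distances.
    exponent = min(sharpness * (dist - near), 700.0)
    return bonus / (1.0 + math.exp(exponent))
```

Under these assumptions, a step toward the target scores higher than a step away from it, and the soft bonus changes gradually around the threshold where the hard bonus jumps, which is one plausible reading of why a soft variant could give a steadier learning signal.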
