Deep Reinforcement Learning for Wireless Scheduling with Multiclass Services


Nov 27, 2020

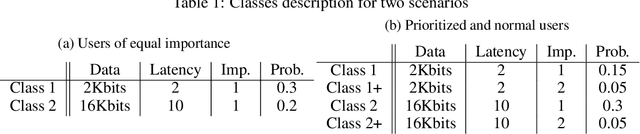

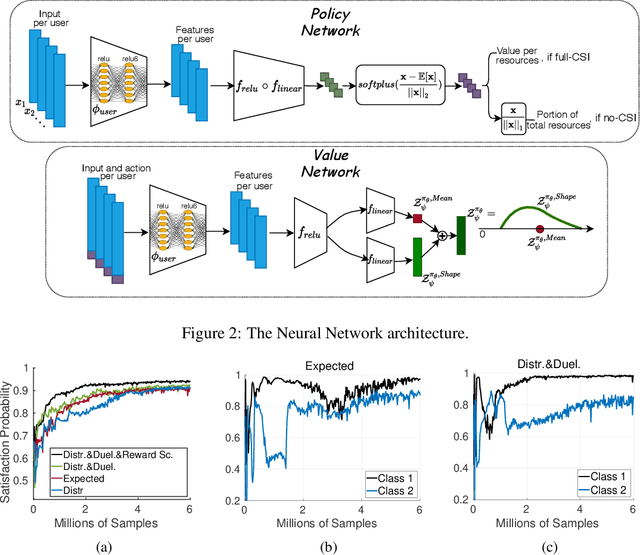

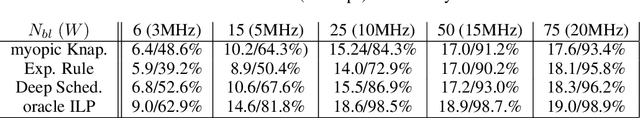

In this paper, we investigate the problem of scheduling and resource allocation over a time-varying set of clients with heterogeneous demands. In this context, a service provider has to schedule traffic destined for users with different classes of requirements and allocate bandwidth resources over time so as to efficiently satisfy service demands within a limited time horizon. This is a highly intricate problem, in particular in wireless communication systems, and solutions may involve tools from diverse fields, including combinatorics and constrained optimization. Although recent work has successfully proposed solutions based on Deep Reinforcement Learning (DRL), the challenging setting of heterogeneous user traffic and demands has not been addressed. We propose a deep deterministic policy gradient algorithm that combines state-of-the-art techniques, namely Distributional RL and Deep Sets, to train a model for heterogeneous traffic scheduling. We test on diverse scenarios with different time-dependence dynamics, user requirements, and available resources, and obtain consistent results. We evaluate the algorithm in a wireless communication setting using both synthetic and real data and show significant gains in Quality of Service (QoS), as defined by the classes, over state-of-the-art conventional algorithms from combinatorics, optimization, and scheduling metrics (e.g., Knapsack, Integer Linear Programming, Frank-Wolfe, Exponential Rule).
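To illustrate why Deep Sets suits a time-varying set of clients, the following is a minimal sketch of a permutation-equivariant scoring layer that accepts any number of users. It is not the authors' architecture: the function names, the per-user feature layout, the random weights, and the softmax-based bandwidth split are all assumptions made for illustration only.

```python
import numpy as np


def init_params(feat_dim, hidden_dim, seed=0):
    """Randomly initialised weights (hypothetical; a real model would be trained)."""
    rng = np.random.default_rng(seed)
    return {
        "W_self": rng.normal(scale=0.1, size=(feat_dim, hidden_dim)),
        "W_pool": rng.normal(scale=0.1, size=(feat_dim, hidden_dim)),
        "b": np.zeros(hidden_dim),
        "w_out": rng.normal(scale=0.1, size=hidden_dim),
    }


def score_users(params, users):
    """Deep Sets style layer: one score per user.

    users: array of shape (n_users, feat_dim); n_users may change between calls.
    Each user is combined with a mean-pooled summary of the whole set, so
    reordering the users simply reorders the scores (permutation equivariance).
    """
    pooled = users.mean(axis=0, keepdims=True)  # order-independent set summary
    hidden = np.tanh(users @ params["W_self"] + pooled @ params["W_pool"] + params["b"])
    return hidden @ params["w_out"]


def allocate_bandwidth(scores, total_bandwidth):
    """Assumed allocation rule: split the budget by a softmax over the scores."""
    weights = np.exp(scores - scores.max())  # subtract max for numerical stability
    return total_bandwidth * weights / weights.sum()
```

Because the shared weights act on each user row and on a pooled summary, the same parameters can score 2 users or 200, which is the property that makes a set-based encoder attractive when clients arrive and depart over time.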
