Deep Reinforcement Learning for Time Scheduling in RF-Powered Backscatter Cognitive Radio Networks


Oct 03, 2018

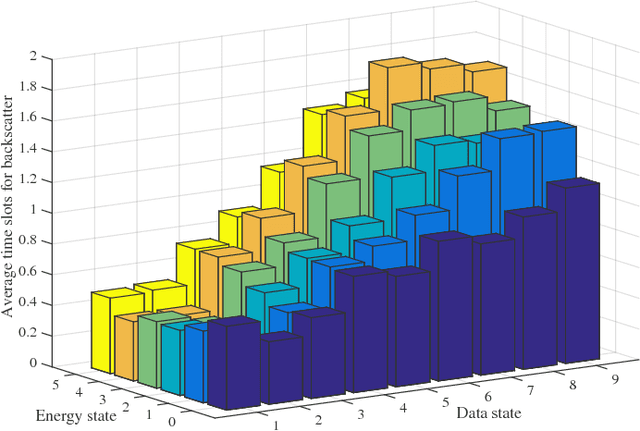

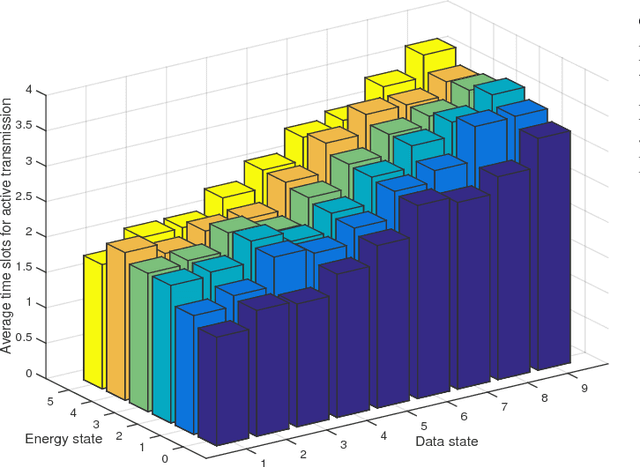

In an RF-powered backscatter cognitive radio network, multiple secondary users communicate with a secondary gateway either by backscattering or by harvesting energy and then actively transmitting their data, depending on the primary channel state. To coordinate the transmissions of multiple secondary transmitters, the secondary gateway needs to schedule the backscattering time, energy harvesting time, and transmission time among them. However, under the dynamics of the primary channel and the uncertainty of the energy state of the secondary transmitters, it is challenging for the gateway to find a time scheduling mechanism that maximizes the total throughput. In this paper, we propose to use a deep reinforcement learning algorithm to derive an optimal time scheduling policy for the gateway. Specifically, to deal with the large state and action spaces of the problem, we adopt a Double Deep Q-Network (DDQN) that enables the gateway to learn the optimal policy. The simulation results show that the proposed deep reinforcement learning algorithm outperforms non-learning schemes in terms of network throughput.
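The abstract names a Double Deep Q-Network but does not spell out its update rule. As a rough illustration only, the sketch below computes the standard Double DQN learning target for a single transition: the online network picks the next action and the target network scores it. The function name `double_dqn_target`, its parameters, and the idea of using per-slot throughput as the reward are assumptions made for this example and are not taken from the paper.

```python
from typing import Sequence


def double_dqn_target(
    reward: float,
    next_q_online: Sequence[float],
    next_q_target: Sequence[float],
    gamma: float = 0.99,
    done: bool = False,
) -> float:
    """Standard Double DQN target for one transition (illustrative sketch).

    reward        -- immediate reward (assumed here: throughput in the time frame)
    next_q_online -- Q-values of the next state from the online network
    next_q_target -- Q-values of the next state from the target network
    gamma         -- discount factor (hypothetical default)
    done          -- True if the next state is terminal
    """
    if done:
        # No future return after a terminal state.
        return reward
    # Action selection uses the online network ...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * next_q_target[best_action]


# Hypothetical Q-values over three candidate scheduling actions.
target = double_dqn_target(
    reward=1.0,
    next_q_online=[0.2, 0.9, 0.5],
    next_q_target=[0.4, 0.3, 0.8],
    gamma=0.5,
)
```

Here the online network prefers action 1, so the target is built from the target network's value for that action (0.3) rather than from the target network's own maximum (0.8). Decoupling selection from evaluation in this way is what reduces the overestimation bias of plain deep Q-learning.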
