Deep Reinforcement Learning Aided Packet-Routing For Aeronautical Ad-Hoc Networks Formed by Passenger Planes


Oct 28, 2021

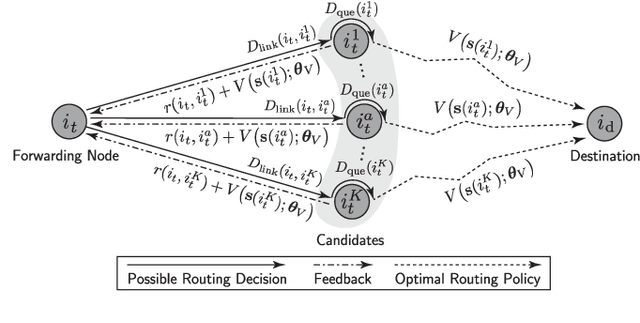

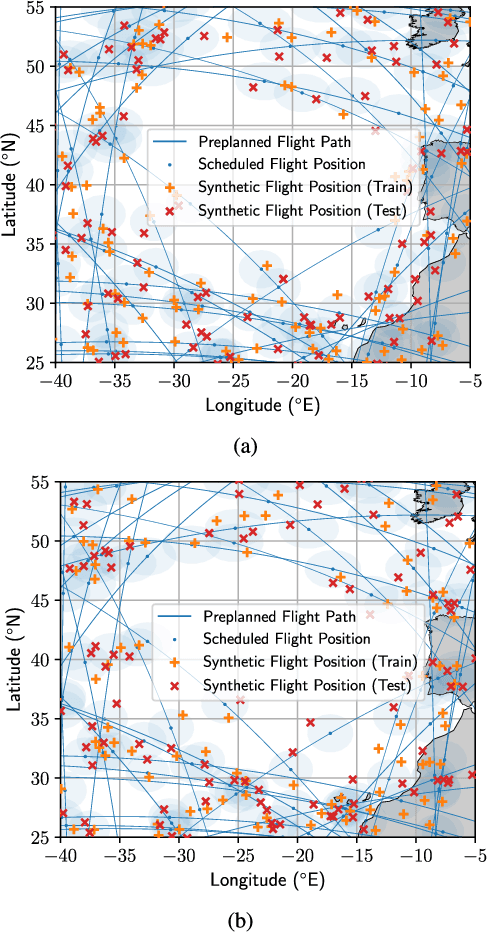

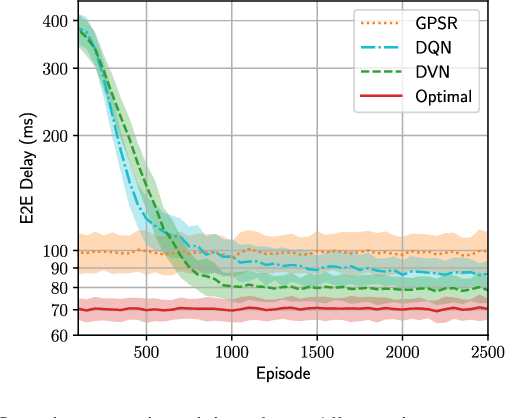

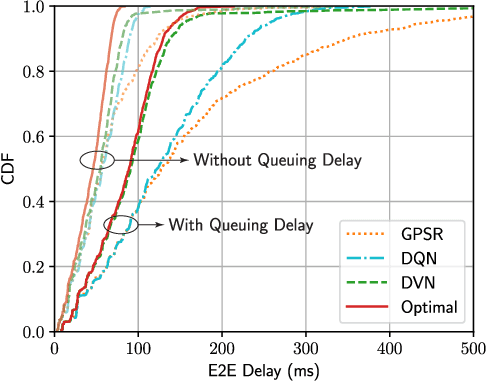

Data packet routing in aeronautical ad-hoc networks (AANETs) is challenging due to their highly dynamic topology. In this paper, we invoke deep reinforcement learning for routing in AANETs with the aim of minimizing the end-to-end (E2E) delay. Specifically, a deep Q-network (DQN) is conceived for capturing the relationship between the optimal routing decision and the local geographic information observed by the forwarding node. The DQN is trained offline on historical flight data and then stored on board each airplane to assist its routing decisions during flight. To boost the learning efficiency and the online adaptability of the proposed DQN-routing, we further exploit knowledge of the system's dynamics by using a deep value network (DVN) equipped with a feedback mechanism. Our simulation results show that both DQN-routing and DVN-routing achieve lower E2E delay than the benchmark protocol, and that DVN-routing performs similarly to optimal routing that relies on perfect global information.
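As a rough illustration of value-based next-hop selection, the sketch below assumes that the Q-value of forwarding from node `u` to neighbour `v` decomposes into a known per-hop delay plus the remaining E2E delay from `v`, i.e. Q(u, v) = d(u, v) + V(v). This decomposition, the positions, the communication range and the `link_delay` model are all hypothetical choices for the example, not taken from the paper. Here `V` is computed exactly by value iteration with full global knowledge, which corresponds to the "optimal routing" benchmark. The paper's DVN instead learns an approximation of such a value function from local observations, and that learned network is not reproduced here.

```python
import math

# Hypothetical toy snapshot: aircraft positions in km (x, y); "gs" is the ground station.
POSITIONS = {
    "A": (0.0, 0.0),
    "B": (300.0, 50.0),
    "C": (320.0, -200.0),
    "D": (600.0, 0.0),
    "gs": (850.0, 20.0),
}
COMM_RANGE_KM = 400.0  # assumed maximum air-to-air link range


def link_delay(u, v):
    """Hypothetical per-hop delay (ms): fixed processing time plus a distance-dependent term."""
    return 5.0 + 0.02 * math.dist(POSITIONS[u], POSITIONS[v])


def neighbors(u):
    """Nodes within the assumed communication range of u."""
    return [
        v for v in POSITIONS
        if v != u and math.dist(POSITIONS[u], POSITIONS[v]) <= COMM_RANGE_KM
    ]


def value_iteration(dest, iters=50):
    """Bellman update V[u] = min_v (link_delay(u, v) + V[v]) with V[dest] = 0.

    Uses global knowledge of all links, i.e. the idealised benchmark rather than a learned DVN.
    """
    V = {u: math.inf for u in POSITIONS}
    V[dest] = 0.0
    for _ in range(iters):
        for u in POSITIONS:
            if u == dest:
                continue
            V[u] = min((link_delay(u, v) + V[v] for v in neighbors(u)), default=math.inf)
    return V


def next_hop(u, V):
    """Greedy decision: the neighbour minimising Q(u, v) = link_delay(u, v) + V[v]."""
    return min(neighbors(u), key=lambda v: link_delay(u, v) + V[v])


def route(src, dest, V, max_hops=10):
    """Forward hop by hop until the destination is reached or the hop budget runs out."""
    path = [src]
    while path[-1] != dest and len(path) <= max_hops:
        path.append(next_hop(path[-1], V))
    return path


V = value_iteration("gs")
print(route("A", "gs", V))  # ['A', 'B', 'D', 'gs']
```

In this toy snapshot, `A` cannot reach the ground station directly, and the greedy rule picks `B` over `C` because its estimated total delay (about 32.2 ms vs. 34.4 ms under the assumed model) is lower. Replacing the exact `V` with a network that predicts it from locally observed geographic features is, loosely, the role the abstract assigns to the DVN.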
