Deep Predictive Models in Interactive Music

Jun 01, 2018

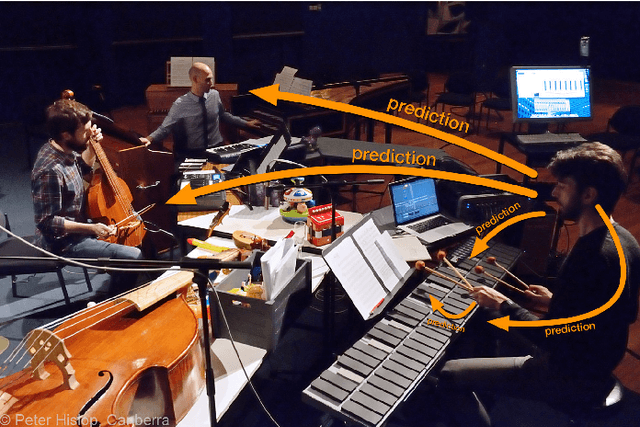

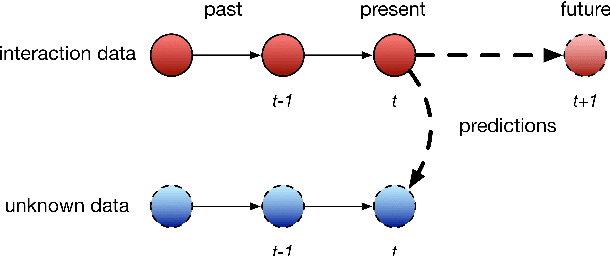

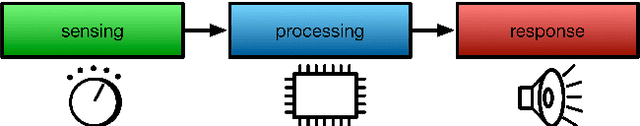

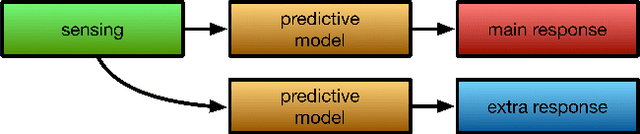

Musical performance requires prediction to operate instruments, to perform in groups and to improvise. We argue, with reference to a number of digital music instruments (DMIs), including two of our own, that predictive machine learning models can help interactive systems to understand their temporal context and ensemble behaviour. We also discuss how recent advances in deep learning highlight the role of prediction in DMIs, by allowing data-driven predictive models with a long memory of past states. We advocate for predictive musical interaction, where a predictive model is embedded in a musical interface, assisting users by predicting unknown states of musical processes. We propose a framework for characterising prediction as relating to the instrumental sound, ongoing musical process, or between members of an ensemble. Our framework shows that different musical interface design configurations lead to different types of prediction. We show that our framework accommodates deep generative models, as well as models for predicting gestural states, or other high-level musical information. We apply our framework to examples from our recent work and the literature, and discuss the benefits and challenges revealed by these systems as well as musical use-cases where prediction is a necessary component.
