Deep Point-to-Plane Registration by Efficient Backpropagation for Error Minimizing Function


Jul 14, 2022

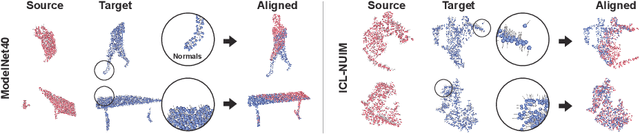

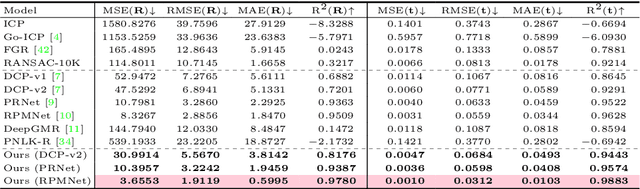

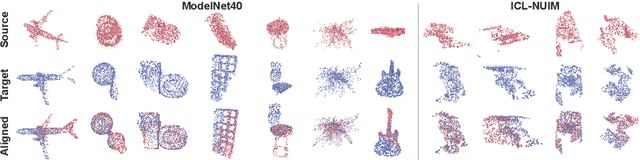

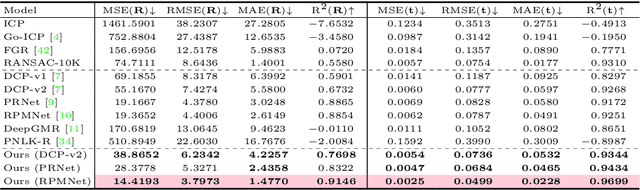

Traditional point set registration algorithms that minimize point-to-plane distances often estimate rigid transformations more accurately than those that minimize point-to-point distances. Nevertheless, recent deep-learning-based methods minimize point-to-point distances. In contrast, this paper proposes the first deep-learning-based approach to point-to-plane registration. The challenge is that a typical point-to-plane solver is iterative: it accumulates small transformations, each obtained by minimizing a linearized energy function. This iteration significantly enlarges the computation graph needed for backpropagation and can slow down both the forward and backward passes of the network. To solve this problem, we treat the estimated rigid transformation as a function of the input point clouds and derive its analytic gradients using the implicit function theorem. The resulting analytic gradient is independent of how the error-minimizing function (i.e., the rigid transformation) is obtained, which allows us to compute both the rigid transformation and its gradient efficiently. We implement the proposed point-to-plane registration module on top of several previous methods that minimize point-to-point distances and demonstrate that the extensions outperform the base methods, even on noisy point clouds and with low-quality point normals estimated from local point distributions.
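The implicit-function-theorem idea can be illustrated on a toy problem. The sketch below is not the paper's implementation. It assumes a 2D, rotation-only analogue of point-to-plane registration (point-to-line residuals with fixed correspondences), and every name in it (`solve_angle`, `stationarity`, `implicit_grad`, the sample data) is hypothetical. With energy E(φ) = Σ (nᵢ · (R(φ)pᵢ − qᵢ))² and stationarity condition g(φ, p) = ∂E/∂φ = 0 at the minimizer φ*, the theorem gives dφ*/dpᵢ = −(∂g/∂φ)⁻¹ ∂g/∂pᵢ, which needs only the converged angle and not the solver's iterations.

```python
import math

def rot(phi, p):
    """Rotate the 2D point p by angle phi."""
    c, s = math.cos(phi), math.sin(phi)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])

def drot(phi, p):
    """Derivative of rot(phi, p) with respect to phi."""
    c, s = math.cos(phi), math.sin(phi)
    return (-s * p[0] - c * p[1], c * p[0] - s * p[1])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def residual_terms(phi, p, q, n):
    """Return r = n.(R p - q), j = dr/dphi, and n.(R p)."""
    Rp = rot(phi, p)
    r = dot(n, (Rp[0] - q[0], Rp[1] - q[1]))
    return r, dot(n, drot(phi, p)), dot(n, Rp)

def solve_angle(P, Q, N, phi=0.0, iters=100):
    """Iterative Gauss-Newton solver on the linearized energy.
    Nothing here is differentiated through."""
    for _ in range(iters):
        num = den = 0.0
        for p, q, n in zip(P, Q, N):
            r, j, _ = residual_terms(phi, p, q, n)
            num += r * j
            den += j * j
        phi -= num / den
    return phi

def stationarity(phi, P, Q, N):
    """g = dE/dphi and h = d2E/dphi2 for E = sum of squared residuals."""
    g = h = 0.0
    for p, q, n in zip(P, Q, N):
        r, j, nRp = residual_terms(phi, p, q, n)
        g += 2.0 * r * j
        h += 2.0 * (j * j - r * nRp)  # uses d2(R p)/dphi2 = -R p
    return g, h

def implicit_grad(phi, P, Q, N):
    """d phi*/d p_i for each source point via the implicit function theorem."""
    _, h = stationarity(phi, P, Q, N)
    c, s = math.cos(phi), math.sin(phi)
    grads = []
    for p, q, n in zip(P, Q, N):
        r, j, _ = residual_terms(phi, p, q, n)
        Rt_n = (c * n[0] + s * n[1], -s * n[0] + c * n[1])    # dr/dp = R^T n
        dRt_n = (-s * n[0] + c * n[1], -c * n[0] - s * n[1])  # dj/dp = (dR/dphi)^T n
        dg = (2.0 * (j * Rt_n[0] + r * dRt_n[0]),
              2.0 * (j * Rt_n[1] + r * dRt_n[1]))             # dg/dp_i
        grads.append((-dg[0] / h, -dg[1] / h))
    return grads

# Hypothetical sample data: source points, unit target normals, and targets
# built from a 0.3 rad rotation plus small fixed offsets standing in for noise.
P = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.5), (0.5, -1.0), (2.0, 1.0)]
N = [(1.0, 0.0), (0.0, 1.0), (0.6, 0.8), (0.8, -0.6), (0.0, 1.0)]
OFFSETS = [(0.02, -0.01), (-0.01, 0.03), (0.0, 0.02), (0.01, 0.0), (-0.02, 0.01)]
Q = [(rot(0.3, p)[0] + o[0], rot(0.3, p)[1] + o[1]) for p, o in zip(P, OFFSETS)]

phi_star = solve_angle(P, Q, N)
grads = implicit_grad(phi_star, P, Q, N)
```

Because `implicit_grad` takes only the converged `phi_star`, the solver could be replaced by any other minimizer without changing the gradient code. In a learning framework the same expression would typically be wrapped as a custom backward rule, so the solver loop never enters the computation graph.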
