Deep Learning for Longitudinal Gross Tumor Volume Segmentation in MRI-Guided Adaptive Radiotherapy for Head and Neck Cancer

Paper and Code

Dec 01, 2024

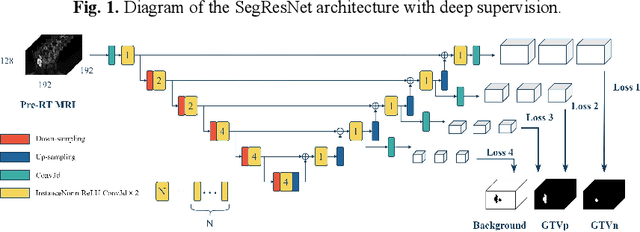

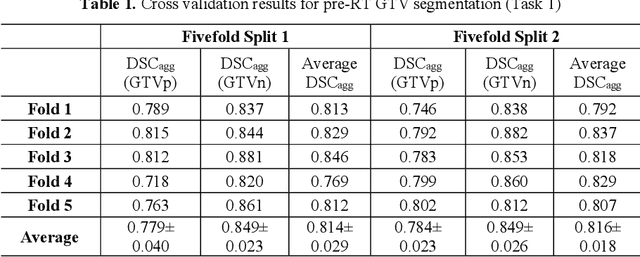

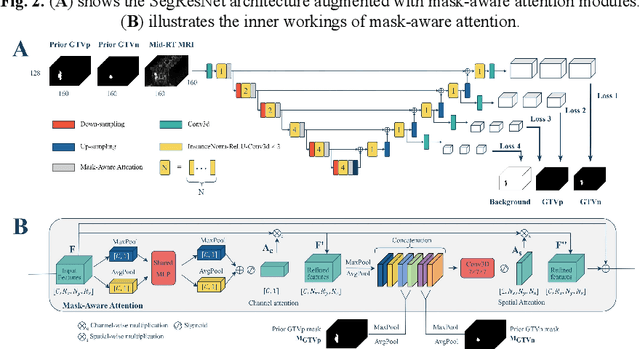

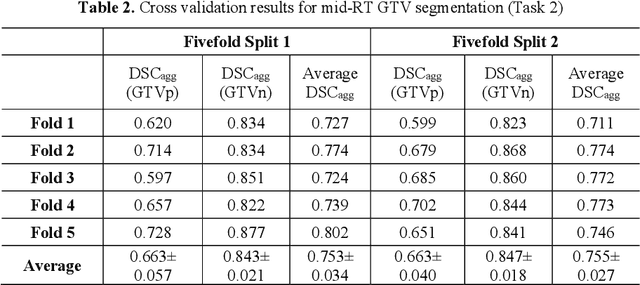

Accurate segmentation of gross tumor volume (GTV) is essential for effective MRI-guided adaptive radiotherapy (MRgART) in head and neck cancer. However, manual segmentation of the GTV over the course of therapy is time-consuming and prone to interobserver variability. Deep learning (DL) has the potential to overcome these challenges by automatically delineating GTVs. In this study, our team, $\textit{UW LAIR}$, addressed both pre-radiotherapy (pre-RT) tumor volume segmentation (Task 1) and mid-radiotherapy (mid-RT) tumor volume segmentation (Task 2). To this end, we developed a series of DL models for longitudinal GTV segmentation. The backbone of our models for both tasks was SegResNet with deep supervision. For Task 1, we trained the model on a combined dataset of pre-RT and mid-RT MRI data, which improved the aggregated Dice similarity coefficient (DSCagg) on an internal testing set compared to models trained solely on pre-RT MRI data. For Task 2, we introduced mask-aware attention modules, which enable pre-RT GTV masks to influence intermediate features learned from mid-RT data. This attention-based approach yielded slight improvements over the baseline method, which concatenated mid-RT MRI with pre-RT GTV masks as input. In the final testing phase for Task 1, the ensemble of 10 pre-RT segmentation models achieved an average DSCagg of 0.794, with 0.745 for primary GTV (GTVp) and 0.844 for metastatic lymph nodes (GTVn). For Task 2, the ensemble of 10 mid-RT segmentation models attained an average DSCagg of 0.733, with 0.607 for GTVp and 0.859 for GTVn, leading us to $\textbf{achieve 1st place}$. In summary, we presented a collection of DL models that could facilitate GTV segmentation in MRgART, offering the potential to streamline radiation oncology workflows. Our code and model weights are available at https://github.com/xtie97/HNTS-MRG24-UWLAIR.
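The abstract reports DSCagg without defining it. As a rough illustration, the sketch below assumes the commonly used definition, in which intersections and volumes are summed over all cases before the Dice ratio is taken, rather than averaging per-case Dice scores. The function names and the label encoding (1 for GTVp, 2 for GTVn) are assumptions made for this example and are not taken from the authors' repository.

```python
import numpy as np


def dsc_agg(preds, refs, label):
    """Aggregated Dice for one label over a list of cases.

    Assumed definition: 2 * sum_i |P_i & R_i| / sum_i (|P_i| + |R_i|),
    where P_i and R_i are the predicted and reference masks of case i.
    """
    intersection = 0
    total = 0
    for pred, ref in zip(preds, refs):
        p = pred == label
        r = ref == label
        intersection += int(np.logical_and(p, r).sum())
        total += int(p.sum()) + int(r.sum())
    # Undefined when the label never appears in any prediction or reference.
    return 2.0 * intersection / total if total > 0 else float("nan")


def mean_dsc_agg(preds, refs, labels=(1, 2)):
    """Unweighted mean of the per-label aggregated Dice scores."""
    return float(np.mean([dsc_agg(preds, refs, lab) for lab in labels]))


# Toy 2x2 "volumes" for two cases (hypothetical labels: 1 = GTVp, 2 = GTVn).
toy_preds = [np.array([[1, 1], [0, 2]]), np.array([[0, 0], [2, 2]])]
toy_refs = [np.array([[1, 0], [0, 2]]), np.array([[1, 0], [2, 0]])]

print(dsc_agg(toy_preds, toy_refs, label=1))  # GTVp
print(dsc_agg(toy_preds, toy_refs, label=2))  # GTVn
print(mean_dsc_agg(toy_preds, toy_refs))
```

Under this reading, the reported averages are consistent with an unweighted mean of the two per-structure scores: (0.745 + 0.844) / 2 ≈ 0.794 for Task 1 and (0.607 + 0.859) / 2 = 0.733 for Task 2. Pooling over cases keeps the metric defined when an individual case contains no tumor for a given label, which a per-case Dice average would not handle.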
