Deep Learning Based Sphere Decoding


Jul 06, 2018

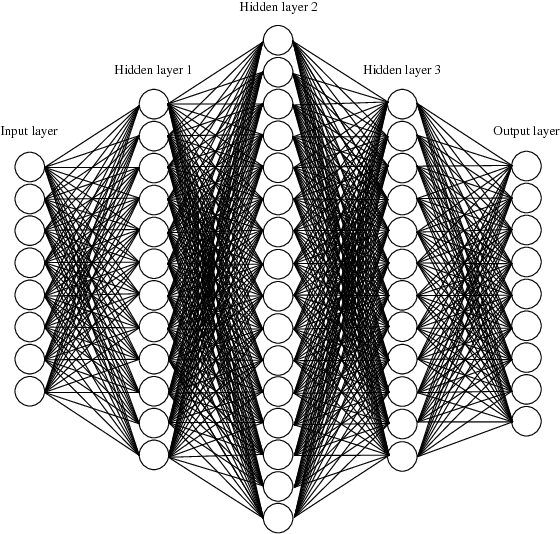

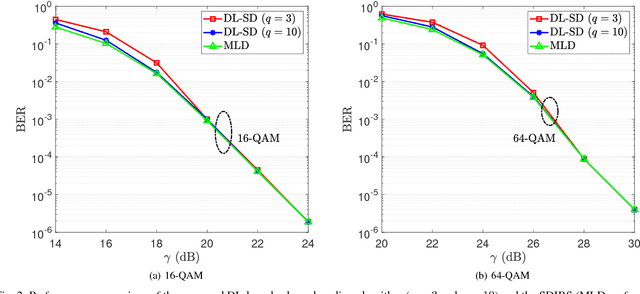

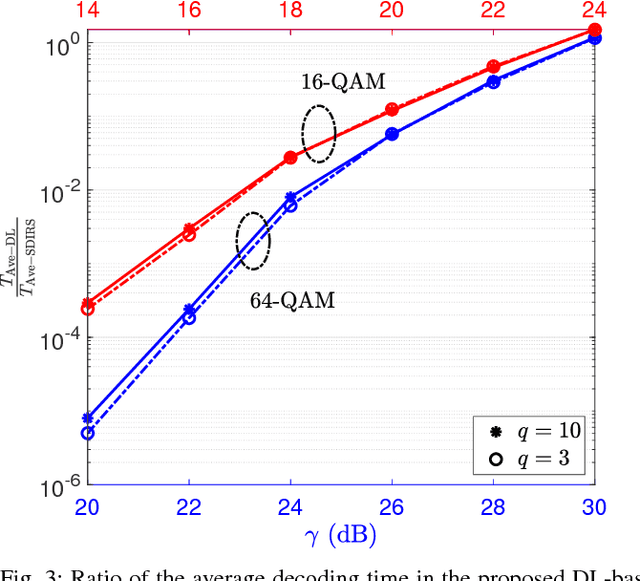

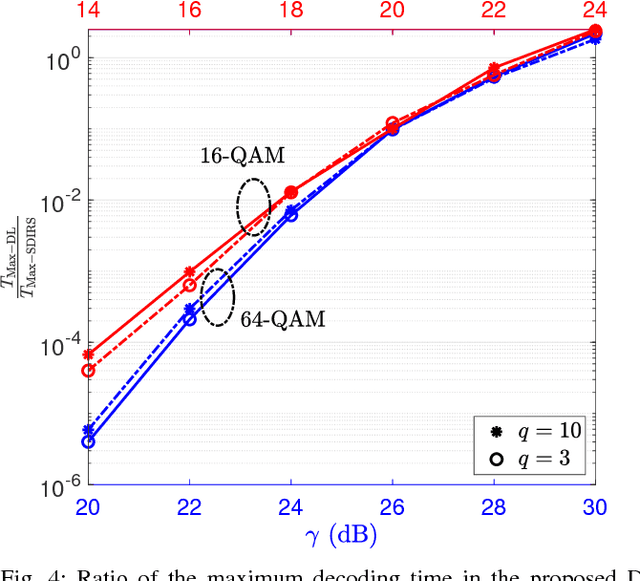

In this paper, a deep learning (DL)-based sphere decoding algorithm is proposed, in which the radius of the decoding hypersphere is learned by a deep neural network (DNN). The proposed algorithm performs very close to optimal maximum likelihood decoding (MLD) over a wide range of signal-to-noise ratios (SNRs), while its computational complexity is significantly lower than that of existing sphere decoding variants. This improvement is attributed to the DNN's ability to learn the radius of the hypersphere used in decoding. The expected complexity of the proposed DL-based algorithm is derived analytically and compared with that of existing algorithms. It is shown that the number of lattice points inside the decoding hypersphere is drastically reduced in the DL-based algorithm, in both the average and worst-case senses. The effectiveness of the proposed algorithm is demonstrated through simulations of high-dimensional multiple-input multiple-output (MIMO) systems using high-order modulations.
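To make the role of the radius concrete, the following is a minimal sketch of a generic depth-first sphere decoder for a real-valued model y = Hs + noise. It is not the paper's implementation: the function names `sphere_decode` and `ml_decode`, the square channel matrix, and the real-valued alphabet are all assumptions made for illustration, and the `radius` argument is simply supplied by the caller where the proposed algorithm would use a value predicted by the DNN.

```python
import itertools
import numpy as np


def ml_decode(H, y, alphabet):
    """Brute-force maximum likelihood decoding (reference only, exponential cost)."""
    n = H.shape[1]
    candidates = (np.array(c) for c in itertools.product(alphabet, repeat=n))
    return min(candidates, key=lambda s: np.sum((y - H @ s) ** 2))


def sphere_decode(H, y, alphabet, radius):
    """Depth-first sphere decoder (illustrative sketch, square H assumed).

    Returns (best_point, leaves), where best_point is the closest lattice
    point found inside the sphere (None if the sphere is empty) and leaves
    counts the full-length candidates that were accepted during the search.
    """
    n = H.shape[1]
    Q, R = np.linalg.qr(H)      # H = Q R with R upper triangular
    z = Q.T @ y                 # for square H, ||y - H s|| equals ||z - R s||
    state = {"dist": radius ** 2, "s": None, "leaves": 0}
    s = np.zeros(n)

    def search(level, partial):
        for a in alphabet:
            s[level] = a
            # Row `level` of R only involves symbols level..n-1, already fixed.
            resid = z[level] - R[level, level:] @ s[level:]
            d = partial + resid ** 2
            if d > state["dist"]:
                continue        # partial distance already outside the sphere
            if level == 0:
                # Full candidate inside the sphere: keep it, shrink the sphere.
                state["dist"] = d
                state["s"] = s.copy()
                state["leaves"] += 1
            else:
                search(level - 1, d)

    search(n - 1, 0.0)
    return state["s"], state["leaves"]
```

A small radius prunes more of the search tree but risks an empty sphere, in which case this sketch returns `None` and the caller has to retry with a larger value. A large radius always contains the ML solution but visits more lattice points. This trade-off is why the choice of radius drives the complexity figures discussed in the abstract.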
