Deep Iterative Frame Interpolation for Full-frame Video Stabilization


Sep 05, 2019

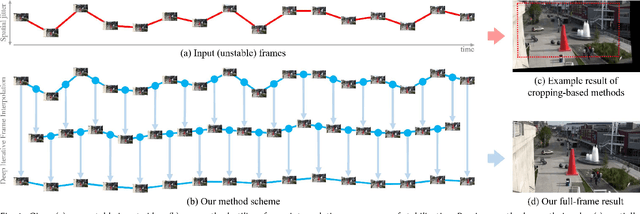

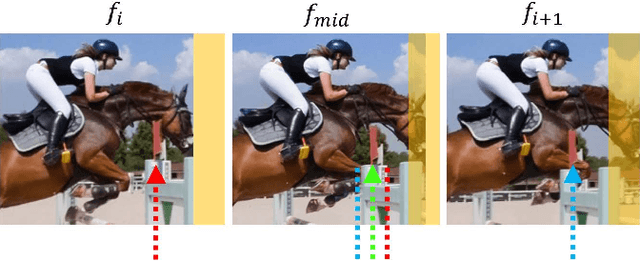

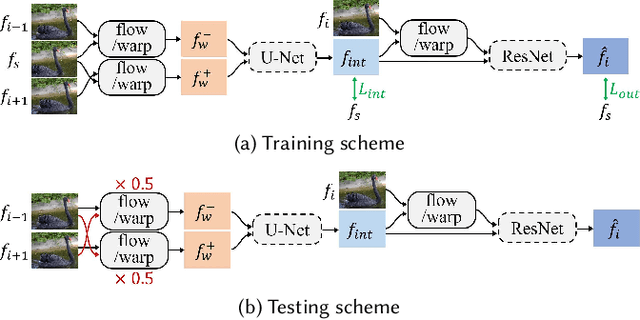

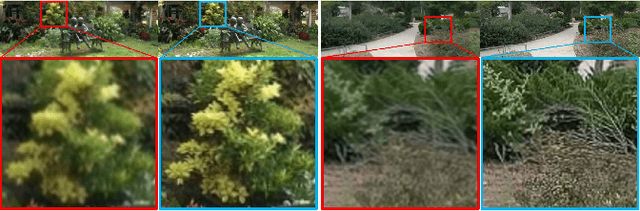

Video stabilization is a fundamental technique for producing higher-quality video. Prior works have explored video stabilization extensively, but most of them crop the frame boundaries and introduce moderate levels of distortion. We present a novel deep approach to video stabilization that generates video frames without cropping and with low distortion. The proposed framework uses frame interpolation techniques to generate in-between frames, which reduces inter-frame jitter. When interpolation is applied iteratively, the stabilization effect becomes stronger. A major advantage is that our framework is end-to-end trainable in an unsupervised manner. In addition, our method runs in near real-time (15 fps). To the best of our knowledge, this is the first work to propose an unsupervised deep approach to full-frame video stabilization. We show the advantages of our method through quantitative and qualitative evaluations against state-of-the-art methods.
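The idea that iterated interpolation strengthens stabilization can be illustrated with a toy sketch. This is not the paper's network: each frame is reduced to a single hypothetical camera offset, and the learned frame interpolator is replaced by a plain average of the two neighbouring offsets. The update rule (replace every interior frame with the interpolation of its neighbours, keep the first and last frames fixed) and the names `interpolate_midpoint`, `stabilize`, and `jitter` are assumptions made for illustration only.

```python
def interpolate_midpoint(prev_pos, next_pos):
    """Stand-in for a learned frame interpolator (assumption): average two offsets."""
    return 0.5 * (prev_pos + next_pos)


def stabilize(positions, iterations=1):
    """Replace each interior offset with the midpoint of its neighbours, repeatedly.

    The first and last offsets are left untouched because they lack one neighbour.
    Each pass reads only the offsets produced by the previous pass.
    """
    current = list(positions)
    for _ in range(iterations):
        updated = current[:]
        for i in range(1, len(current) - 1):
            updated[i] = interpolate_midpoint(current[i - 1], current[i + 1])
        current = updated
    return current


def jitter(positions):
    """Mean absolute change in offset between consecutive frames."""
    steps = [abs(b - a) for a, b in zip(positions, positions[1:])]
    return sum(steps) / len(steps)


# Hypothetical per-frame camera offsets for a shaky clip.
shaky = [0.0, 2.0, -1.0, 3.0, 0.0, 2.5, -0.5, 1.0]

if __name__ == "__main__":
    for n in (0, 1, 10, 200):
        print(n, round(jitter(stabilize(shaky, n)), 4))
```

In this simplified setting, repeated passes pull the offsets toward a straight path between the first and last frames, which mirrors the abstract's claim that more iterations yield stronger stabilization. A real system would interpolate full images, where interpolation quality and blur accumulation also matter.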
