Deep Graph Generators

Jun 07, 2020

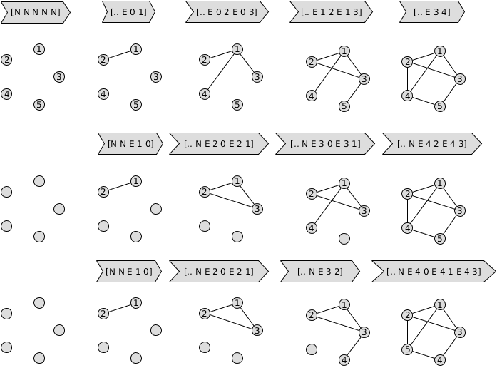

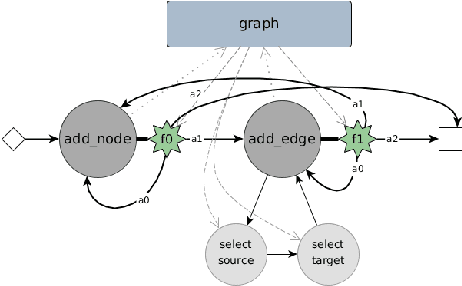

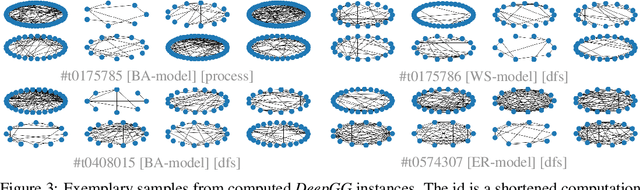

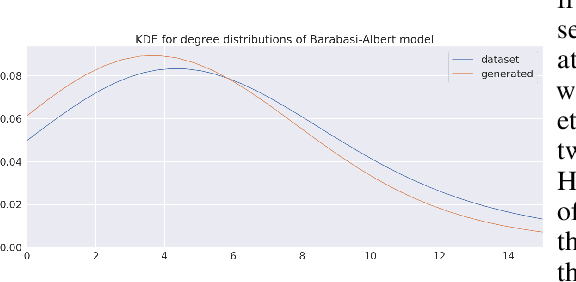

Learning distributions of graphs can be used for automatic drug discovery, molecular design, complex network analysis, and much more. We present an improved framework for learning generative models of graphs based on the idea of deep state machines. To learn state transition decisions, we use a set of graph and node embedding techniques as the memory of the state machine. Our analysis is based on learning the distributions of random graph generators, for which we provide statistical tests to determine which properties can be learned and how well the original distribution of graphs is represented. We show that the design of the state machine favors specific distributions. We learn models of graphs with up to 150 vertices. Code and parameters are publicly available to reproduce our results.
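To make the state-machine idea concrete, here is a minimal sketch of how a graph can be grown through a sequence of transition decisions. It is not the implementation from the paper: the function name `generate_graph` and the parameters `p_add_node` and `p_add_edge` are hypothetical, and the fixed probabilities are only stand-ins for the learned decision functions that a trained model would compute from node and graph embeddings.

```python
import random


def generate_graph(max_nodes, p_add_node=0.9, p_add_edge=0.5, rng=None):
    """Grow an undirected graph by stepping through a small state machine.

    Assumed states (illustrative only): add a node or stop, then add an
    edge from the new node or move on, then choose the edge target.
    Fixed probabilities replace the learned transition decisions.
    """
    if rng is None:
        rng = random.Random()
    edges = set()
    num_nodes = 0
    # Decision 1: add another node, or stop generating?
    while num_nodes < max_nodes and rng.random() < p_add_node:
        new_node = num_nodes
        num_nodes += 1
        # Existing nodes are the only valid edge targets.
        candidates = list(range(new_node))
        rng.shuffle(candidates)
        # Decision 2: add another edge from the new node, or move on?
        while candidates and rng.random() < p_add_edge:
            # Decision 3: choose the target (uniformly at random here).
            target = candidates.pop()
            edges.add((target, new_node))
    return num_nodes, edges


if __name__ == "__main__":
    n, e = generate_graph(max_nodes=150, rng=random.Random(0))
    print(f"generated a graph with {n} vertices and {len(e)} edges")
```

In a learned model, each of the three decisions would be a small network conditioned on the current embeddings, and the choice of which decisions exist and in what order is one plausible reading of why the design of the state machine favors specific distributions.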
