Deep frequency principle towards understanding why deeper learning is faster

Paper and Code

Jul 28, 2020

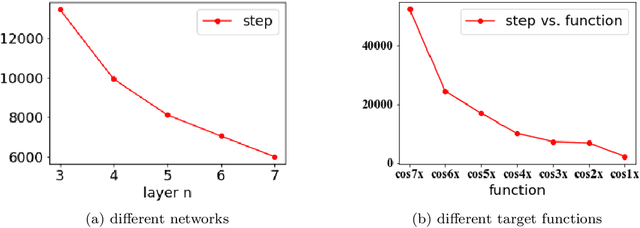

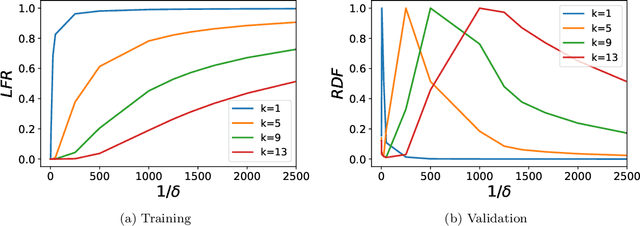

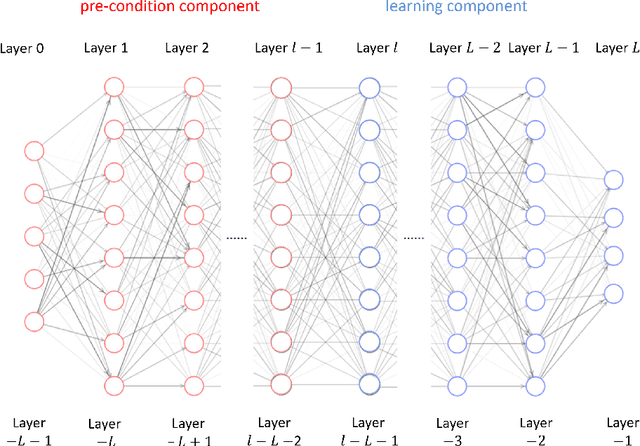

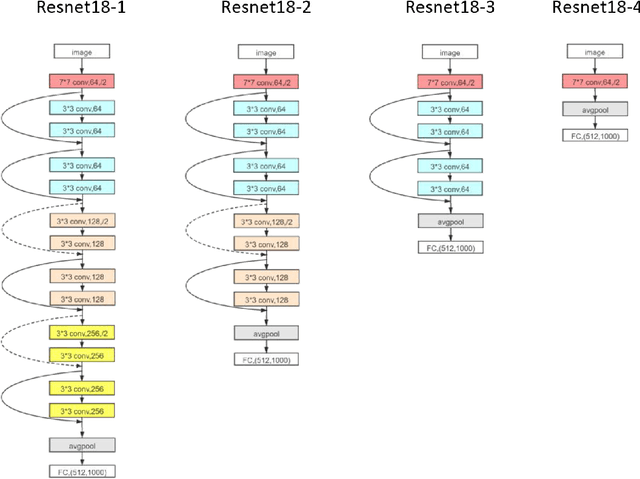

Understanding the effect of depth in deep learning is a critical problem. In this work, we utilize the Fourier analysis to empirically provide a promising mechanism to understand why deeper learning is faster. To this end, we separate a deep neural network into two parts, one is a pre-condition component and the other is a learning component, in which the output of the pre-condition one is the input of the learning one. Based on experiments of deep networks and real dataset, we propose a deep frequency principle, that is, the effective target function for a deeper hidden layer has a bias towards a function with more low frequency during the training. Therefore, the learning component effectively learns a lower frequency function if the pre-condition component has more layers. Due to the well-studied frequency principle, i.e., deep neural networks learn lower frequency functions faster, the deep frequency principle provides a reasonable explanation to why deeper learning is faster. We believe these empirical studies would be valuable for future theoretical studies of the effect of depth in deep learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge