Deep Context-Aware Novelty Detection

Paper and Code

Jun 01, 2020

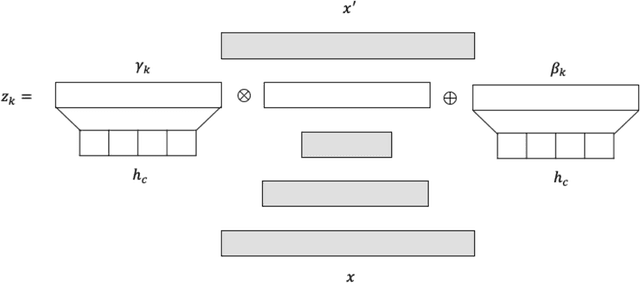

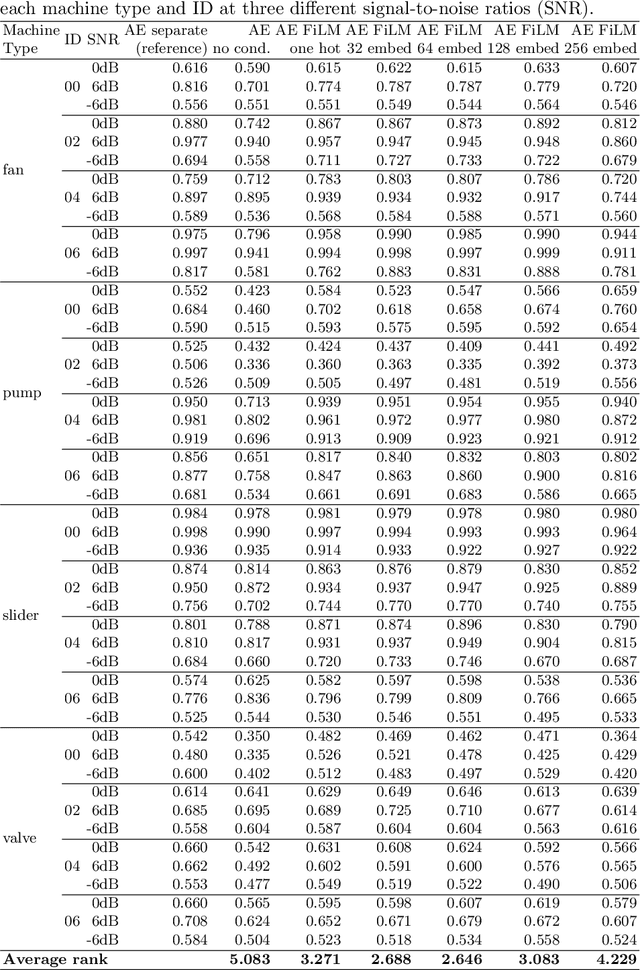

A common assumption in novelty detection is that the distributions of both "normal" and "novel" data are static. This often does not hold: the data may evolve over time, or the definition of normal and novel may depend on contextual information, and in both cases the distributions change. Training a model then becomes difficult on datasets where the distribution of normal data in one scenario is similar to that of novel data in another. In this paper we propose a context-aware approach to novelty detection for deep autoencoders. We create a semi-supervised network architecture which utilises auxiliary labels to reveal contextual information, allowing the model to adapt to a variety of normal and novel scenarios. We evaluate our approach on both synthetic image data and real-world audio data displaying these characteristics.
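The core difficulty described above, that the same sample can be normal in one context and novel in another, can be illustrated with a toy sketch. This is not the paper's architecture: it swaps the deep semi-supervised autoencoder for one rank-1 linear autoencoder (equivalent to PCA) per context, and the context names `"a"` and `"b"`, the synthetic 2-D data, and the helper functions are all hypothetical choices made for illustration.

```python
import numpy as np


def fit_linear_autoencoder(x, k):
    """Fit a rank-k linear autoencoder (equivalent to PCA) on the rows of x."""
    mean = x.mean(axis=0)
    # The right singular vectors of the centred data are the principal directions.
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:k]


def reconstruction_error(x, model):
    """Squared reconstruction error per row, used here as the novelty score."""
    mean, components = model
    centered = x - mean
    recon = centered @ components.T @ components
    return np.sum((centered - recon) ** 2, axis=1)


rng = np.random.default_rng(0)

# Hypothetical contexts: normal data varies along axis 0 in context "a"
# and along axis 1 in context "b".
normal_a = np.column_stack([rng.normal(0, 3, 200), rng.normal(0, 0.1, 200)])
normal_b = np.column_stack([rng.normal(0, 0.1, 200), rng.normal(0, 3, 200)])

# One model per context stands in for a single context-conditioned network.
models = {
    "a": fit_linear_autoencoder(normal_a, k=1),
    "b": fit_linear_autoencoder(normal_b, k=1),
}

# The same sample is scored under each context.
sample = np.array([[2.5, 0.0]])
score_a = reconstruction_error(sample, models["a"])[0]
score_b = reconstruction_error(sample, models["b"])[0]

print(f"novelty score in context a: {score_a:.4f}")
print(f"novelty score in context b: {score_b:.4f}")
```

The sample reconstructs well under context `"a"` and poorly under context `"b"`, so a single context-free threshold on reconstruction error cannot classify it correctly in both scenarios. The approach in the abstract addresses this inside one network by using auxiliary labels, rather than by training separate models as this sketch does.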
